This article documents all of the changes we found in Android 13 so developers can prepare their applications or devices while users can know what to expect from the new version. Although Google has not publicly documented many of the changes in Android 13, we have painstakingly combed through the Android developer docs, AOSP Gerrit, and other sources to put together a comprehensive changelog of everything new in Android 13.

What is Android 13?

Android 13, also known by its internal dessert name Android Tiramisu, is the latest version of the Android operating system. Building on the foundation laid by Android 12, described by many as the biggest Android OS update since 2014, this year’s Android 13 release refines the feature set and tweaks the user interface in subtle ways. However, it also includes many significant behavioral and platform changes under the hood, as well as several new platform APIs that developers should be aware of. For large screen devices in particular, Android 13 also builds upon the enhancements and features introduced in Android 12L, the feature drop for large screen devices.

Android 13 is now publicly available. Before the stable release, Google shared multiple preview builds so developers could test their applications. The early preview builds provided a first look at Android 13 and introduced many, but not all, of the new features, API updates, user interface tweaks, platform changes, and behavioral changes to the Android platform. Since Android 13 Beta 3 in June, however, the APIs and app-facing system behaviors have been frozen. Google calls this “Platform Stability”, a term that lets developers know that they can begin updating their apps without any fear of breaking changes. And since Android 13 Beta 4’s release in mid-July, developers have been able to publish compatible versions of their apps.

When is the Android 13 release date?

Google released Android 13 on August 15, 2022. The source code is now available on AOSP, so system engineers can now begin compiling their own builds based on the latest Android release.

There were 2 developer previews and 4 betas during development of Android 13. Android 13 reached Platform Stability with the third beta release in June 2022. Once Platform Stability was reached, Android 13’s SDK and NDK APIs and app-facing system behaviors were finalized. Since the Android 12L release came with framework API level 32, Android 13 was released alongside framework API level 33.

The Developer Previews were intended for developers only and could thus only be installed manually. With the launch of the Android 13 beta program, however, Pixel users can enroll in the program to have the release roll out to their devices over the air. Pixel devices that are eligible to install the Android 13 Beta include the Pixel 4, Pixel 4 XL, Pixel 4a, Pixel 4a (5G), Pixel 5, Pixel 5a with 5G, Pixel 6, and Pixel 6 Pro.

Although the initial public release of Android 13 is now available, the Android 13 beta program has not ended. Users who have already enrolled in the beta program can remain enrolled, and users who have not already enrolled in the program can enroll themselves. The beta program will proceed with testing the Android 13 QPR1, Android 13 QPR2, and Android 13 QPR3 releases. The program will conclude with the QPR3 release as Android 14 will already be in preview at that time.

At Google I/O 2022, multiple OEMs launched their own Android 13 Developer Preview/Beta programs for a select few devices. These OEMs include ASUS, Lenovo, Nokia (HMD Global), OnePlus, OPPO, Realme, Sharp, Tecno, Vivo, Xiaomi, and ZTE, and cover devices including the ASUS ZenFone 8, Lenovo Tab P12 Pro, Nokia X20, OnePlus 10 Pro, OPPO Find X5 Pro, OPPO Find N, Realme GT 2 Pro, Sharp AQUOS sense6, Tecno CAMON 19 Pro, Vivo X80 Pro, Xiaomi 12, Xiaomi 12 Pro, Xiaomi Pad 5, and ZTE Axon 40 Ultra. The full list of Android 13 beta builds available from OEMs can be found on this page.

Users who do not have a Pixel device or one of the aforementioned OEM devices can try the Android 13 Beta by installing an official Generic System Image (GSI). Alternatively, Android 13 can be installed on PCs through the Android Emulator.

What are the new features in Android 13?

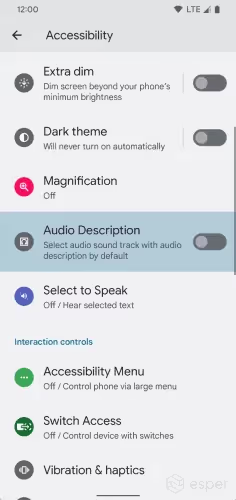

Accessibility audio description

Under Accessibility settings, there’s a new “Audio Description” toggle. The description of this toggle reads as follows: “Select audio sound track with audio description by default.” The value of this toggle is stored in Settings.Secure.enabled_accessibility_audio_description_by_default.

In the Android 13 developer documentation, there’s a new isAudioDescriptionRequested method in AccessibilityManager that apps can call to determine if the user wants to select a sound track with audio description by default. As the documentation explains, audio description is a form of narration used to provide information about key visual elements in a media work for the benefit of visually impaired users. Apps can also register a listener to detect changes in the audio description state.

Accessibility magnifier can now follow the text as you type

Under Settings > Accessibility > Magnification, a new “Follow typing” toggle has been added that makes the “magnification area automatically [follow] the text as you type.” The value of this toggle is stored in

Quick Settings tiles for color correction & one-handed mode

In addition to the Quick Setting tile for the QR code scanner, Google has added other new tiles. These include:

- A Quick Setting tile to toggle color correction.

- A Quick Setting tile to toggle one-handed mode.

  - One-handed mode is disabled by default in AOSP but can be enabled with ‘setprop ro.support_one_handed_mode true’. One-handed mode settings won’t appear on large screen devices, but the Quick Setting tile can be appended to the set of active tiles by adding “onehanded” to Settings.Secure.sysui_qs_tiles.

- The Quick Setting tile for “Device Controls” will have its title changed to “Home” when the user has selected Google Home as the Controls provider.

- A Quick Setting tile to launch a QR code scanner. Read this section for more information on this feature.
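As a rough illustration of appending “onehanded” to the active tile set, the sketch below edits a tile list offline and builds the matching adb command without running it. It assumes that sysui_qs_tiles holds a comma-separated list of tile names and that adb is on the PATH; the function names are hypothetical.

```python
import shlex


def append_tile(current_tiles, tile="onehanded"):
    """Return the tile list with `tile` appended, unless it is already present.

    Assumption: the setting is a comma-separated string of tile names.
    """
    tiles = [t for t in current_tiles.split(",") if t]
    if tile not in tiles:
        tiles.append(tile)
    return ",".join(tiles)


def settings_put_command(value):
    """Build (but do not run) the adb command that writes the new tile list.

    The value is quoted because the device shell would otherwise interpret
    characters such as parentheses that can appear in tile specs.
    """
    remote = "settings put secure sysui_qs_tiles " + shlex.quote(value)
    return ["adb", "shell", remote]
```

Reading the current value first (for example with ‘settings get secure sysui_qs_tiles’) and passing it through append_tile avoids overwriting tiles the user has already arranged.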

In Android 13 preview builds, a Quick Setting tile to launch Privacy Controls, where users can toggle the camera, microphone, and location availability, was added. Privacy Controls also contained a shortcut to launch security settings. The tile was provided by the PermissionController Mainline module but was removed in later preview builds. Instead, it seems the activity launched by the tile will be accessible by tapping the privacy indicators for the microphone, camera, or location.

Bluetooth LE Audio support

Bluetooth LE Audio is the next-generation Bluetooth standard defined by the Bluetooth SIG. It promises lower power consumption and higher audio quality using the new Low Complexity Communication Codec (LC3). The new standard also introduces features such as location-based audio sharing, multi-device audio broadcasting, and hearing aid support.

There are multiple products on the market with hardware support for BLE Audio, and to prepare for the release of new BLE Audio-enabled audio products, Google has built support for LE Audio into Android 13. Android 13’s Bluetooth stack supports BLE Audio, from including an LC3 encoder and decoder to integrating support for detecting and swapping to the codec in developer options. Developers do not have to make any changes to their applications to take advantage of the new capabilities afforded by Bluetooth LE Audio.

One of the key features of BLE Audio is Broadcast Audio, which lets an audio source device broadcast audio streams to many audio sink devices. Android 13, of course, will support this feature. Devices with BLE Audio support will see an option to broadcast media when opening the media output picker. A dialog will inform users that they can “broadcast media to devices near [them], or listen to someone else’s broadcast.” Other users who are nearby with compatible Bluetooth devices can listen to media that’s being broadcast by scanning a QR code or entering the name and password for the broadcast.

MIDI 2.0 support

Musicians will be delighted to learn that Android 13 introduces support for the MIDI 2.0 standard. MIDI 2.0 was introduced in 2020 and adds bi-directionality so MIDI 2.0 devices can communicate with each other to auto-configure themselves or exchange information on available functionality. The new standard also makes controllers easier to use and adds enhanced, 32-bit resolution.

Spatial audio with head tracking support

Android 13 improves upon the initial spatial audio implementation introduced in Android 12L. The audio framework adds support for both static spatial audio and dynamic spatial audio with head tracking. Spatial audio produces immersive audio that seems like it’s coming from all around the user. However, spatial audio only works with media content that has a multichannel audio track for the decoder to output a multichannel stream.

Audio can be spatialized when played back through wired headphones or the phone’s speakers. However, spatial audio support must be implemented by the device maker. The system property ‘ro.audio.spatializer_enabled’ should be set to true if an audio spatializer service is present and enabled, while Settings.Secure.spatial_audio_enabled holds the value of the spatial audio toggle.

Devices with an audio spatializer service may have a toggle in Bluetooth settings to enable spatial audio. The description in settings warns that spatial audio only works with some media.

If connected to a Bluetooth audio product with a head tracking sensor, Android 13 can also show a toggle in Bluetooth settings to enable head tracking. Head tracking makes audio sound more realistic by shifting the position of audio as you move your head around. Devices that can interface with Bluetooth products containing head tracking sensors should declare the feature ‘android.hardware.sensor.dynamic.head_tracker.’

OEMs can use Android’s standardized platform architecture to integrate multichannel codecs. This architecture enables low latency head tracking and integration with a codec-agnostic spatializer. Google at I/O stated that Android 13 will include a standard spatializer and head tracking protocol in the platform.

App developers can use Android’s Spatializer APIs to detect device capabilities and multichannel audio. The Spatializer class includes APIs for querying whether the device supports audio spatialization, whether audio spatialization is enabled, and whether the audio track can be spatialized. If the audio can be spatialized, then a multichannel audio track can be sent. If not, then a stereo audio track should be sent.
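The decision described above can be sketched as plain logic. This is only a model: in a real app the three answers would come from the platform’s Spatializer class, and the parameter names here are hypothetical placeholders rather than actual API names.

```python
def choose_audio_track(spatialization_supported, spatialization_enabled, track_can_be_spatialized):
    """Pick which audio track to send, following the rule in the text.

    All three inputs are booleans that an app would obtain from the
    platform; here they are passed in directly so the logic can be tested.
    """
    if spatialization_supported and spatialization_enabled and track_can_be_spatialized:
        # The spatializer can consume a multichannel stream.
        return "multichannel"
    # Fall back to stereo whenever any check fails.
    return "stereo"
```

Falling back to stereo on any failed check keeps playback working on devices without a spatializer service.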

Media apps that have updated their ExoPlayer dependency to version 2.17+ can configure the platform for multichannel spatial audio. ExoPlayer enables spatialization behavior and configures the decoder to output a multichannel audio stream on Android 12L or later when possible.

Cinematic wallpapers

Android 13 adds new system APIs that Google will be using to generate “3D wallpapers” that “[move] when your phone moves.” Within the latest version of the WallpaperPicker app included in Android 13 DP2 on Pixel devices, there are strings that hint at a new “Effects” tab being added to the interface. This tab will let users apply cinematic effects to their wallpaper, including the 3D wallpaper effect.

Under the hood, this feature makes use of the new WallpaperEffects API. A new permission has been added to Android,

which must be held by the app implementing the system’s wallpaper effects generation service in order to generate wallpaper effects. This permission was added because the wallpaper service is trusted and thus can be activated without the explicit consent of the user.

The system’s wallpaper effects generation service is defined in the new configuration value config_defaultWallpaperEffectsGenerationService. On Pixel, this value is set to

This points to a component within the Android System Intelligence (ASI) app. However, there is no evidence of this component existing within current versions of the ASI app. It’s likely that only internal versions of the ASI app have this component. Since no service with this name exists on any of our test devices, the wallpaper effects generation service is disabled, so we are unable to test this feature at the moment.

However, we are able to test another aspect of this feature: wallpaper dimming. Android’s WallpaperService has added several methods related to a new wallpaper dimming feature. It checks if wallpaper dimming is enabled through the value of persist.debug.enable_wallpaper_dimming before dimming the wallpaper set by the user. This feature is not enabled yet, but there’s a CLI used for testing that lets us see how different wallpapers appear at different dimming values. It’s accessed through the ‘cmd wallpaper’ command as follows:

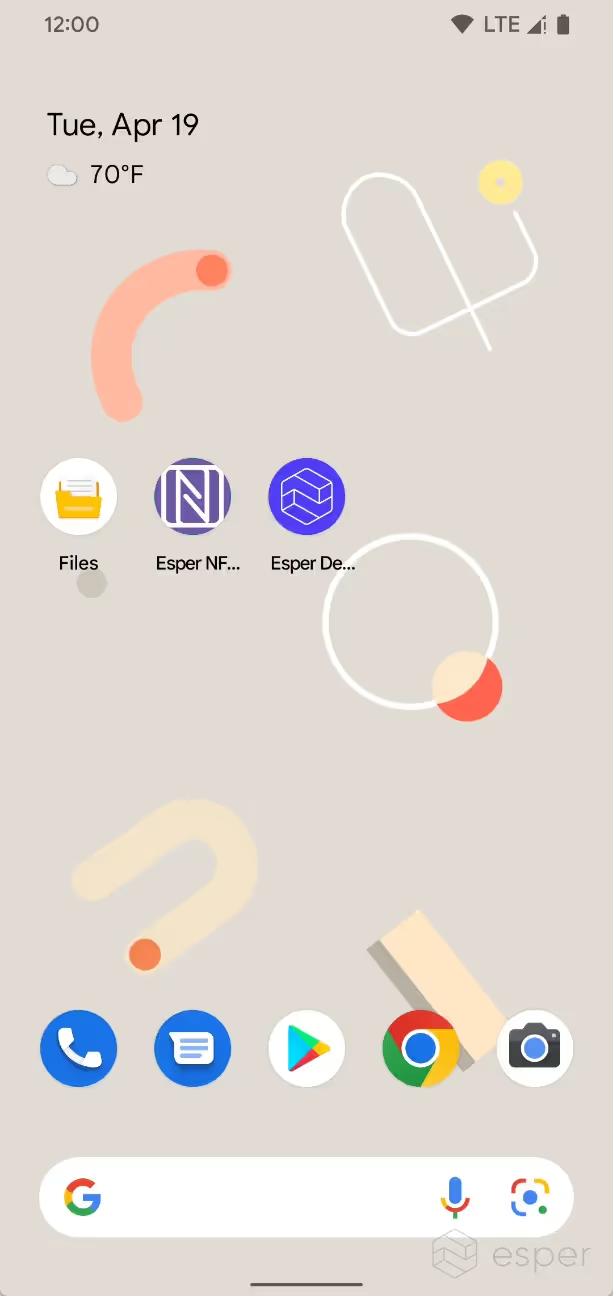

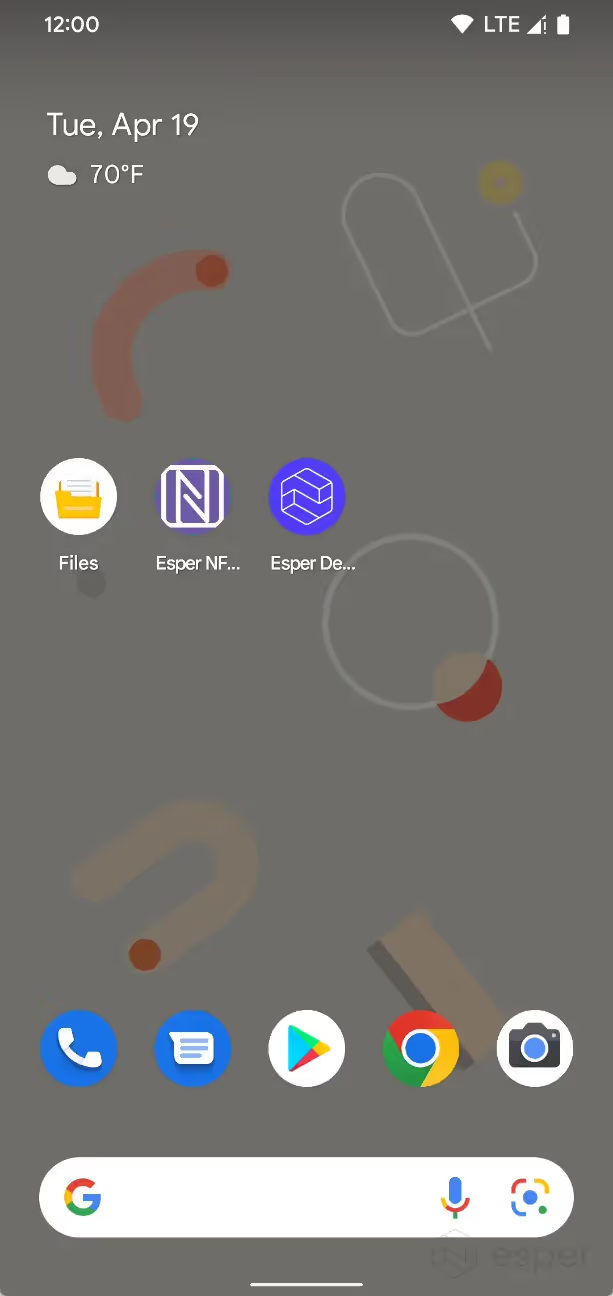

Left: 0% dim. Right: 50% dim.

Although Google’s service for implementing wallpaper effects is likely proprietary, the API seems to be open for any device maker to hook their own service into. The UI implementation in WallpaperPickerGoogle is also likely Google’s proprietary work, but other device makers could adapt the open source WallpaperPicker to add an Effects tab and a cinematic effects toggle as well.

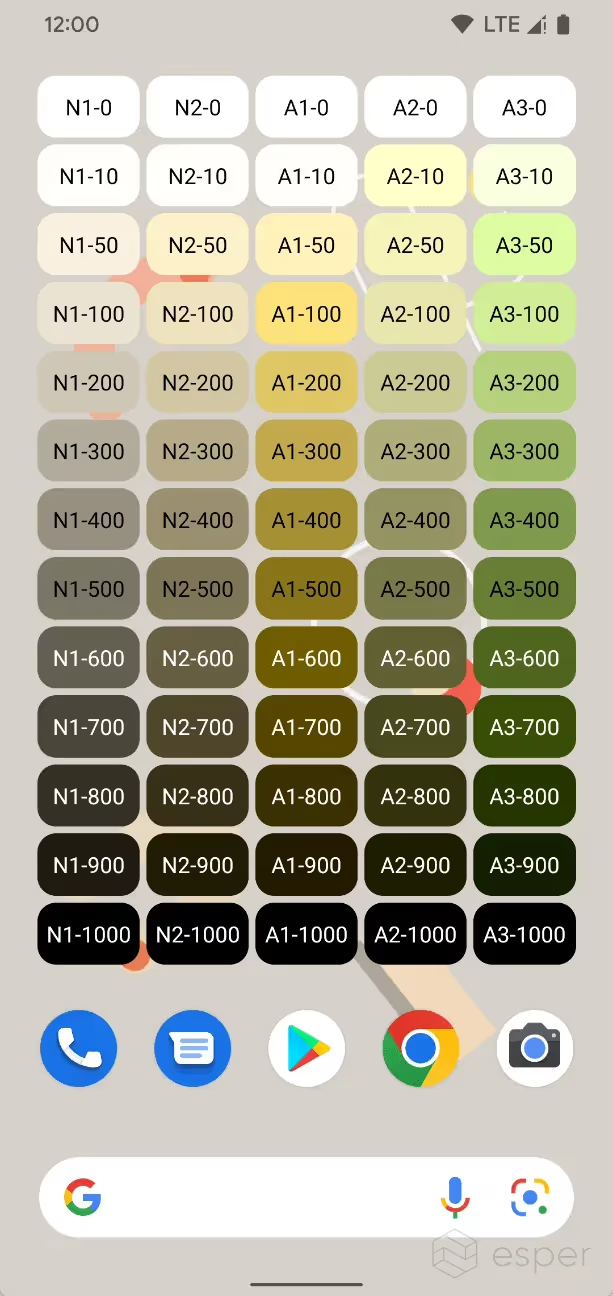

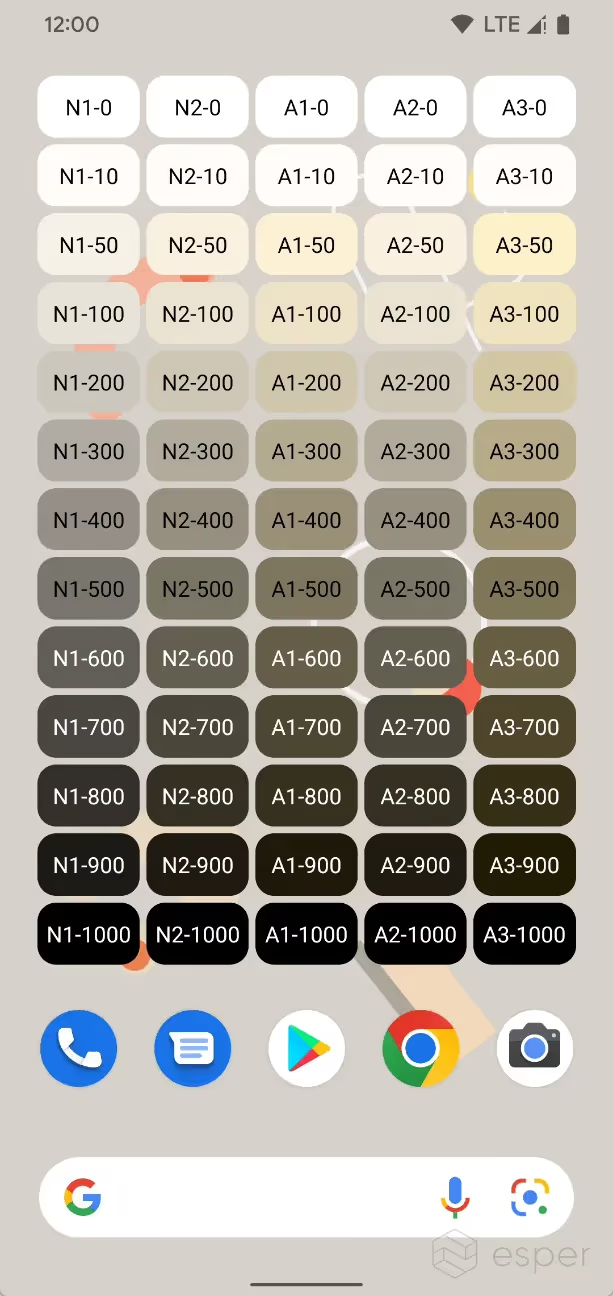

Material You dynamic color styles

Google introduced dynamic color, one of the key features of Google’s new Material You design language, in Android 12 on Pixel phones. Dynamic color support is set to arrive on more devices from other OEMs in the near future, according to Google, due in large part to new GMS requirements. Google’s dynamic color engine, codenamed monet, grabs a single source color from the user’s wallpaper and generates 5 tonal palettes that are each comprised of 13 tonal colors of various luminances. These 65 colors make up the R.color attributes that apps can use to dynamically adjust their themes.
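To make the arithmetic concrete, the sketch below enumerates the 65 color names as 5 palettes times 13 tones. The palette names (3 accent, 2 neutral) and the tone steps follow our understanding of the android.R.color naming scheme and should be treated as an assumption rather than something taken from this article.

```python
# Assumed palette names: three accent and two neutral palettes.
PALETTES = ["accent1", "accent2", "accent3", "neutral1", "neutral2"]

# Assumed tone steps: 13 values per palette.
TONES = [0, 10, 50, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]


def system_color_names():
    """Return every palette/tone combination as a resource-style name."""
    return [f"system_{palette}_{tone}" for palette in PALETTES for tone in TONES]
```

Calling system_color_names() yields 5 × 13 = 65 entries, one per dynamic color that apps can reference.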

Each of these colors has undefined hue and chroma values that can be generated at runtime by monet. This is what Google is seemingly taking advantage of in Android 13 for a new feature that will likely let users choose from a handful of additional Material You tonal palettes, called “styles.”

In Android 13, Google is working on new styles that adjust the hue and chroma values when generating the 5 Material You tonal palettes. These new styles are called TONAL_SPOT, VIBRANT, EXPRESSIVE, SPRITZ, RAINBOW, and FRUIT_SALAD. The TONAL_SPOT style will generate the default Material You tonal palettes as seen in Android 12 on Pixel. VIBRANT will generate a tonal palette with slightly varying hues and more colorful secondary and background colors. EXPRESSIVE will generate a palette with multiple prominent hues that are even more colorful. SPRITZ generates an almost grayscale, low color palette.

The specs of these new styles are defined in the new com.android.systemui.monet.Styles class. These new style options are hooked up to SystemUI’s ThemeOverlayController, so Fabricated Overlays containing the 3 accent and 2 neutral tonal palettes can be generated using these new specs. The WallpaperPicker/Theme Picker app interfaces with SystemUI’s monet by providing values to

in JSON format.

Users can run the following shell command to generate a tonal palette using these style keys:

where STYLE is one of TONAL_SPOT, VIBRANT, EXPRESSIVE, SPRITZ, RAINBOW, or FRUIT_SALAD.

Left: TONAL_SPOT. Middle: VIBRANT. Right: EXPRESSIVE.

Left: SPRITZ. Middle: RAINBOW. Right: FRUIT_SALAD.

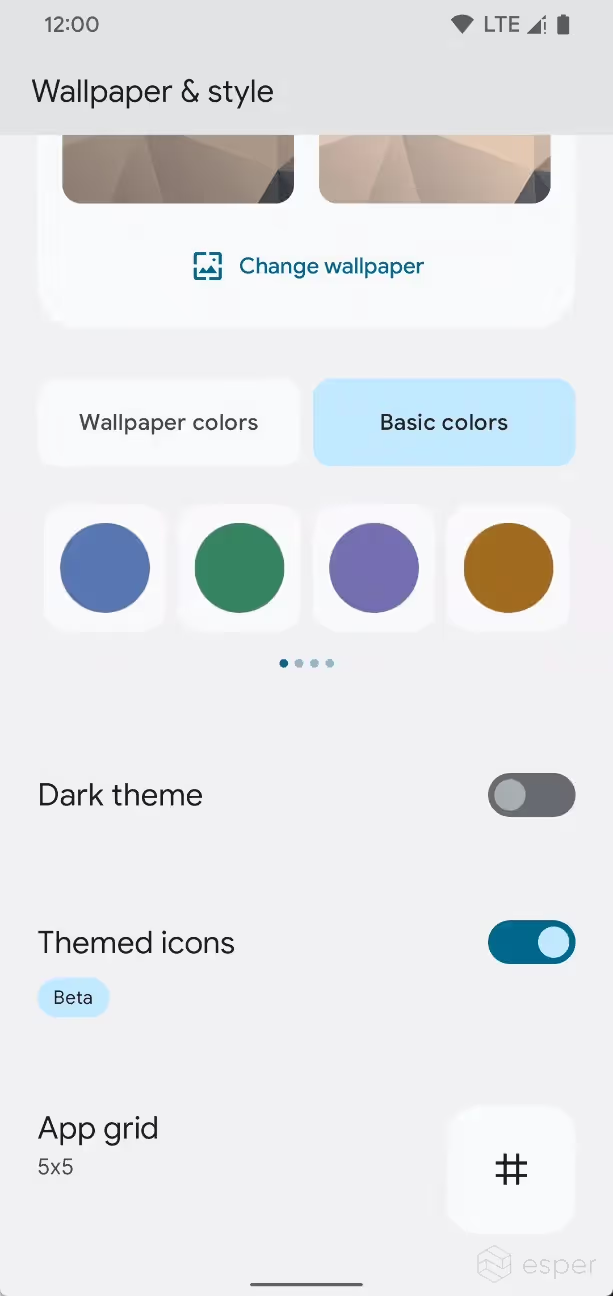

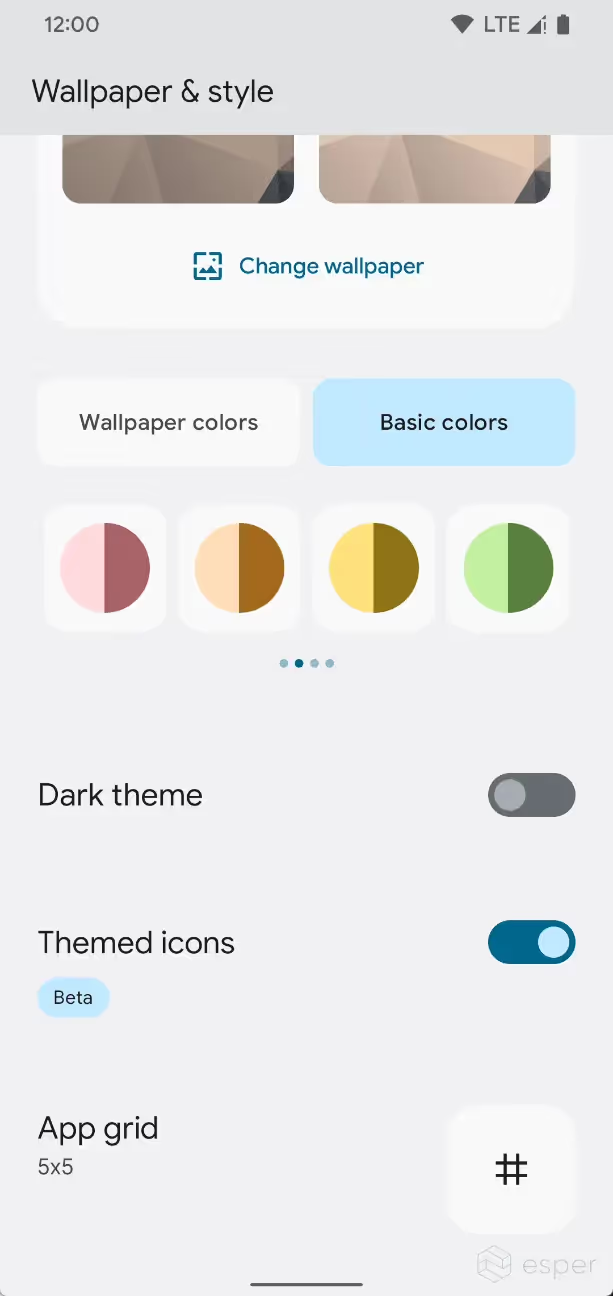

In Beta 1, Google is using these styles as strategies to generate a whole range of new theme options. In the “Wallpaper & style” app on Pixel devices, there are now up to 16 “wallpaper colors” and 16 “basic colors” to choose from.

These styles are employed as follows:

- Wallpaper colors

  - Options #1, 5, 9, and 13 are based on TONAL_SPOT

  - Options #2, 6, 10, and 14 are based on SPRITZ

  - Options #3, 7, 11, and 15 are based on VIBRANT

  - Options #4, 8, 12, and 16 are based on EXPRESSIVE

- Basic colors

  - Options #1-4 are based on TONAL_SPOT

  - Options #5-12 are based on RAINBOW

  - Options #13-16 are based on FRUIT_SALAD
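The option-to-style assignment listed above follows a simple pattern, which the sketch below encodes. The function names are hypothetical and the mapping reflects only what we observed in Beta 1, not any documented API.

```python
# Wallpaper colors cycle through four styles, repeating every four options.
WALLPAPER_CYCLE = ["TONAL_SPOT", "SPRITZ", "VIBRANT", "EXPRESSIVE"]


def _check_option(option):
    """Reject option numbers outside the 1-16 range shown in the picker."""
    if not 1 <= option <= 16:
        raise ValueError("option must be between 1 and 16")


def wallpaper_color_style(option):
    """Style behind a "wallpaper colors" option (1-based)."""
    _check_option(option)
    return WALLPAPER_CYCLE[(option - 1) % 4]


def basic_color_style(option):
    """Style behind a "basic colors" option (1-based)."""
    _check_option(option)
    if option <= 4:
        return "TONAL_SPOT"
    if option <= 12:
        return "RAINBOW"
    return "FRUIT_SALAD"
```

For example, wallpaper option 10 falls in the second slot of the cycle and basic option 13 falls in the last block of four.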

Running the following command will reveal the current mThemeStyle as well as the 5 tonal palette arrays:

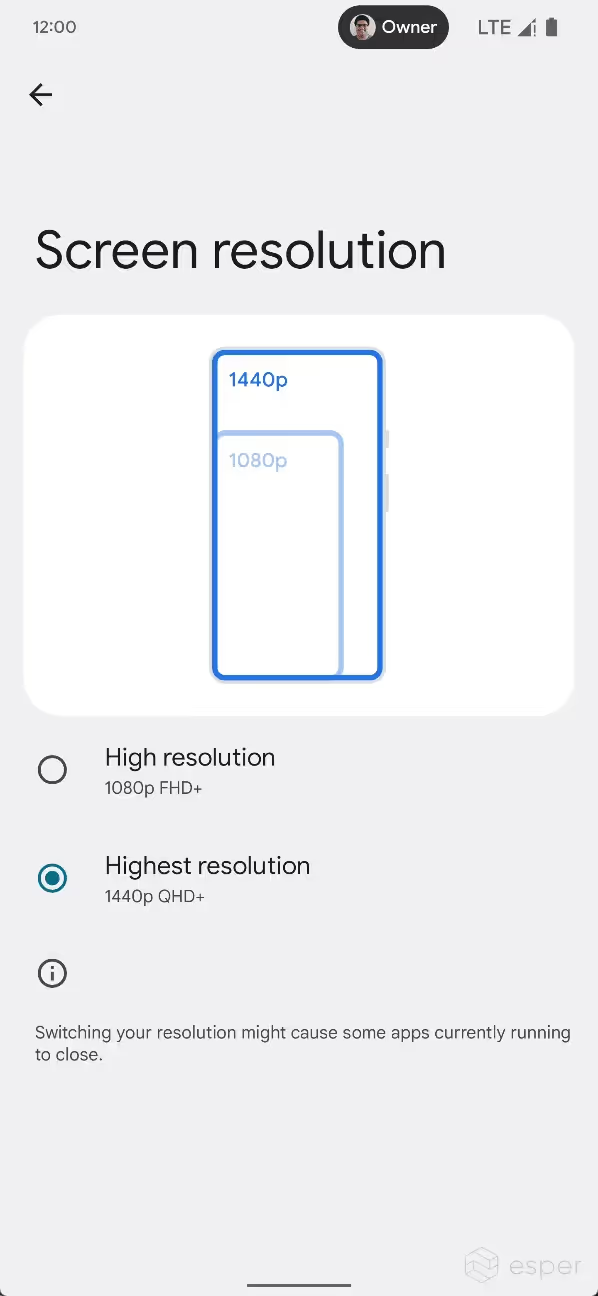

Resolution switching

Android 13 introduces support for switching the resolution in the Settings app. A new “Screen resolution” page will appear under Settings > “Display” on supported devices that lets the user choose between FHD+ (1080p) or QHD+ (1440p), the two most common screen resolutions seen on handhelds and tablets.

The availability of these options depends on the display modes exposed to Android. The logic is contained within the ScreenResolutionController class of Settings.

Under the hood, Google has tweaked Android’s display mode APIs so that the resolution and refresh rate can be persisted for each display in a multi-display device, such as foldables. In addition, new APIs can now be used to set the display mode (or only the resolution or refresh rate). These settings are persisted in the following values:

- Settings.Global.user_preferred_resolution_width

- Settings.Global.user_preferred_resolution_height

- Settings.Global.user_preferred_refresh_rate
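For anyone who wants to inspect these values on a test device, the sketch below builds the corresponding read commands without executing them. It assumes adb is on the PATH and relies on the standard ‘settings get global’ shell command; the helper name is hypothetical.

```python
# Keys taken from the list above.
DISPLAY_MODE_KEYS = [
    "user_preferred_resolution_width",
    "user_preferred_resolution_height",
    "user_preferred_refresh_rate",
]


def settings_get_commands():
    """Return one adb argument list per persisted display mode value."""
    return [["adb", "shell", "settings", "get", "global", key] for key in DISPLAY_MODE_KEYS]
```

Each returned list can be handed to subprocess.run on a machine with a connected device.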

Turn on dark mode at bedtime

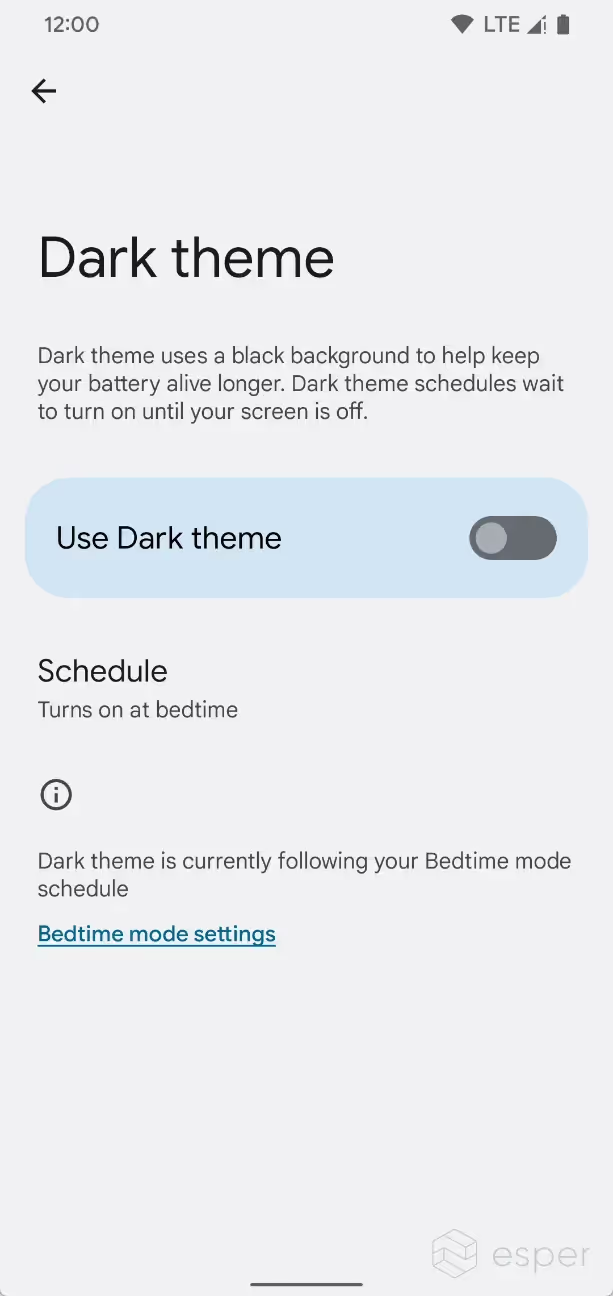

Android’s dark mode is adding a new trigger: bedtime. Users can activate dark mode at their configured bedtime schedule on supported devices. On GMS devices, the bedtime schedule is typically configured via Google’s Digital Wellbeing app.

Scheduling dark theme to turn on at bedtime in Android 13.

This feature was hidden from users in the earlier Android 13 preview builds but could be enabled by toggling the feature flag “settings_app_allow_dark_theme_activation_at_bedtime” in Developer Options. This feature flag could also be toggled by sending the following shell command:

As of Beta 2, the “turns on at bedtime” option is available to all users.

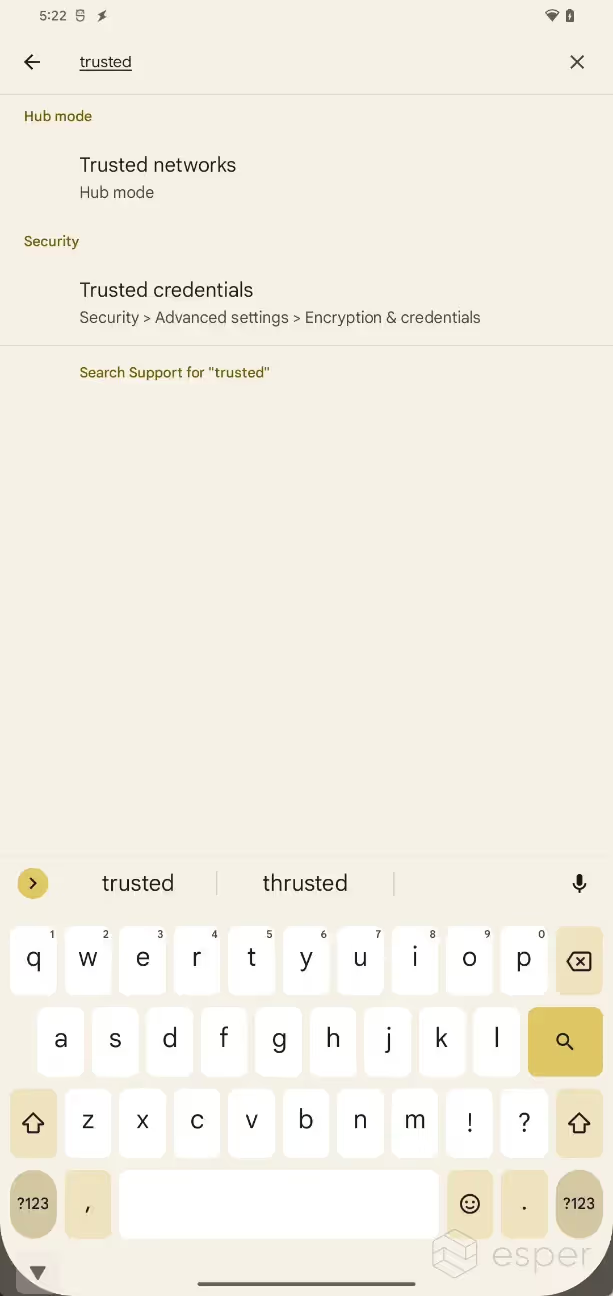

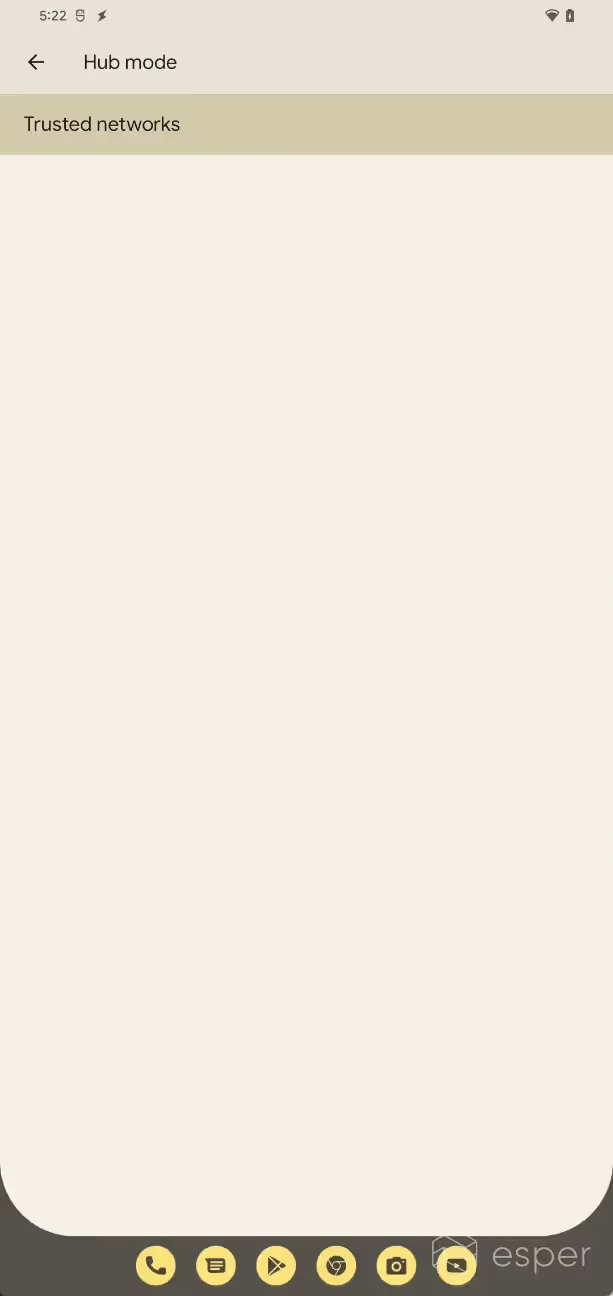

Hub mode

Google believes that tablets are the future of computing, so they’ve recently invested in a new tablet division at Android which has helped oversee some of the new features in Android 12L, the feature update for large screen devices. Some of the major changes in Android 12L focus on improving the overall experience of tablets, but in Android 13, Google is preparing to improve one particular use case.

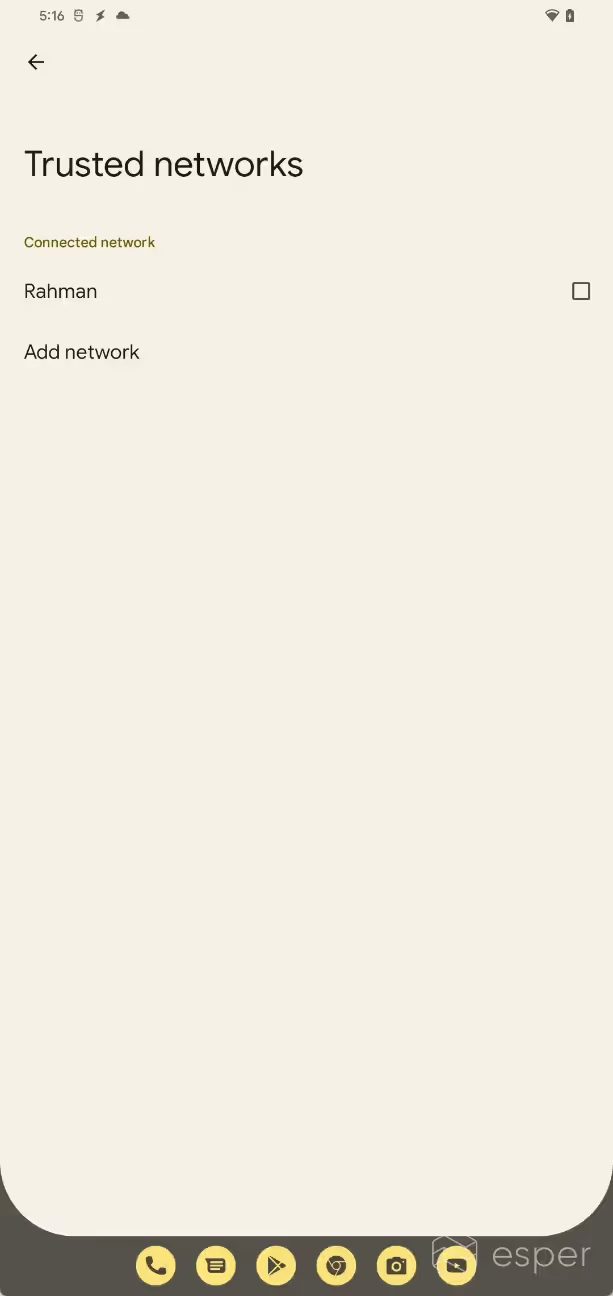

Android 13 Developer Preview 1 reveals early work on a new “hub mode” feature, referred to internally as “communal mode”, that will let users share apps between profiles on a common surface. Code reveals that users will be able to pick from a list of apps that support hub mode, though it isn’t clear what requirements an app needs to meet to support hub mode. Once selected, the apps will be accessible by multiple users on the common surface. The primary user can restrict which Wi-Fi APs the device has to be connected to in order for applications to be shared, though. These networks are considered “trusted networks”.

It isn’t yet entirely clear what form the common surface will take. Initially, we believed that the common surface would be the lock screen, which has seen some other multi-user improvements in Android 13. However, new code related to “dreams”, Android’s code-name for interactive screensavers, not only points towards a revamp of the old feature but also tie-ins with the new “hub mode”. Coupled with new dock-related code, both in Android 13 and in the kernel, this suggests that Google is planning something big for tablets that are intended to be fixed in place on a dock.

Since hub mode is still a work-in-progress, we are not able to demonstrate the feature. Enabling the feature first requires that the build declare support for the feature ‘android.software.communal_mode.’ Then, one needs to set SystemUI’s boolean flag ‘config_communalServiceEnabled’ to true. From there, however, there are several missing pieces, including the communalSourceComponent and communalSourceConnector packages as well as much of the code for the common surface. We also couldn’t find the interface for adding applications to the allowlist for communal mode, which is stored in Settings.Secure.communal_mode_packages.

However, we were at least able to access the screen for choosing “trusted networks”.

Screen saver revamp

Google introduced screen savers to Android back in Android 4.2 Jelly Bean, but since the feature’s introduction, it has received few major enhancements. As an aside, screen savers used to be called “daydreams” but were renamed in Android 7.0 Nougat to avoid confusion with Daydream VR, the now-defunct phone-based VR platform. Google still refers to screen savers as “dreams” internally, though, which is important for us to note. That’s because Android 13 introduces a lot of new dream-related code in SystemUI, suggesting that significant changes are on the way.

New classes in Android 13 reveal work on a dream overlay service that is intended to allow “complications” to run on top of screen savers. In Wear OS land, a complication is a service that provides data to be overlaid on a watch face. It appears that dreams will borrow this concept, with some of the available complications including air quality, cast info, date, time, and weather.

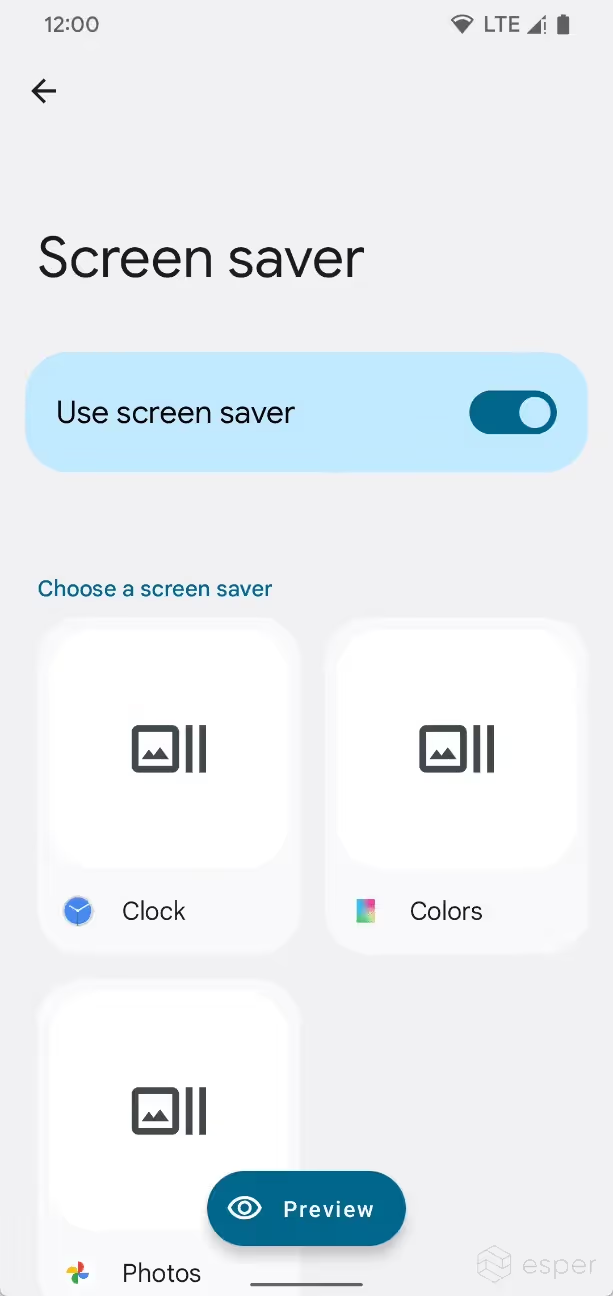

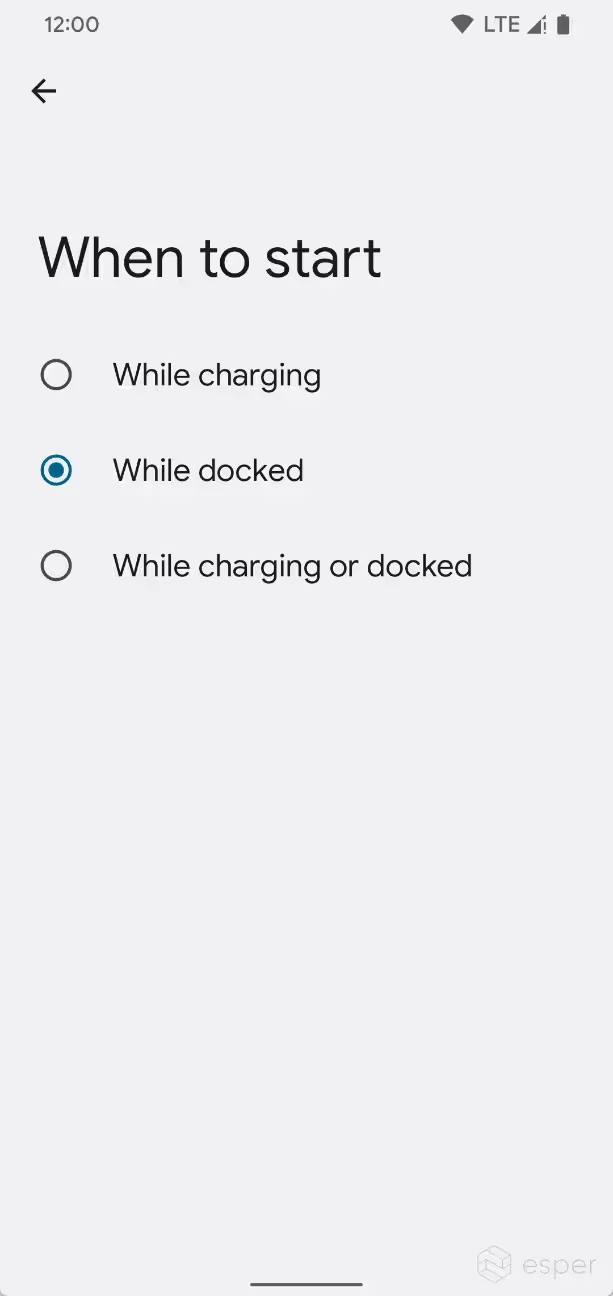

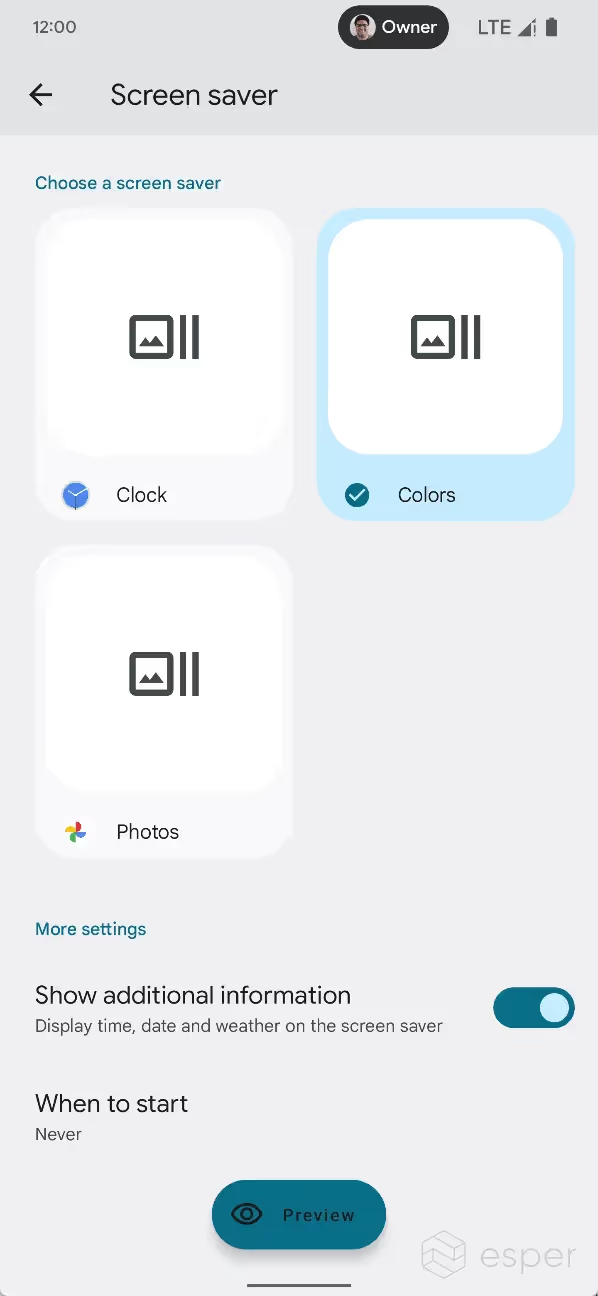

In Developer Preview 2, the screen saver settings page was revamped to show previews. The available screen savers are shown in a grid with a customize button at the center of each item. A preview button at the bottom lets users see what the screen saver is like. Meanwhile, Beta 1 introduces a toggle to turn off the feature, replacing the “never” option from “when to start.”

In addition, Google appears to be adding a page to the setup wizard so users can select a screen saver when setting up their device. No other changes to the settings were implemented, but it’s likely that Google is planning other enhancements to the screen saver experience.

We’ll have to wait for the company to release more preview builds to learn more. Given the evidence we’ve seen, however, we’re confident in saying that the company is preparing major enhancements to screen savers, though we cannot say whether these changes will land in time for the final Android 13 release.

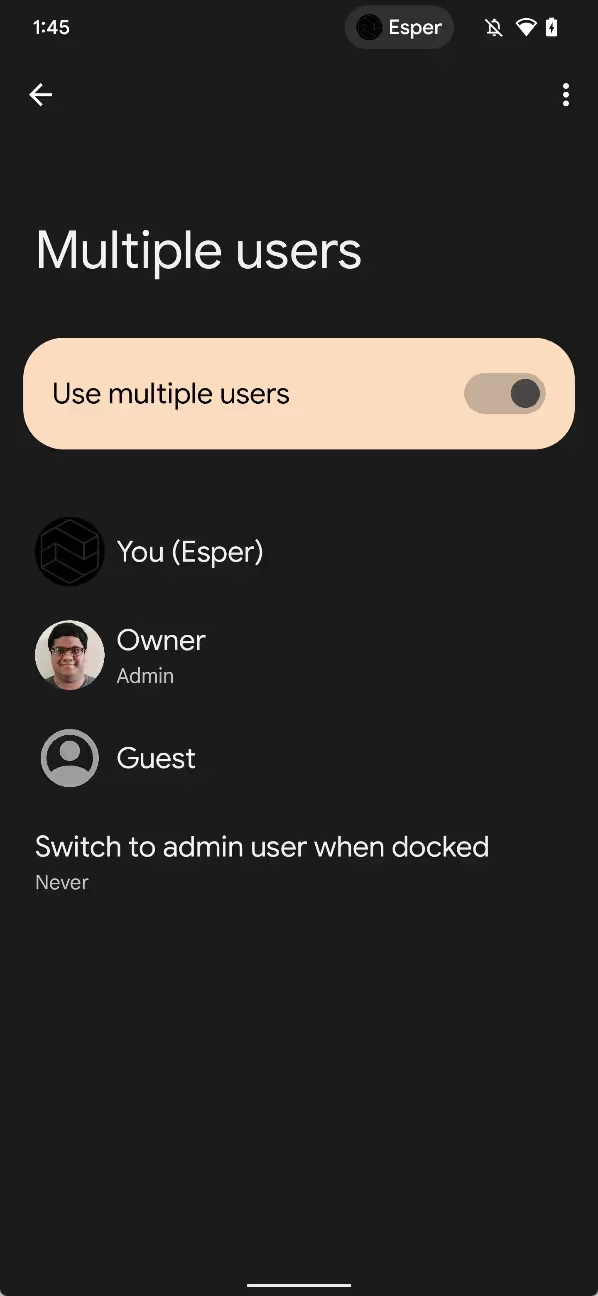

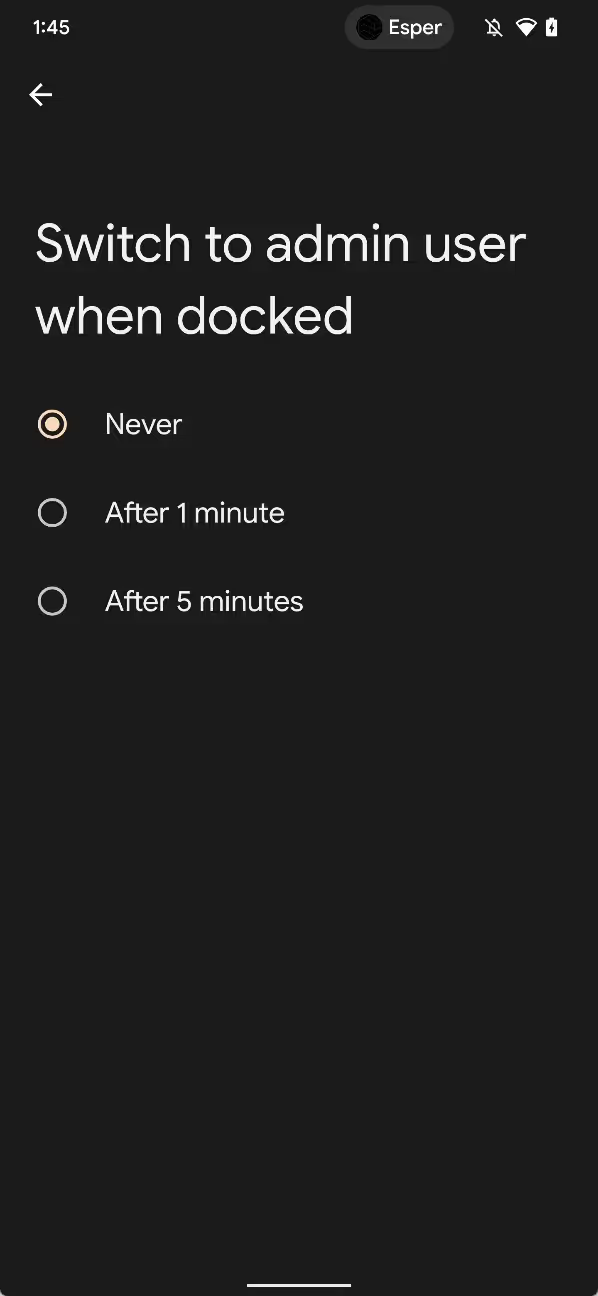

Switch to admin user when docked

The first Developer Preview of Android 13 revealed a new “hub mode” feature in development that will let users share apps between profiles. The second Developer Preview reveals a new setting related to this feature that will seemingly let secondary profiles automatically switch to the primary user after docking the device. Switching to the primary user presumably allows the device to then enter “hub mode”.

The new setting is called “switch to admin user when docked.” It’s available in Android 13’s multi-user settings, but it isn’t shown to users unless the framework config value ‘config_enableTimeoutToUserZeroWhenDocked’ is set to ‘true’. The setting allows users to choose how long Android should wait before automatically switching to the primary user after the device is docked. Timeout values of “never”, “after 1 minute”, and “after 5 minutes” are currently supported.

NFC & NFC-F payment support for work profiles

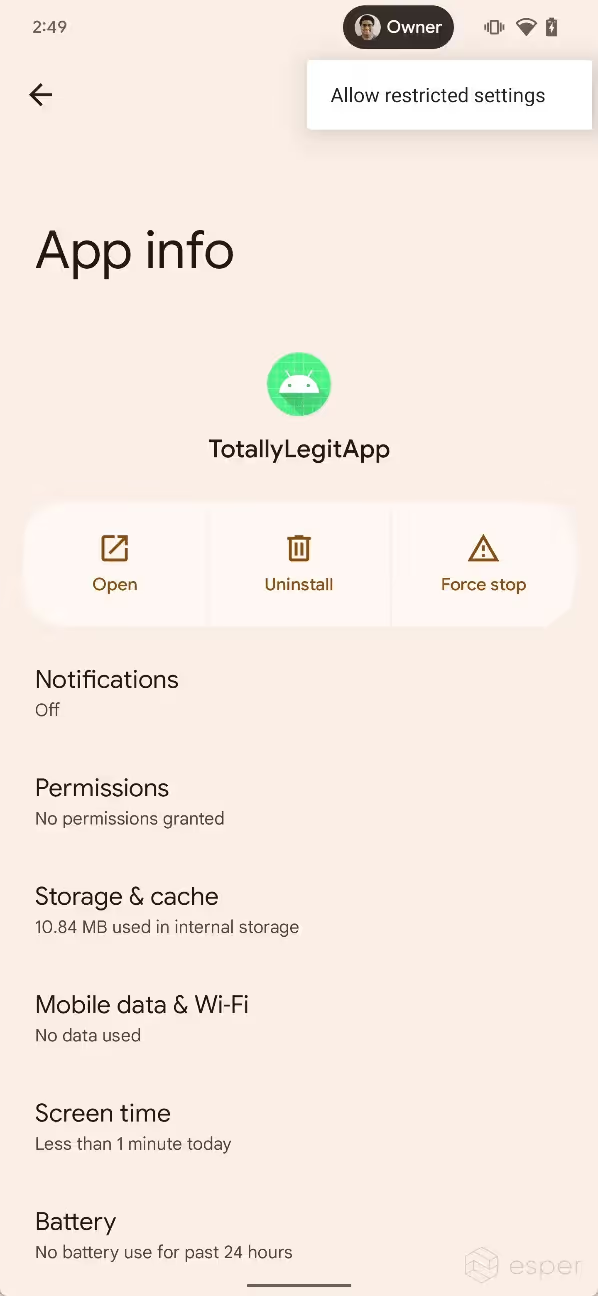

Android 13 introduces NFC payment support for work profiles. Previously, only the primary user could perform contactless payments and access Settings > Connection preferences > NFC > Contactless payments. Work profiles can now also use NFC-F (FeliCa) on supported devices.

WiFi Trust on First Use

Android 13 adds support for Trust On First Use (TOFU). When it is not possible to configure the Root CA certificate for a server, TOFU enables installing the Root CA certificate received from the server during initial connection to a new network. The user must approve installing the Root CA certificate. This simplifies configuring TLS-based EAP networks. TOFU can be enabled when configuring a new network in Settings > Network & Internet > Internet > Add network > Advanced options > WPA/WPA-2/WPA-3-Enterprise > CA certificate > Trust on First Use. Enterprise apps can configure WiFi to enable or disable TOFU through the enableTrustOnFirstUse API.

Game dashboard

Google has long recognized the mobile gaming industry for the money-making goliath that it is, but it’s only recently that the company decided to develop new Android features that cater to mobile gaming. At the 2021 Google for Games Developer Summit, Google unveiled the Game Dashboard, a collection of tools and data to help gamers track their progress, share their gameplay, and tune their device’s performance.

Specifically, Google’s Game Dashboard integrates achievements and leaderboards data from Play Games, provides a shortcut to stream gameplay directly to YouTube, and has toggles to show a screenshot button, a screen recorder button, a Do Not Disturb button, and a FPS counter in the floating overlay that appears in-game. Lastly, there is also a setting to set the game mode, essentially a performance profile that optimizes the game’s settings to prolong battery life or maximize frame rate. While Android applies some interventions of its own, such as WindowManager backbuffer resizing to reduce GPU load, game developers largely define what happens when a particular game mode is set, including whether or not to support that particular mode, through the new Game Mode API.

The problem with Game Dashboard’s original implementation is that it is exclusive to the Pixel 6 series on Android 12. This means there are few users (relative to the entire Android user base) who can currently use it, which means game developers aren’t in a rush to support the Game Mode API. To solve this, Google is looking to expand the availability of Game Dashboard by decoupling it from SystemUIGoogle, the Pixel-exclusive version of AOSP’s SystemUI, and integrating it into Google Play Services. As Google Play Services is available on all GMS Android devices, Game Dashboard can be rolled out to far more devices.

Although Game Dashboard was introduced with Android 12, some of the APIs it relies on were marked as internal and could only be accessed by apps signed with the platform certificate. Since Google Play Services is signed by Google and not by OEMs, that means it would be unable to access the necessary internal APIs on OEM builds of Android 12. Thus, Game Dashboard in Google Play Services requires Android 13, which makes the necessary APIs available to system apps.

For example, SurfaceControlFpsListener, the API that’s used to get the FPS count of a task, is a hidden API in Android 12. In Android 13, this API has been raised to be a system API, which lets system apps access it as well as platform apps. This API specifically is guarded by a new permission called ACCESS_FPS_COUNTER, which has a protection level of “signature|privileged”. Hence, it can be granted to Google Play Services, which is typically bundled as a privileged system app.

In addition to ACCESS_FPS_COUNTER, Android 13 also introduces the MANAGE_GAME_ACTIVITY permission, which guards the new GameSession APIs used by the provider of a GameService. MANAGE_GAME_ACTIVITY also has a protection level of “signature|privileged”, allowing privileged system apps to hold it.

Lastly, the existing MANAGE_GAME_MODE permission has been changed from a “signature” permission to a “signature|privileged” permission. GameManager’s setGameMode API checks this permission before letting apps set the game mode.
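The effect of moving a permission from “signature” to “signature|privileged” can be illustrated with a deliberately simplified model. The sketch below is not how Android evaluates permissions internally; it only captures the rule described in this section, and the function name is hypothetical.

```python
def can_be_granted(protection_level, signed_with_platform_cert, is_privileged_app):
    """Simplified check of whether an app could hold a permission.

    protection_level is a string such as "signature" or
    "signature|privileged", as quoted in the text above.
    """
    flags = set(protection_level.split("|"))
    if "signature" in flags and signed_with_platform_cert:
        return True
    if "privileged" in flags and is_privileged_app:
        return True
    return False
```

Under this model, a privileged app that is not signed with the platform certificate (the situation described for Google Play Services on OEM builds) fails the “signature” check but passes “signature|privileged”.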

Apart from these changes making it possible for privileged system apps to implement a game dashboard, Android 13 also enables game dashboard support on large screen devices like tablets. In Android 12L, the floating game overlay does not appear when the taskbar is visible. This is not the case in Android 13, which properly reports the taskbar state and appropriately registers the game dashboard listeners.

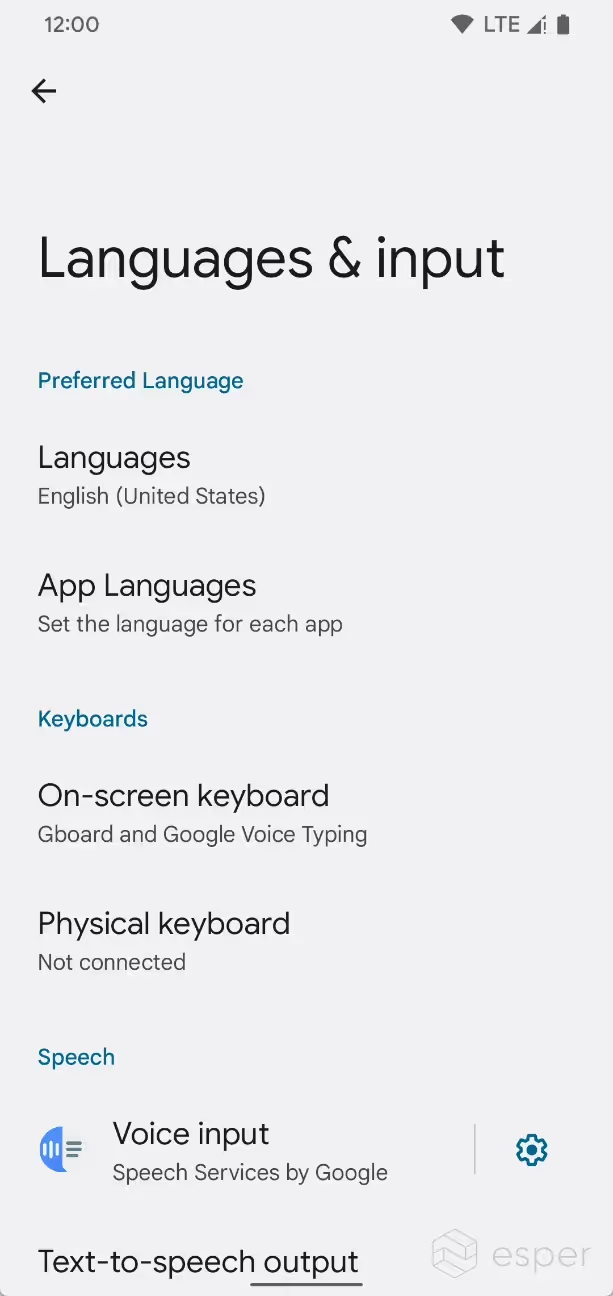

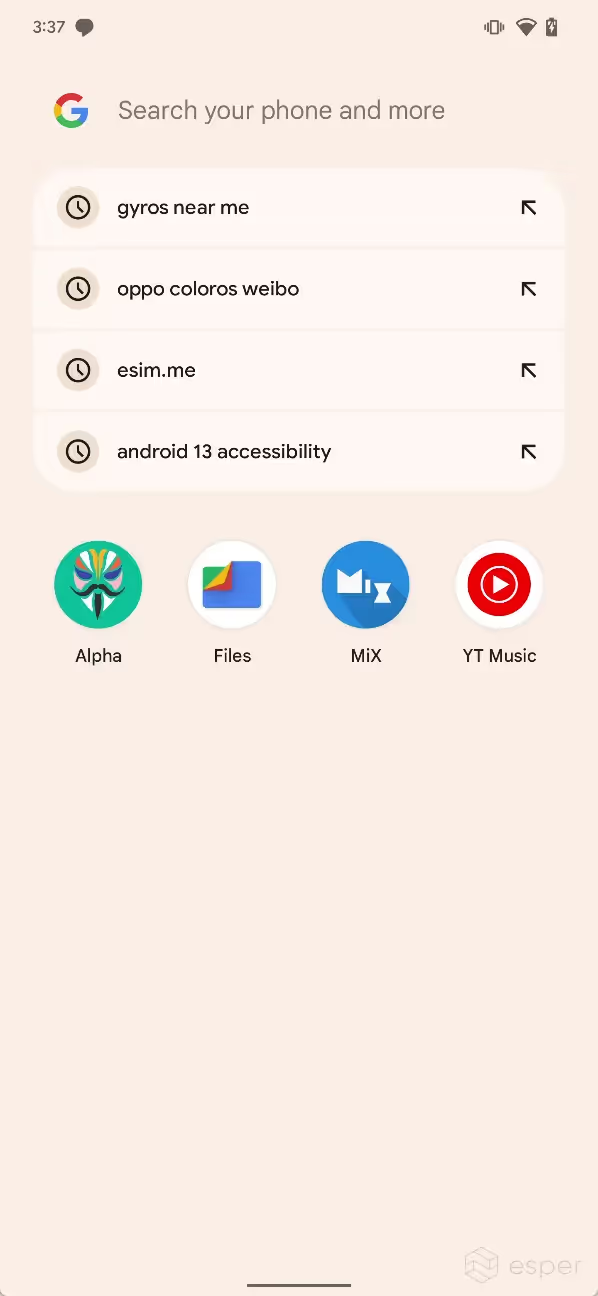

Per-app language preferences

In the Settings app under the System > Languages & input > Languages submenu, users can choose their preferred language. However, this language is applied system-wide, which may not be what multilingual users necessarily prefer. Some applications offer their own language selection feature, but not every app offers this. In order to reduce boilerplate code and improve compatibility when setting the app’s runtime language, Android 13 is introducing a new platform API called LocaleManager which can get or set the user’s preferred language option.

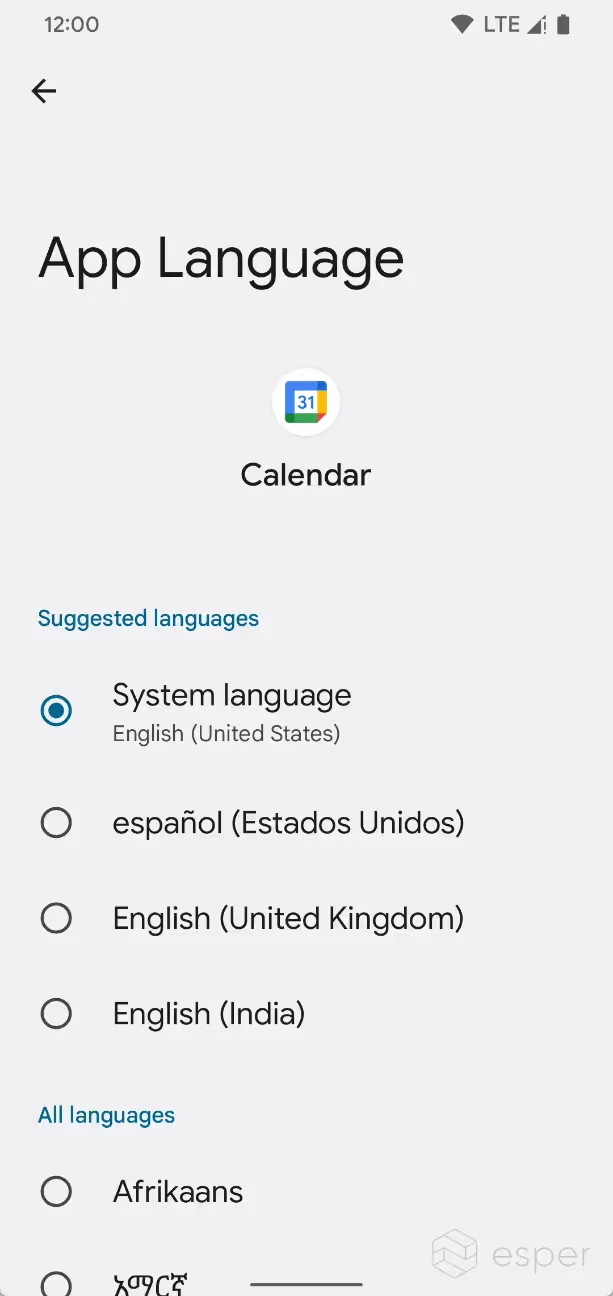

Users can access the new per-app language preferences in Android 13 by going to Settings > System > Languages & input > App Languages. Here, the user can set their preferred language for each app, provided those apps include strings for multiple languages. The app’s language can also be changed by going to Settings > Apps > All apps > {app} > Language.

Demonstrating per-app language preferences in Android 13

In order to help app developers test the per-app language feature, the first few Android 13 preview builds listed per-app language preferences for all apps by default. However, the list of languages that’s shown to the user may not match the list of languages that an app actually supports. Developers must list the languages their apps actually support in the locales_config.xml resource file and point to it in the manifest with the new android:localeConfig attribute. Apps that do not provide a locales_config.xml resource file will not be shown in the per-app language preferences page. The system can be forced to show all apps in the per-app language preferences page by disabling the “settings_app_locale_opt_in_enabled” feature flag in developer options.
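As a sketch of what such a resource file might contain, the helper below generates the XML from a list of language tags. The element and attribute names (locale-config, locale, android:name) reflect our understanding of the format and should be checked against the developer documentation; the function name and the sample tags are hypothetical.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"


def build_locale_config(language_tags):
    """Return locales_config.xml content listing the given language tags."""
    # Make the serializer emit the conventional "android:" prefix.
    ET.register_namespace("android", ANDROID_NS)
    root = ET.Element("locale-config")
    for tag in language_tags:
        ET.SubElement(root, "locale", {f"{{{ANDROID_NS}}}name": tag})
    return ET.tostring(root, encoding="unicode")
```

Calling build_locale_config(["en-US", "fr"]) produces a single root element with one child per supported language, which would be saved under the app’s XML resources and referenced from the manifest.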

Media Tap To Transfer

Android 13 ships with support for a “Media Tap To Transfer” feature. The feature is intended to let a “sender” device (like a smartphone) transfer media to a “receiver” device (like a tablet). The actual media transfer is handled by an external client, such as Google Play Services, which tells SystemUI about the status of the transfer. SystemUI then displays the status on both devices through a chip on top of the status bar.

Although the feature has “tap” in its name, it’s actually architected to be agnostic to any particular communication protocol. It’s up to the client to determine how the transfer is initiated, whether that be via NFC, Bluetooth, ultra-wideband, or a proprietary protocol.

Android 13 has a few new shell commands to prototype what the status bar chips look like, including:

The media tap to transfer chip is shown at the top of the screen after issuing the correct command.

System Photo Picker

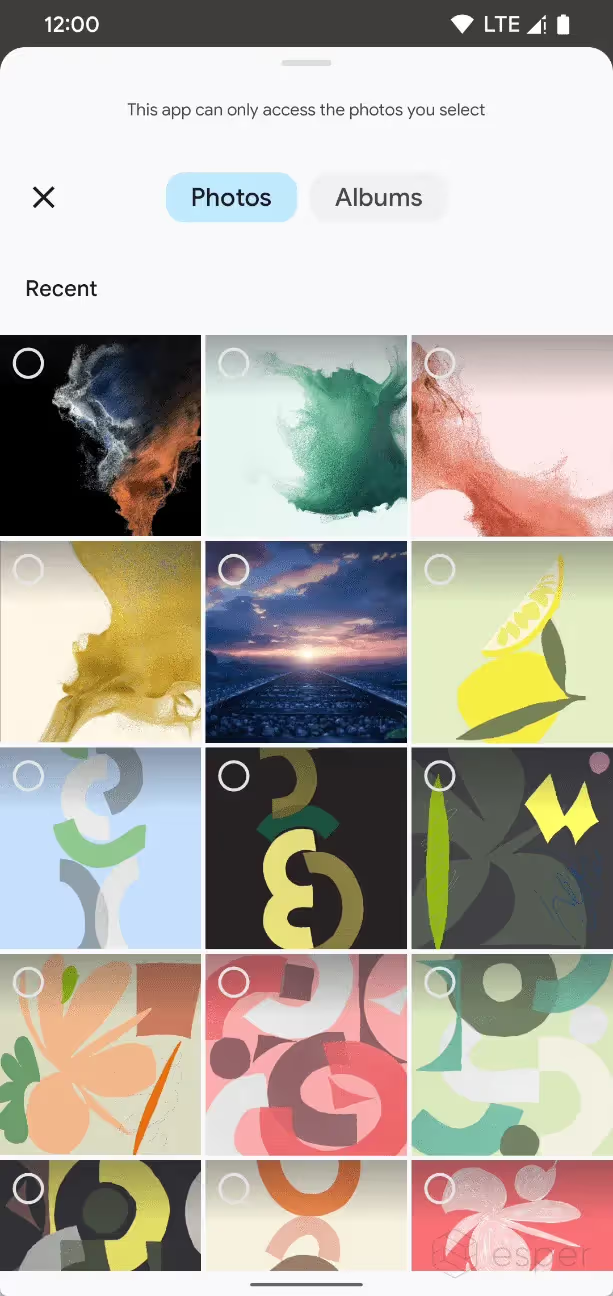

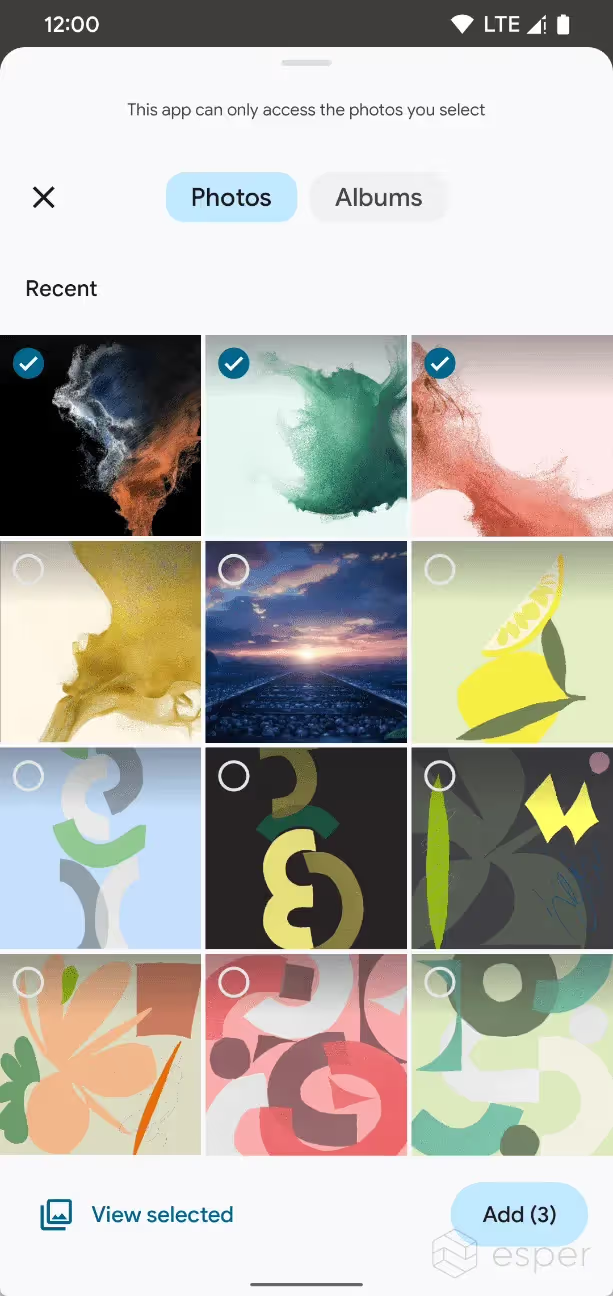

To prevent apps with the broad READ_EXTERNAL_STORAGE permission from accessing sensitive user files, Google introduced Scoped Storage in Android 10. Scoped Storage narrows storage access permissions to encourage apps to only request access to the specific file, types of files, directory, or directories they need, depending on their use case. Apps can use the media store API to access media files stored within well-defined collections, but they must hold the READ_EXTERNAL_STORAGE permission (pre-Android 13) or one of READ_MEDIA_AUDIO, READ_MEDIA_VIDEO, or READ_MEDIA_IMAGES (Android 13+) in order to access media files owned by other apps. Alternatively, they can use the Storage Access Framework (SAF) to load the system document picker to let the user pick which files they want to share with that app, which doesn’t require any permissions.
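The permission split described above can be summarized in a small lookup. This sketch simplifies the real rules (for instance, it ignores the app’s target SDK version) and uses hypothetical names for the function and the media kinds.

```python
ANDROID_13_API_LEVEL = 33

# Granular media permissions introduced with Android 13.
GRANULAR_PERMISSIONS = {
    "audio": "READ_MEDIA_AUDIO",
    "video": "READ_MEDIA_VIDEO",
    "image": "READ_MEDIA_IMAGES",
}


def required_media_permissions(device_api_level, media_kinds):
    """Return the permissions needed to read other apps' media files."""
    if device_api_level < ANDROID_13_API_LEVEL:
        # Before Android 13, one broad permission covers all media types.
        return ["READ_EXTERNAL_STORAGE"]
    # On Android 13+, request only the types the app actually uses.
    return sorted({GRANULAR_PERMISSIONS[kind] for kind in media_kinds})
```

An app that only shows images and videos would therefore request two granular permissions on Android 13 instead of the single broad one.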

Android’s system document picker app — simply called “Files” — provides a barebones file picking experience. Android 13, however, is introducing a new system photo picker that extends the concept behind the Files app with a new experience for picking photos and videos. The new system photo picker will help protect photo and video privacy by making it easier for users to pick the specific photos and videos to share with an app. Like the Files app, the new system photo picker can share photos and videos stored locally or on cloud storage, though apps have to add support for acting as a cloud media provider. Google Photos, for example, will appear as a cloud media provider in a future update.

Apps can use the new photo picker APIs in Android 13 to prompt the user to pick which photos or videos to share with the app, without that app needing permission to view all media files.

The new photo picker experience is already available in Android 13 builds but will also gradually roll out to Android devices running Android 11 or higher (excluding Android Go Edition) through an update to the MediaProvider module, which contains the Media Storage APK with the system photo picker activity. According to Google, this feature is available on Android 11+ GMS devices with the Google Play system update for May.

The feature can also be manually enabled through the following shell commands on devices with a recent MediaProvider version:

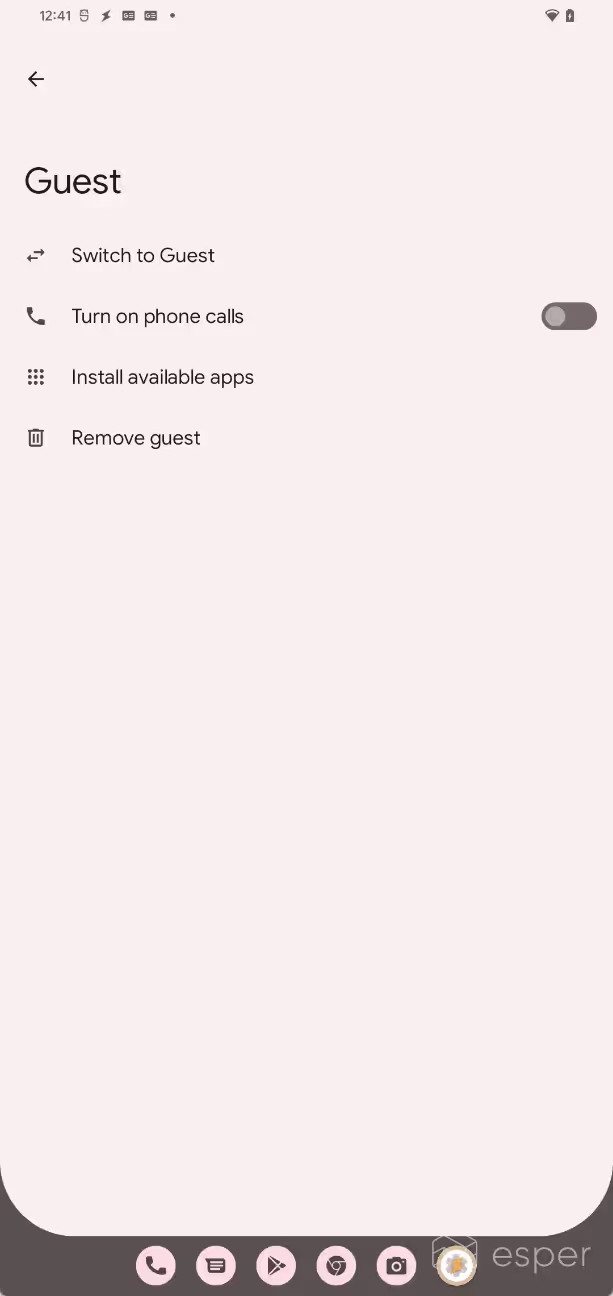

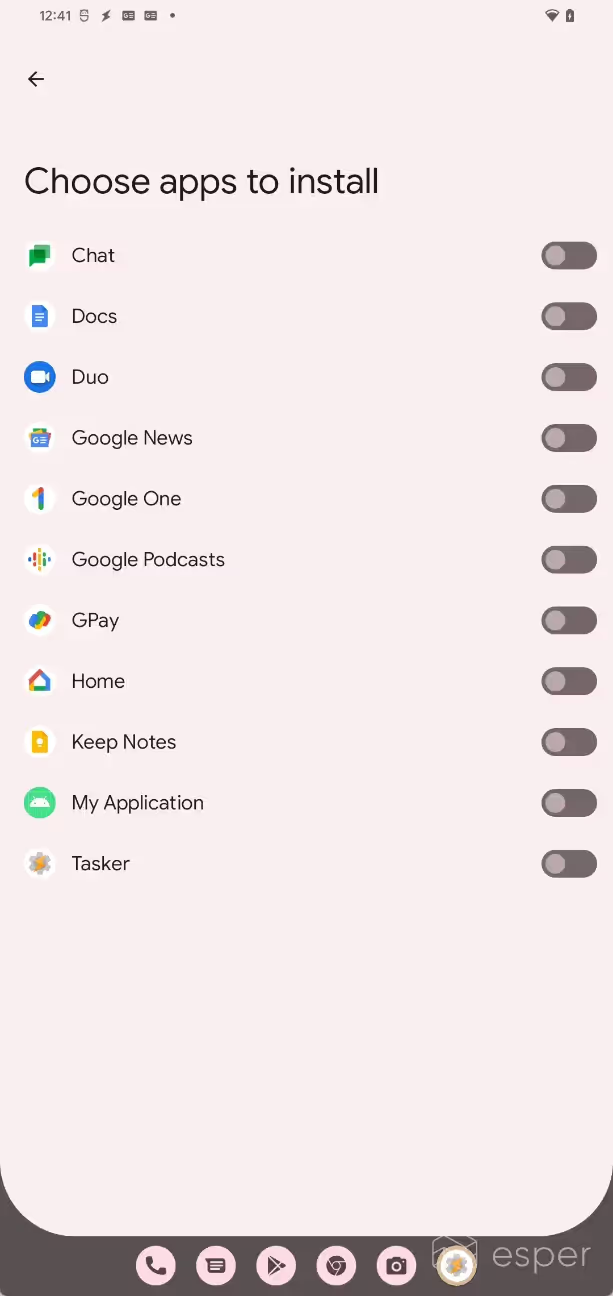

It’s easier to install apps to guest profiles

When creating a guest user in Android 13, the owner can choose which apps to install to the guest profile. No data is shared between the owner and guest profiles, however, which means that the guest profile will still need to sign in to those apps if need be.

This feature was hidden from users starting in Developer Preview 2.

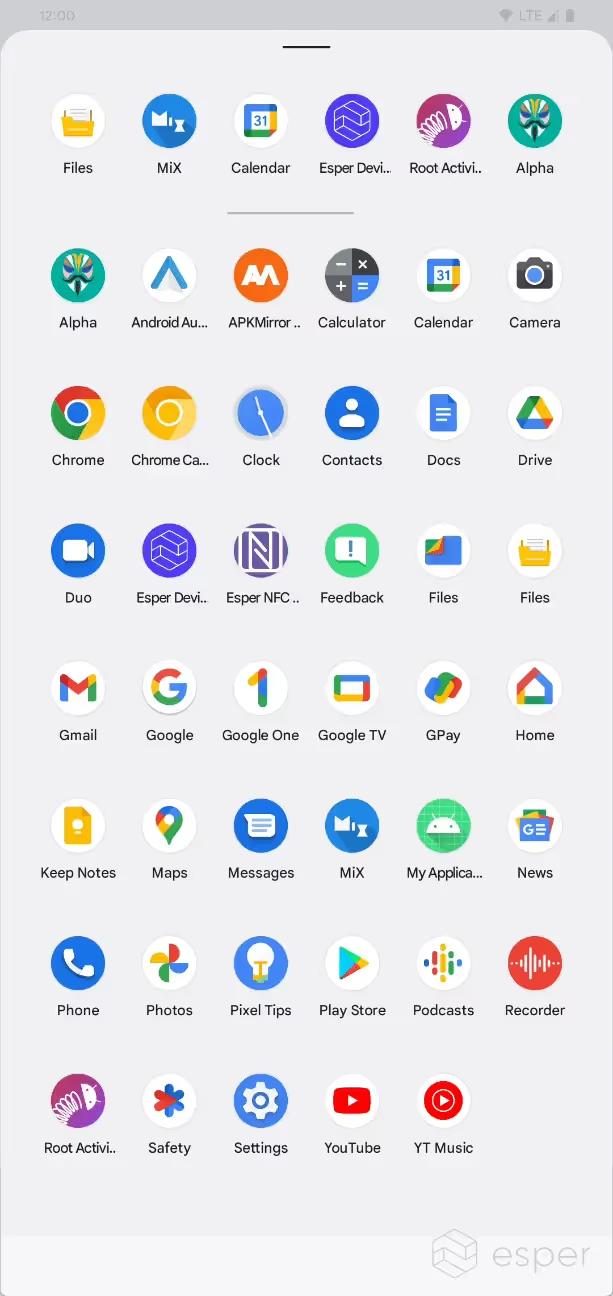

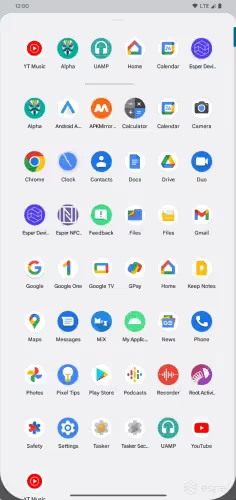

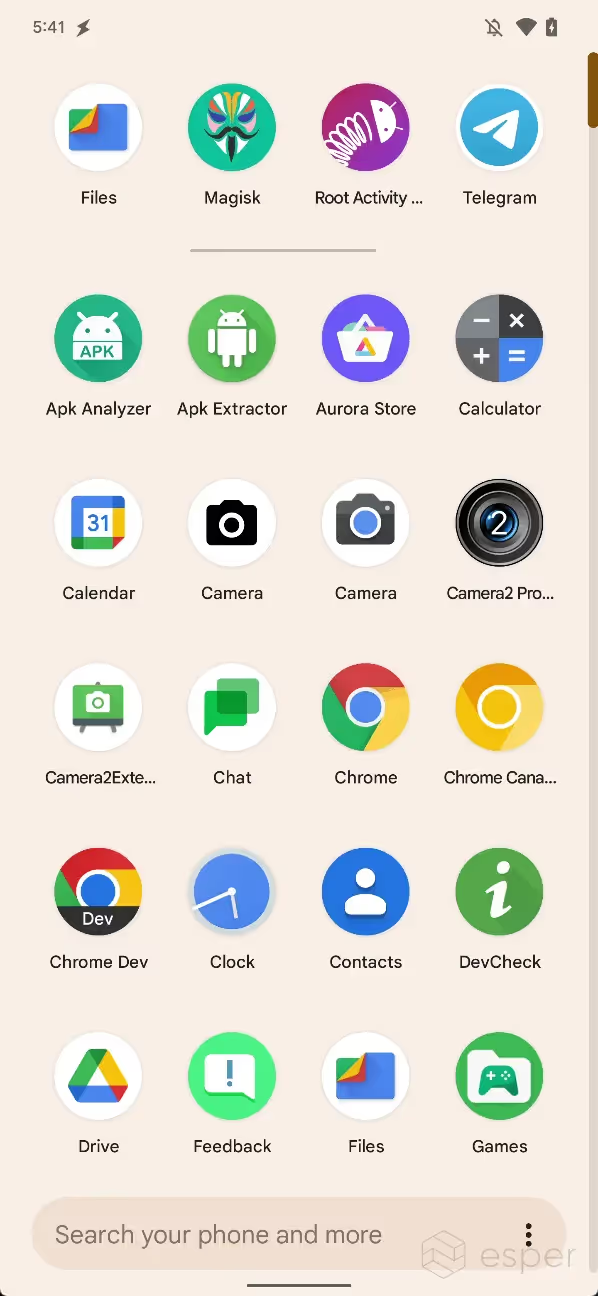

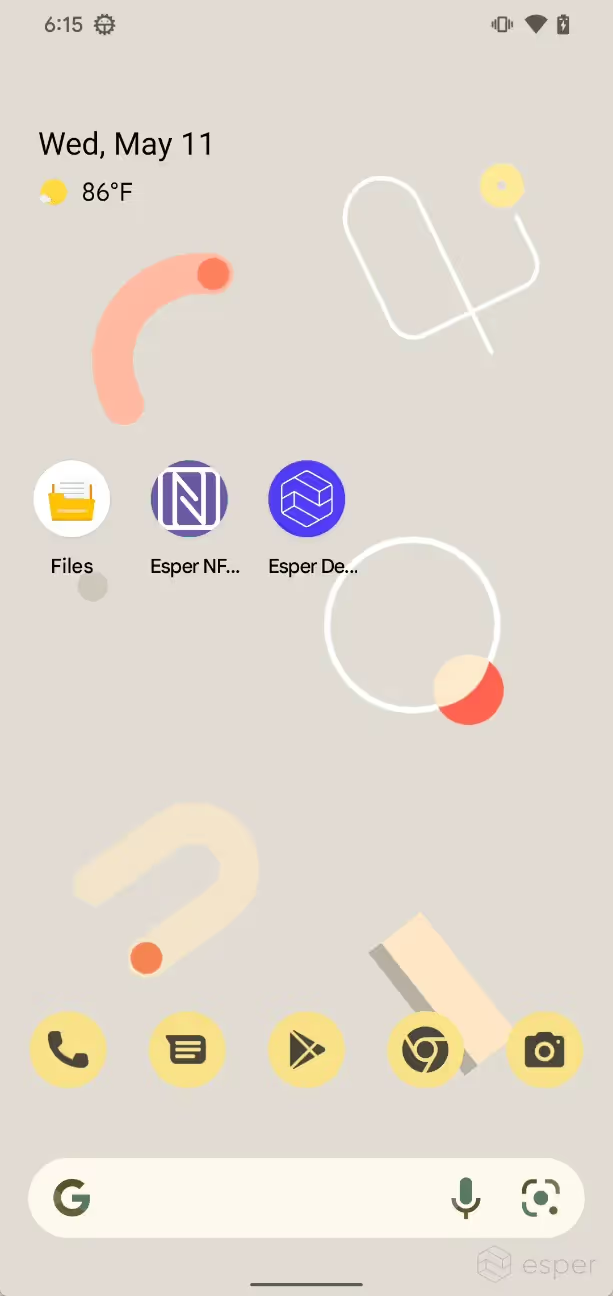

App drawer in the taskbar

The taskbar that Google introduced for large screen devices in Android 12L could only show up to 6 apps on the dock. In Android 13, an app drawer button has been added to the taskbar that lets users see and launch their installed apps.

This feature is controlled by the Launcher3 feature flag ENABLE_ALL_APPS_IN_TASKBAR and is enabled by default on large screen devices.

The taskbar on large screen devices now has an app drawer in Android 13.

Clipboard editor overlay

In Android 11, Google tweaked the screenshot experience by adding an overlay that sits in the bottom left corner of the screen. This overlay appears after taking a screenshot, and it contains a thumbnail previewing the screenshot, a share button, and an edit button to open the Markup activity.

In Android 13, Google has expanded this concept to clipboard content. Now, whenever the user copies text or images, a clipboard overlay will appear in the bottom left corner. This overlay contains a preview of the text or image that has been copied as well as a share button that, when tapped, opens the system share sheet. Tapping the image or text preview opens the Markup activity (for images) or a lightweight text editing activity (for text). If the text that’s been copied contains actionable information such as an address, phone number, or URL, then an additional chip may be shown to send the appropriate intent.

Android 13’s clipboard overlay in action.

Developers can optionally mark clipboard content as sensitive, preventing it from appearing in the preview. This is done by adding the EXTRA_IS_SENSITIVE flag to the ClipData’s ClipDescription before calling ClipboardManager#setPrimaryClip().

On devices with GMS, the clipboard overlay may show an additional button to initiate Nearby Share. This feature enables quickly sharing text or an image file that has been copied to the clipboard. This feature was announced at Google I/O 2022 but has not yet rolled out to users.

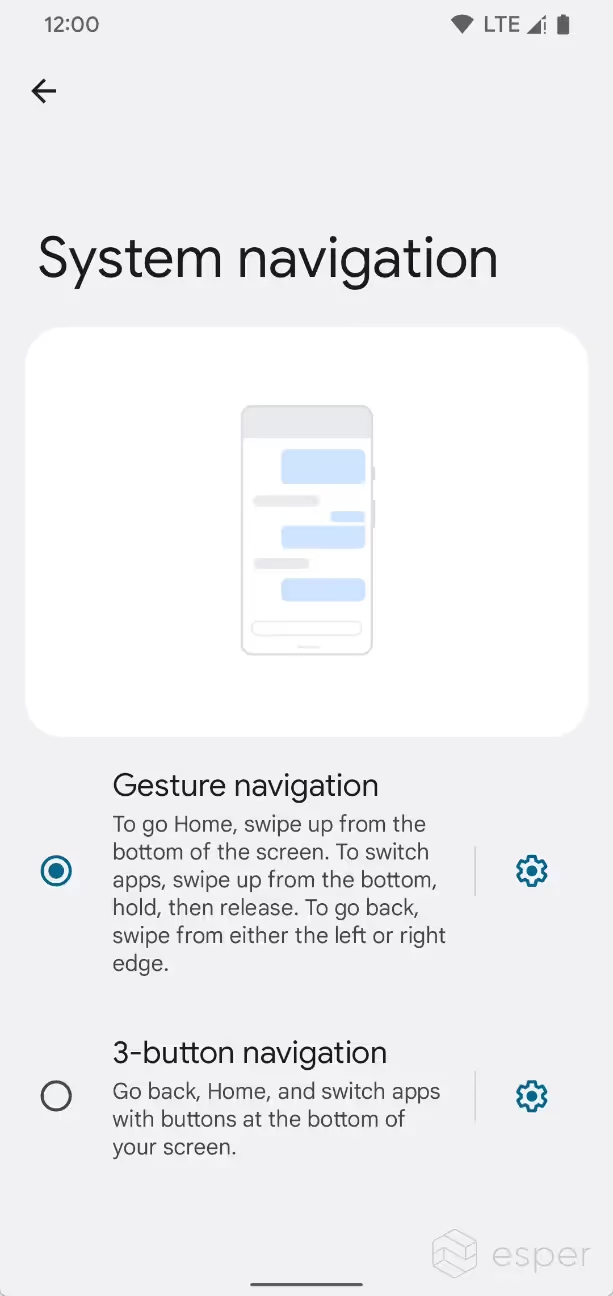

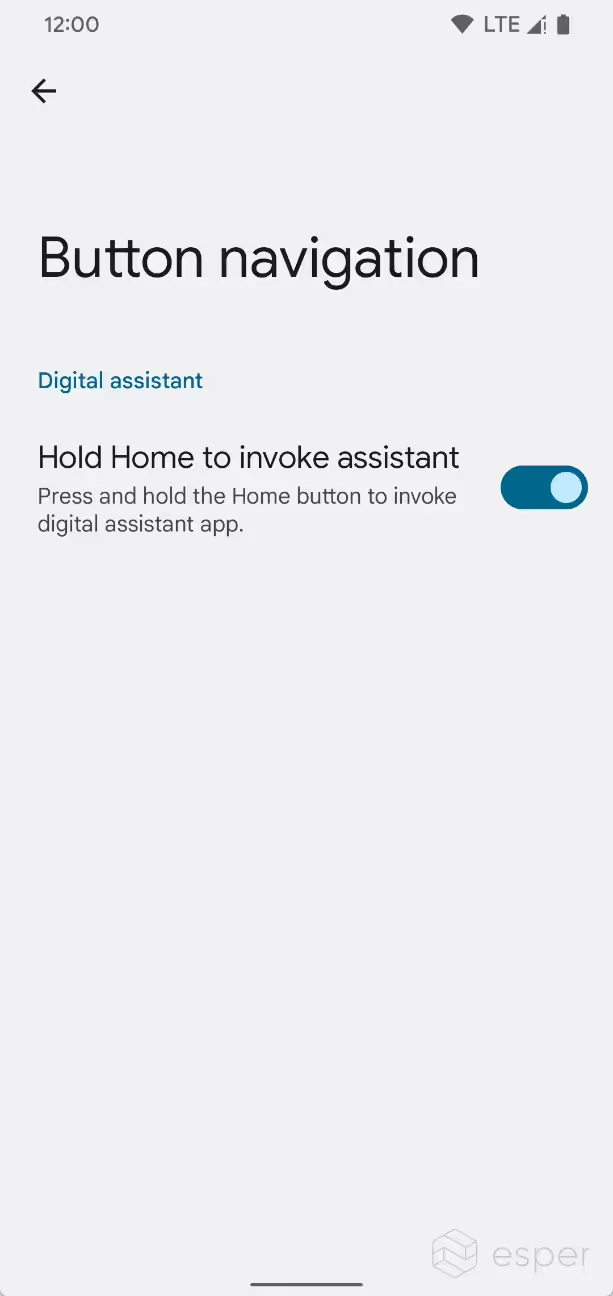

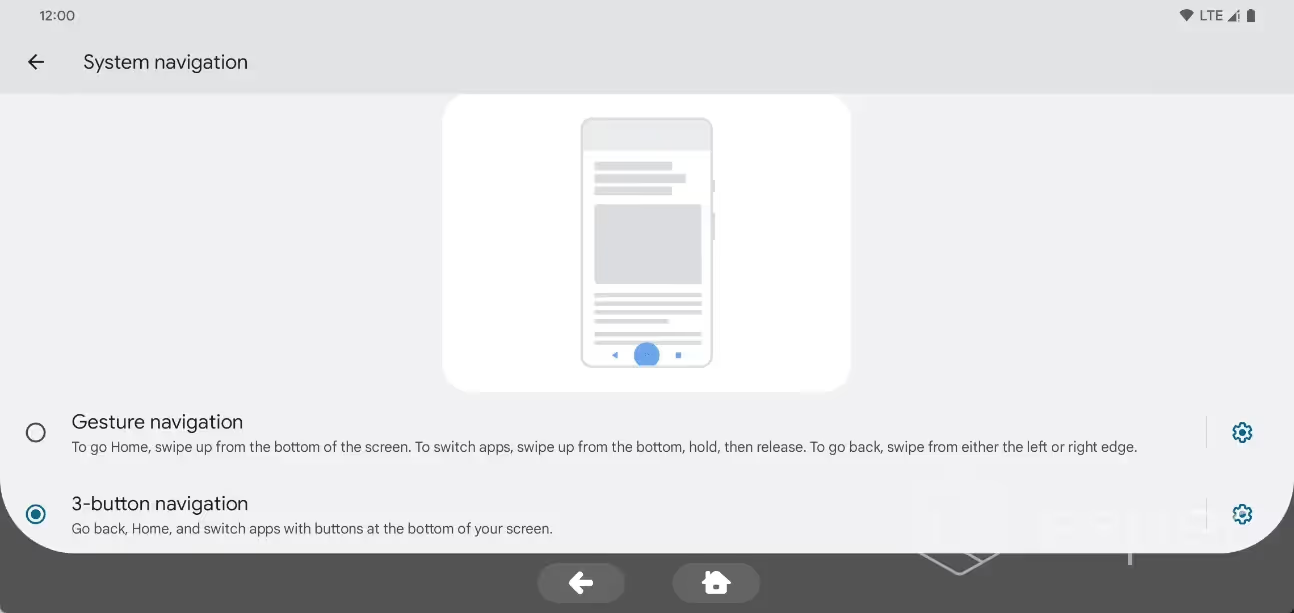

Disable the long-press home button action

Under Settings > System > Gestures > System navigation, a new submenu has been added for the 3-button navigation that lets you disable “hold Home to invoke assistant”. After disabling this feature, a long press of the home button will no longer launch the default assistant activity.

Drag to launch multiple instances of an app in split-screen

Android 13 supports dragging to launch multiple instances of the same activity in split-screen view. The FLAG_ACTIVITY_MULTIPLE_TASK intent flag is applied to the launch intent to let activities supporting multiple instances show side-by-side.

Launch an app in split screen from its notification

Early Android 13 builds made it possible to launch an app in split-screen multitasking mode by long-pressing its notification and then dragging and dropping to either half of the screen. This feature was actually introduced in Android 12L but was disabled by default. Since it is still quite inconsistent and likely only intended for large screen devices, the feature was disabled by default in Beta 3.2 for handhelds. This video shows the feature in action.

Predictive back gesture navigation

Android 13 promises to make back navigation more “predictive”, though not in the sense of using machine learning to improve back gesture recognition as is already the case on Pixel devices. Instead, Android 13 is attempting to address the ambiguity of what happens when performing the back gesture. The feature will let users preview the destination or other result of a back gesture before they complete it, letting them decide whether they want to continue with the gesture or stay in the current view.

To complement this feature, the launcher is adding a new back-to-home transition animation that will make it very clear to the user that performing a back gesture will exit the app back to the launcher. The new back-to-home animation scales the app window as the user’s finger is swiping inward, similar to the swipe up to home animation. The user will see a snapshot of the home screen or app drawer as they’re swiping, indicating that completing the gesture will exit the app back to the launcher.

In order to make this new animation possible, Android 13 is changing the way apps handle back events. First of all, the KeyEvent#KEYCODE_BACK and OnBackPressed APIs are being deprecated. Instead, the system lets apps register back invocation callbacks through the new OnBackInvokedCallback platform API or OnBackPressedCallback API in the AppCompat (version 1.6.0-alpha03 or later) library. If the system detects there aren’t any registered handlers, then it can play out the new predictive back gesture animation because it can “predict” what to do when the user completes the back gesture. If there are layers that have registered handlers, on the other hand, then the system will invoke them in the reverse order in which they are registered.
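The dispatch rule described above can be modeled in a few lines of plain Java. This is only a sketch of the idea, not the platform implementation: `BackDispatchModel` and its methods are hypothetical names, and real apps would register an OnBackInvokedCallback or OnBackPressedCallback instead. The model assumes that the most recently registered handler is the first one to run.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical model of back dispatching; not the Android platform API. */
final class BackDispatchModel {
    // The most recently registered handler sits at the head of the deque.
    private final Deque<Runnable> handlers = new ArrayDeque<>();

    void register(Runnable handler) {
        handlers.push(handler);
    }

    void unregister(Runnable handler) {
        handlers.remove(handler);
    }

    /** With no handlers registered, the outcome of a back gesture is known in advance. */
    boolean canPredict() {
        return handlers.isEmpty();
    }

    /** Simulates a completed back gesture and reports who handled it. */
    String dispatchBack() {
        if (handlers.isEmpty()) {
            return "system"; // nothing registered, so the system handles the gesture
        }
        handlers.peek().run(); // newest registration runs first
        return "app";
    }
}
```

In this model, an app that keeps a handler registered at all times never lets `canPredict()` return true, which is why handlers are best registered only while they are actually needed.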

Previously, the system wouldn’t always be able to predict what would happen when the user tries to go back, because individual activities could have their own back stacks that the system isn’t aware of and apps could override the behavior of back navigation. The way back events are handled in Android 13 enables a more intuitive back navigation experience while also letting apps continue to handle custom navigation.

Apps can opt in to the new predictive back gesture navigation system by setting the new enableOnBackInvokedCallback Manifest attribute to “true”. Then, in order to test the new back-to-home animation, developers can toggle “predictive back animations” in developer options. The new back dispatching behavior will be enabled by default for apps targeting Android 14 (API level 34).

A demo of the new back-to-home animation can be seen in this video from Google’s codelab.

Taskbar adds app predictions row and search bar

Google is working to bring feature parity between the taskbar’s app drawer on large screen devices and the app drawer on handheld devices. The taskbar’s new app drawer now shows a predictions row and will support showing a search bar. The former is enabled by default while the latter is controlled by a feature flag (ENABLE_ALL_APPS_ONE_SEARCH_IN_TASKBAR) during testing. However, the search bar currently doesn’t appear with this flag enabled in Beta 1.

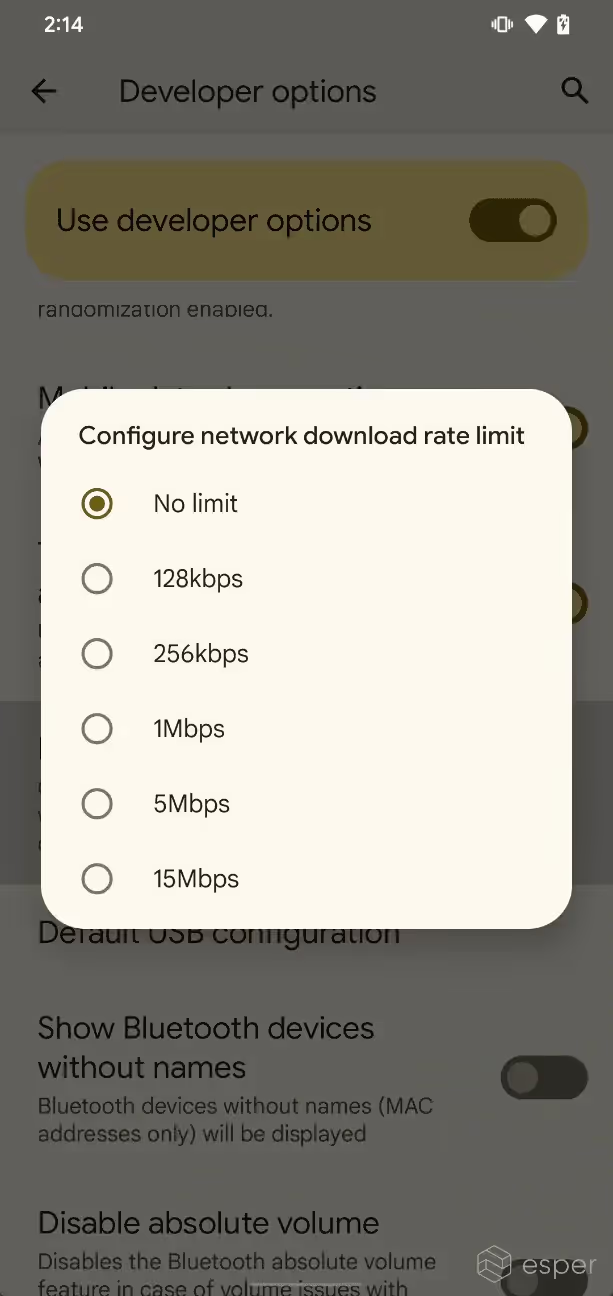

Bandwidth throttling

Simulating slow network conditions can be useful for development and debugging, but Android hasn’t provided an easy way to throttle network speeds until the latest Android 13 release. In Android 13, a new setting in Developer Options lets developers set a bandwidth rate limit for all networks capable of providing Internet access, whether that be Wi-Fi or cellular networks. This setting is called “network download rate limit” and has 6 options, ranging from “no limit” to “15Mbps.”

For more information on this feature, please refer to this article.

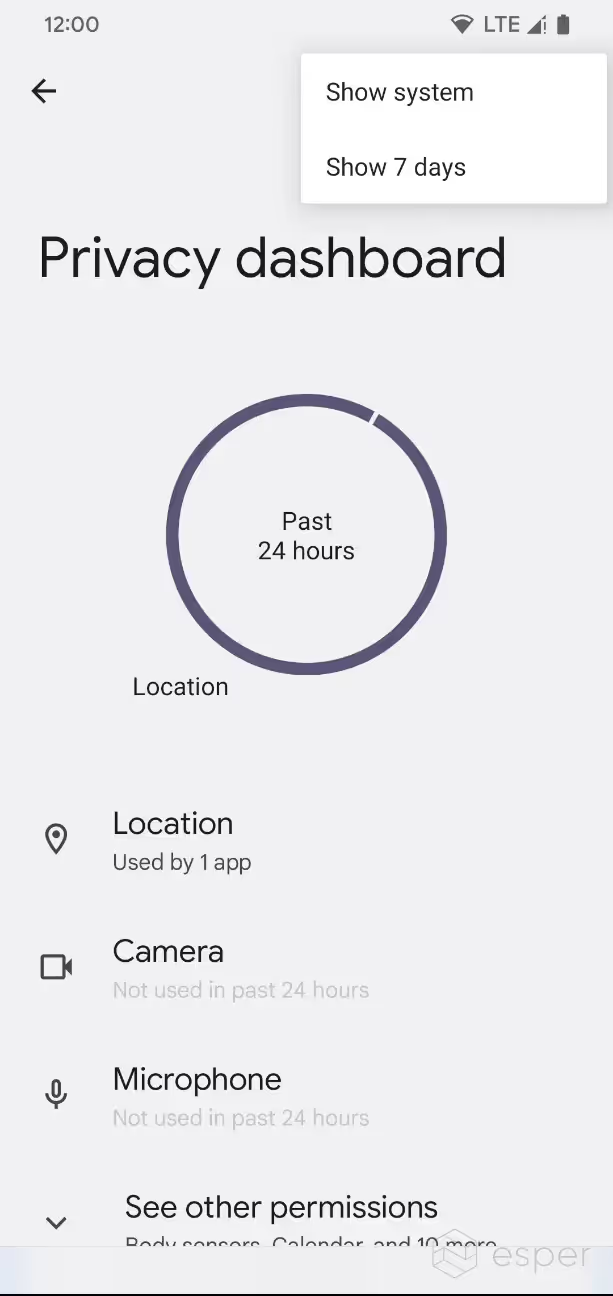

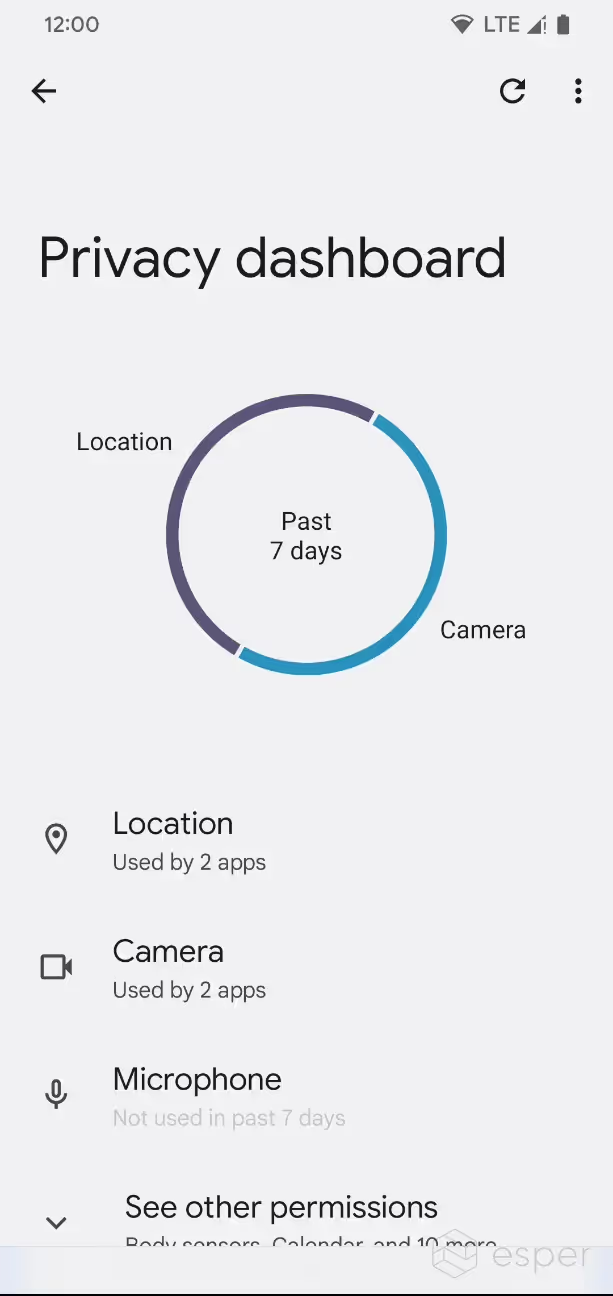

7-day view in privacy dashboard

Android 12 introduced the “Privacy dashboard” feature which lets users view the apps that have accessed permissions marked as “dangerous” (i.e. runtime permissions). The dashboard only shows data from the past 24 hours, but in Android 13, a new “show 7 days” button will show permissions access data from the past 7 days.

This feature is not enabled by default in current Android 13 preview builds, but Google confirmed at I/O that the feature will be available. The feature is currently controlled by a device_config flag that can be toggled using the following ADB shell command:

Clipboard auto clear

Android offers a clipboard service that’s available to all apps for placing and retrieving text. Many keyboard apps like Google’s Gboard extend the global clipboard with a database that stores multiple items. Gboard even automatically clears any clipboard item that’s older than 1 hour.

Although any app can technically clear the primary clip in the global clipboard (so long as they’re either the foreground app or the default input method on Android 10+), Android itself does not automatically clear the clipboard. This means that any clipboard item left in the global clipboard could be read by an app at a later time, though Android’s clipboard access toast message will likely alert the user to this fact.

Android 13, however, has added a clipboard auto clear feature. This feature will automatically clear the primary clip from the global clipboard after a set amount of time has passed. By default, the clipboard is cleared after 3600000 milliseconds (60 minutes) have passed, matching Gboard’s functionality.
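The timeout behavior can be illustrated with a small, self-contained Java model. This is a sketch under stated assumptions rather than the ClipboardService code: the class name and methods are hypothetical, and the model checks for expiry lazily when the clip is read, whereas the real service may schedule the clear differently.

```java
/** Hypothetical model of a timed clipboard clear; not the ClipboardService code. */
final class ClipboardAutoClearModel {
    /** Default timeout named in the article: 3,600,000 ms, i.e. 60 minutes. */
    static final long DEFAULT_TIMEOUT_MS = 3_600_000L;

    private final long timeoutMs;
    private String primaryClip; // null means the clipboard is empty
    private long copiedAtMs;

    ClipboardAutoClearModel(long timeoutMs) {
        this.timeoutMs = timeoutMs;
    }

    /** Stores a new clip and restarts the timer. */
    void setPrimaryClip(String text, long nowMs) {
        primaryClip = text;
        copiedAtMs = nowMs;
    }

    /** Returns the clip, or null once the timeout has elapsed since the last copy. */
    String getPrimaryClip(long nowMs) {
        if (primaryClip != null && nowMs - copiedAtMs >= timeoutMs) {
            primaryClip = null; // the clip has expired
        }
        return primaryClip;
    }
}
```

Passing timestamps in explicitly keeps the model deterministic, so a short timeout such as 5,000 ms can be exercised without waiting in real time.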

The logic for this new feature is contained within the ClipboardService class of services.jar. Here is a demonstration of the clipboard auto clear feature in Android 13 with a timeout of 5 seconds:

Control smart home devices without unlocking the device

Android 11 introduced the Quick Access Device Controls feature which lets users quickly view the status of and control smart home devices like lights, thermostats, and cameras. Apps can use the ControlsProviderService API to tell SystemUI which controls it can show in the Device Controls area. The device maker can choose where to surface the Device Controls area, but in AOSP Android 12, it can be opened through a shortcut on the lock screen or Quick Settings panel. However, if the user opens Device Controls while the device is locked, then they will only be able to see and not control any of their smart home devices.

In Android 13, however, apps can let users control their smart home devices without having them unlock their devices. The isAuthRequired method has been added to the Control class, and if it returns “false”, then users can interact with the control without authentication. This behavior can be set per-control, so developers do not need to expose all device controls offered by their app to interaction without authentication. The following video demonstrates the new API in action:

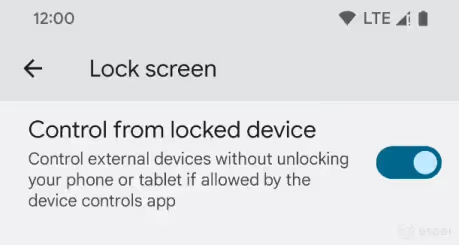

Starting with Beta 1, a new setting is available under Settings > Display > Lock screen called “control from locked device.” When enabled, users can “control external devices without unlocking your phone or tablet if allowed by the device controls app.”

QR code scanner shortcut

QR codes have been an indispensable tool during the COVID-19 pandemic, as they’re a cheap and highly accessible way for a business to lead users to a specific webpage without directly interacting with them. In light of the renewed importance of QR codes, Google is implementing a handy shortcut in Android 13 to launch a QR code scanner.

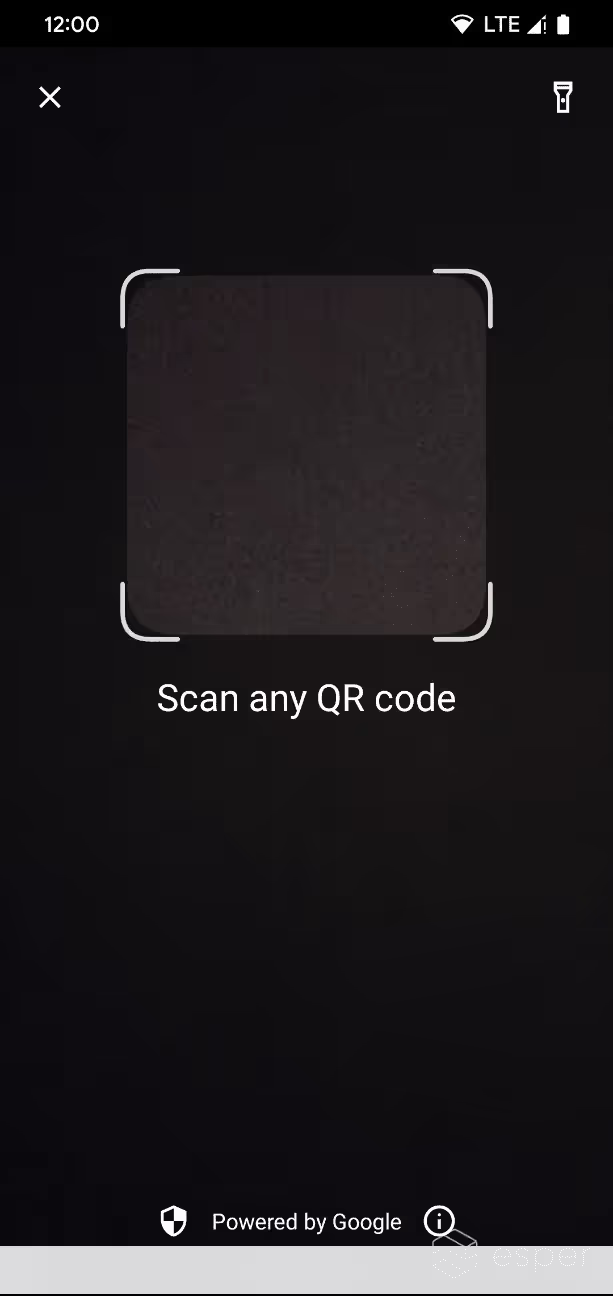

Specifically, Android 13 implements a new Quick Setting tile to launch a QR code scanner. Android 13 itself won’t ship with a QR code scanning component, but it will support launching a component that does. The new QRCodeScannerController class in SystemUI defines the logic, and the component that is launched is contained within the device_config value “default_qr_code_scanner”. On devices with GMS, Google Play Services manages device_config values, and hence sets the QR code scanner component as com.google.android.gms/.mlkit.barcode.ui.PlatformBarcodeScanningActivityProxy.

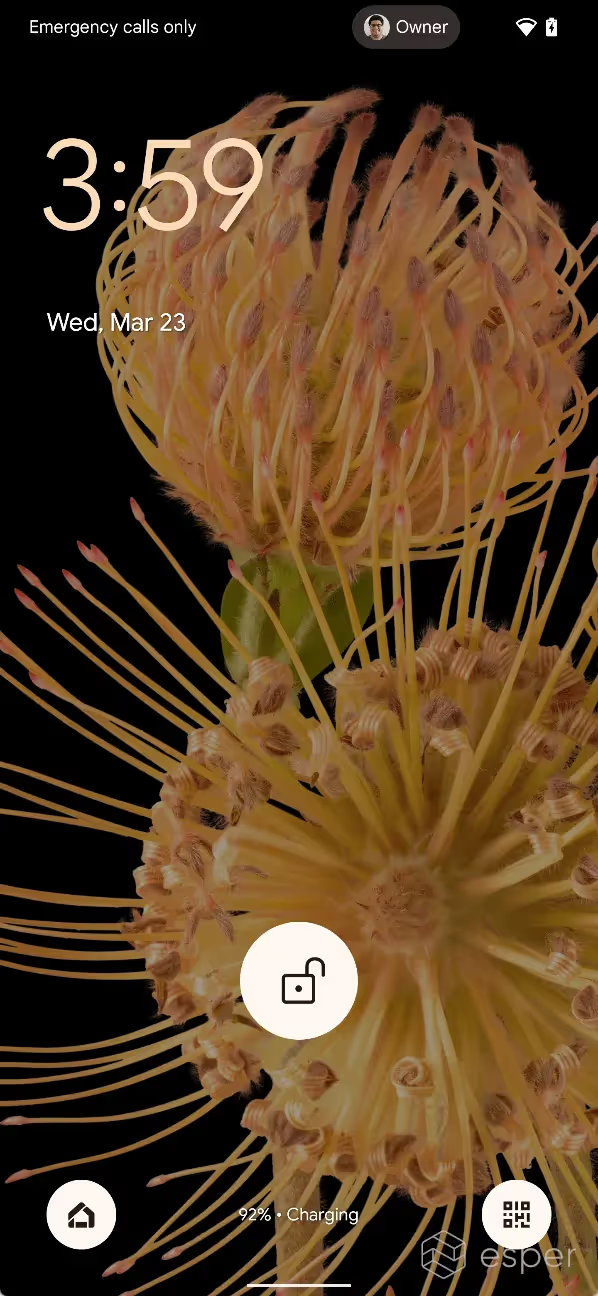

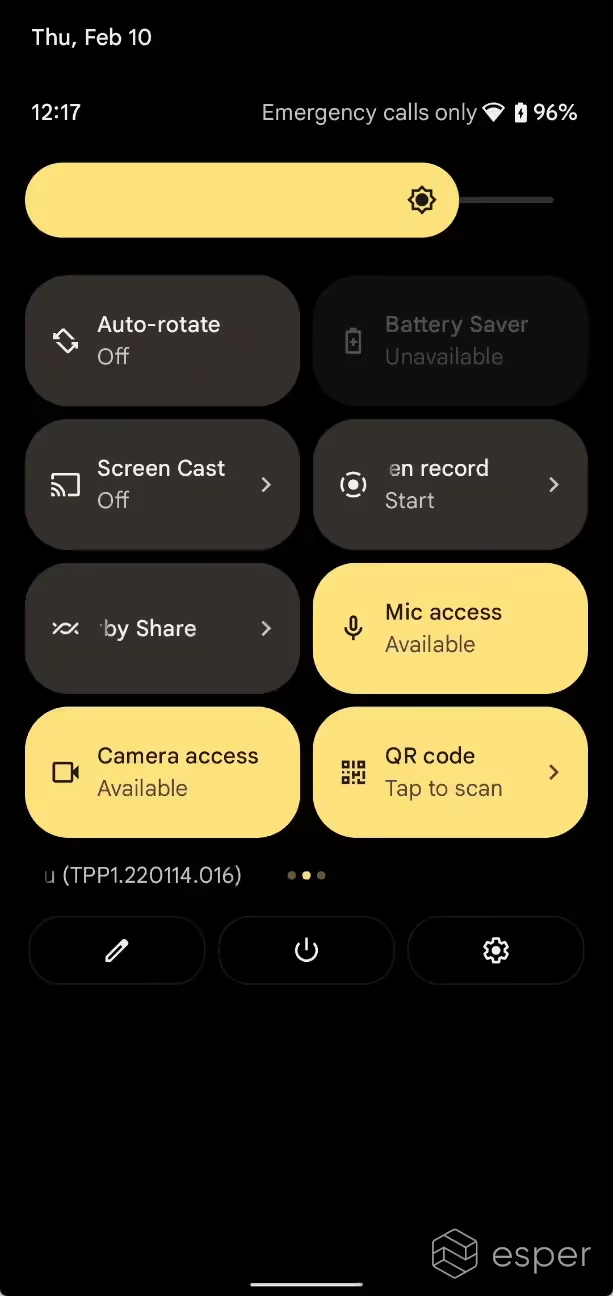

Left: QR code scanner shortcut on the lock screen.

Right: QR code scanner Quick Setting tile.

The Quick Setting tile is part of the default set of active Quick Settings tiles. Its title is “QR code” and its subtitle is “Tap to scan.” The tile is grayed out if no component is defined in the device_config value “default_qr_code_scanner”. Within the Settings.Secure.sysui_qs_tiles settings value that keeps track of the tiles selected by the current user, the value for the QR code scanner tile is “qr_code_scanner”.

Launching the default QR code scanner component provided by GMS.

There is also a lock screen entry point for the QR code scanner, which is controlled by the framework flag ‘config_enableQrCodeScannerOnLockScreen.’ This value is set to false by default. Currently, Android 13 does not provide a user-facing setting to control the visibility of the lock screen entry point.

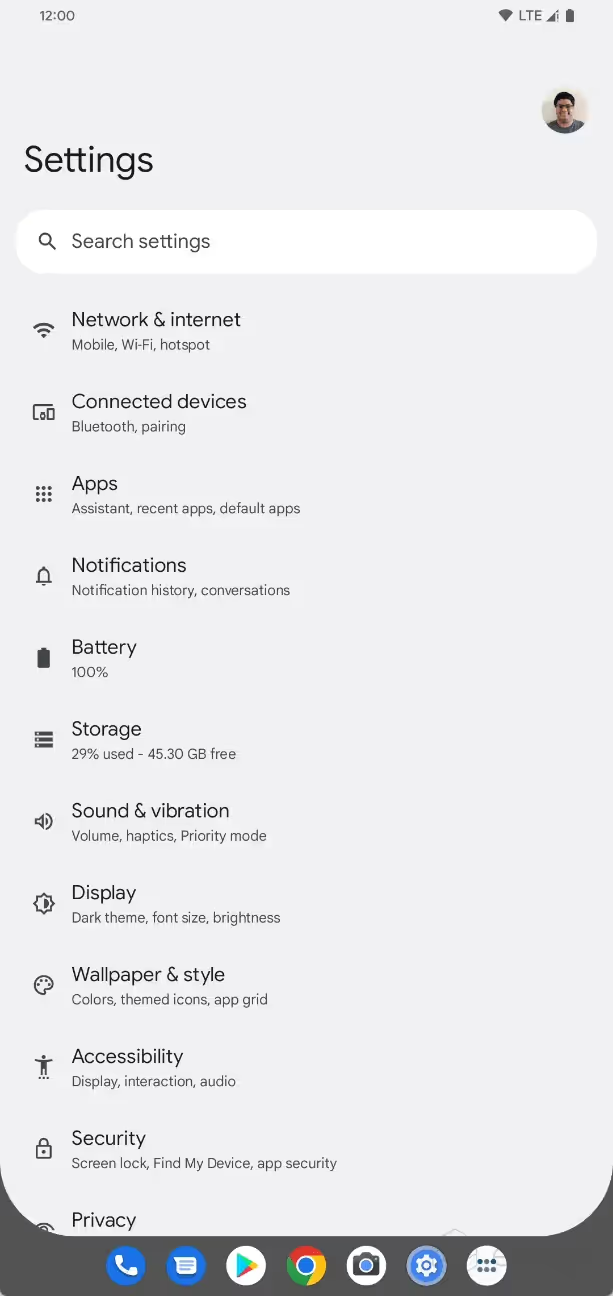

Unified Security & Privacy settings

During Google I/O, Google announced that it will introduce a unified Security & Privacy settings page in Android 13. This new settings page will consolidate all privacy and security settings in one place, and it will also provide a color-coded indicator of the user’s safety status and guidance on how to boost security. The “Security” settings page on Pixel devices already shows a color-coded indicator of the user’s safety status and provides guidance, but it does not integrate privacy settings.


The new Security & Privacy settings page is contained within the PermissionController APK delivered through the PermissionController module. The component is com.google.android.permissioncontroller/com.android.permissioncontroller.safetycenter.ui.SafetyCenterActivity (for the Google-signed module), but the activity won’t launch unless the feature flag is enabled. This feature flag can be enabled by sending the following command:

While this makes the Security & Privacy settings page appear in top-level Settings, the page itself is not fully functional as of now. Google has yet to announce the full rollout of this feature, which will arrive via an update to the aforementioned Mainline module at a later date.

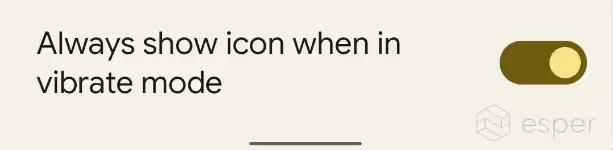

Toggle to show the vibrate icon in the status bar

Android places an icon in the status bar to reflect the sound mode, but in Android 12, the vibrate icon no longer showed when the device was in vibrate mode. Many users complained about this change, and in response, Google has added a toggle in Android 13 under Settings > Sound & vibration that restores the vibrate icon in the status bar when the device is in vibrate mode. The vibrate icon even appears in the status bar when on the lock screen. This toggle is available under “Sound & vibration” as “Always show icon when in vibrate mode” and its value is stored in Settings.Secure.status_bar_show_vibrate_icon.

This feature has been backported to Android 12 QPR3.

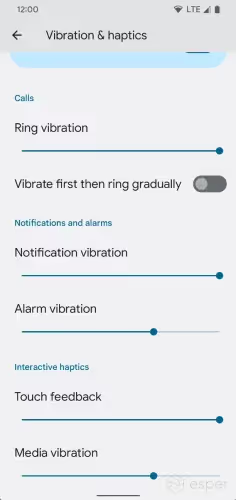

Vibration sliders for alarm and media vibrations

Under Settings > Sound & vibration > Vibration & haptics, sliders to configure the alarm and media vibration levels have been added.

In conjunction with this change, the Settings configuration flag controlling the supported intensity level (config_vibration_supported_intensity_levels) has been updated to be an integer, so device makers can specify how many distinct levels are supported.

What are the UI changes in Android 13?

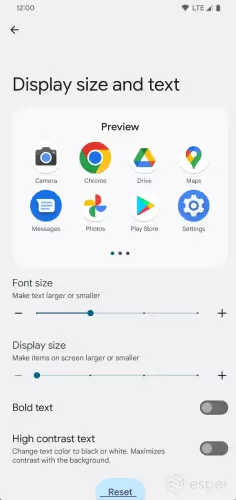

Consolidated font and display settings

The “font size” and “display size” settings under Settings > Display have been consolidated into a single page, called “display size and text.” The unified settings page also shows a preview for how changes to the font and display size affect icon and text scaling. It also includes two toggles previously found in Accessibility settings: “bold text” and “high contrast text.”

Low light clock when docked

Android has multiple features to display useful information while the device is idling, including a screen saver and ambient display. The former is set to receive a major revamp in Android 13 as part of Google’s overall effort to improve the experience of docked devices, while the latter is set to be joined by a simpler variant.

Android 13 includes a new “low light clock” that simply displays a TextClock view in a light shade of gray. This view is only shown when the device is docked, the ambient lighting is below a certain brightness threshold, and the SystemUI configuration value ‘config_show_low_light_clock_when_docked’ is set to ‘true.’

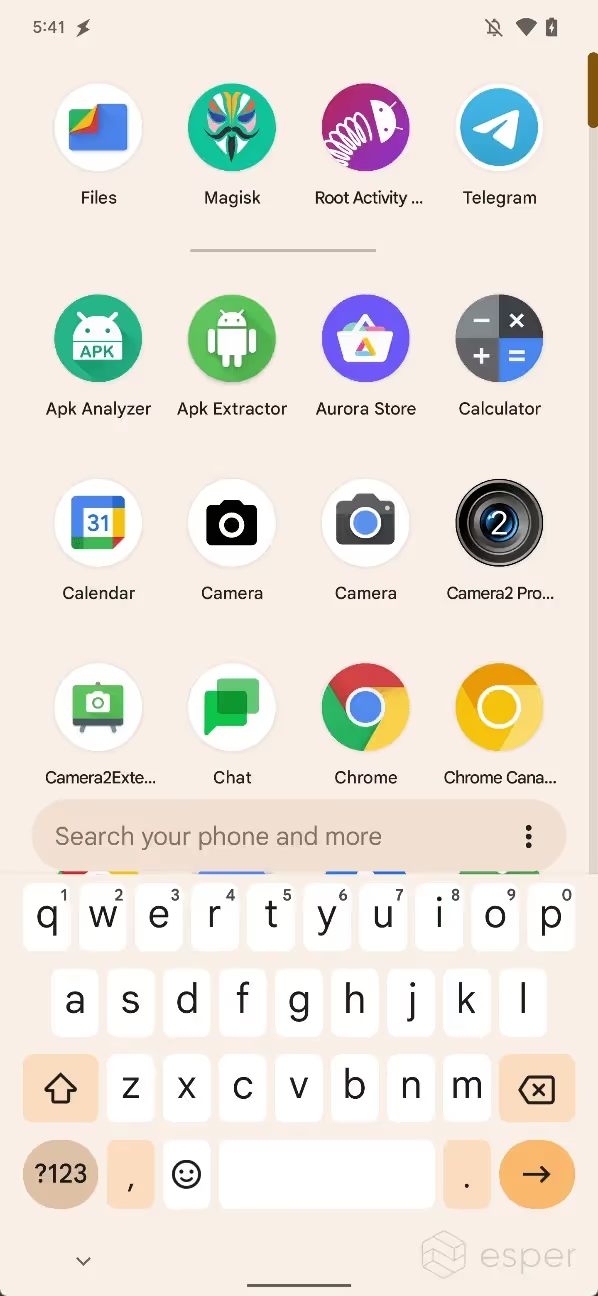

Bottom search bar in the launcher app drawer

Android 13 DP2 on Pixel has a new feature flag that, when enabled, shifts the search bar in the app drawer to the bottom of the screen. The search bar remains at the bottom until the keyboard is opened, after which it’ll shift to stay above the keyboard.

The “floating search bar” in Pixel Launcher on Android 13.

This feature is disabled by default but can be enabled by setting ENABLE_FLOATING_SEARCH_BAR to true. It remains to be seen if this behavior is exclusive to Google’s Pixel Launcher fork or if this will be available in AOSP Launcher3.

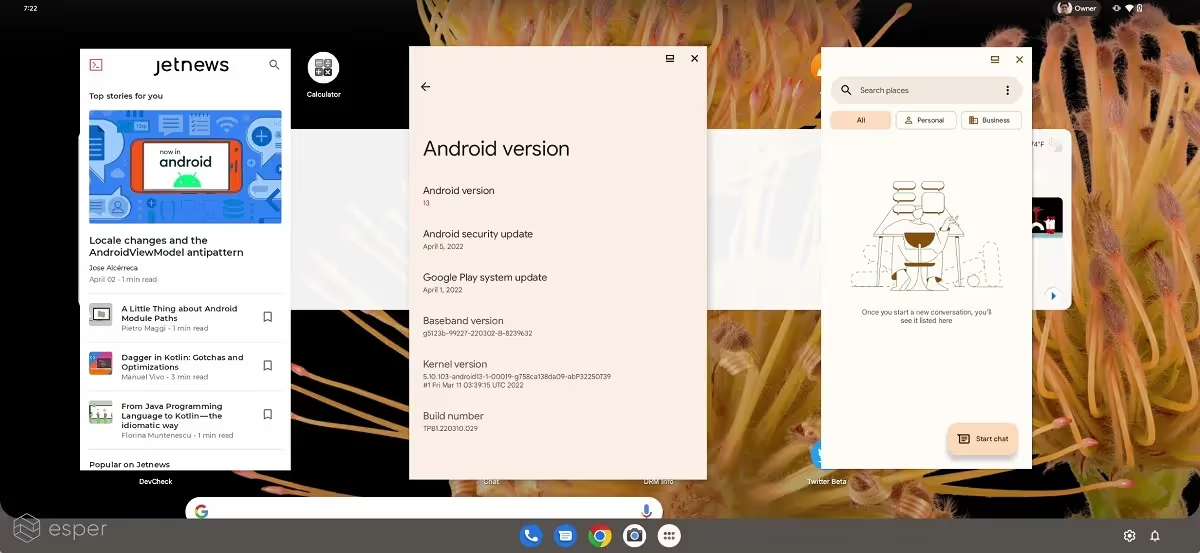

Custom interface for PCs

Android can run on a variety of hardware, including dedicated devices like kiosks, but Google only officially supports a handful of device types. These device types are defined in the Compatibility Definition Document (CDD), and they include handheld devices (like phones), televisions, watches, cars, and tablets. When building Android for a particular device, device makers need to declare the feature corresponding to the device type; for example, television device implementations are expected to declare the feature ‘android.hardware.type.television’ to tell the system and apps that the device is a television.

Since Android apps can also run on Chromebooks, Google created the ‘android.hardware.type.pc’ device type a few years back so apps can target traditional clamshell and desktop computing devices and the framework can recognize apps that have been designed for those form factors. However, it wasn’t until Android 12L that Google decided to revamp the UI for large screen devices, and in Android 13, Google is taking another step in that direction.

On PC devices, the launcher’s taskbar is tweaked to show dedicated buttons for notifications and quick settings. These buttons are persistently shown on the right side of the taskbar, where the 3-button navigation keys would ordinarily be displayed on other large screen devices.

Android 13’s taskbar gains dedicated buttons for notifications and quick settings on PC devices.

In addition, I noticed that all apps are launched in freeform multi-window mode by default. Freeform multi-window was introduced in Android 7.0 Nougat and to this day remains hidden behind a developer option. Google may be getting ready to enable freeform multitasking support by default on large screen devices like PCs, but this remains to be seen.

Kids mode for the navigation bar

Within Launcher3 is a new navigation bar mode called “kids mode.” When enabled on large screen devices, the drawables and layout for the back and home icons are changed, the recents overview button is hidden, and the navigation bar is kept visible when apps enter immersive mode. When in immersive mode, the buttons fade after a few seconds until they’re pressed again.

This feature is controlled by the boolean value Settings.Secure.nav_bar_kids_mode.

Unified search bar for the home screen and app drawer

During the development of Android 12L, Google experimented with unifying the home screen and app drawer search experiences. This experiment was gated by the ENABLE_ONE_SEARCH flag, but it was removed from the Launcher3 codebase prior to the AOSP release.

This unified search bar returned in Android 13 with the release of Beta 1, but it was disabled by default. To enable it, the following command needed to be sent:

As of Beta 2, however, this search bar is now available by default.

Lock screen rotation enabled on large screen devices

Android’s framework configuration controlling lock screen rotation has been set to “false” by default for years, but it is now enabled by default. In Android 13, the lock screen will only rotate on large screen devices, however.

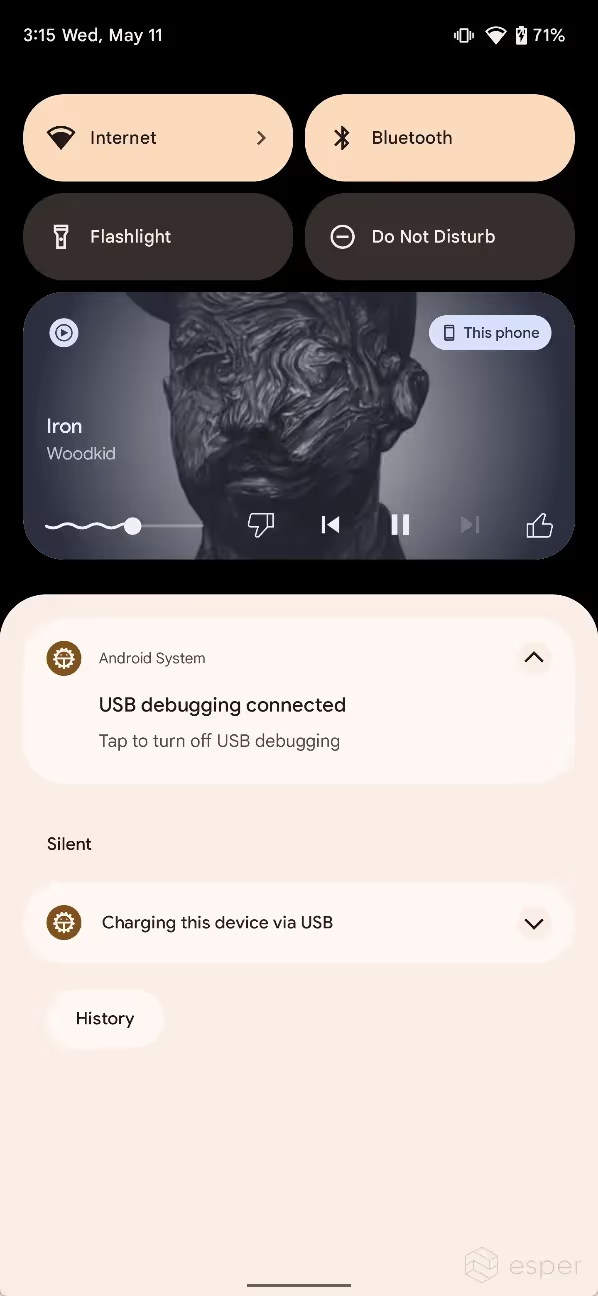

Redesigned media output picker UI

In Android 10, Google introduced an output picker that lets users switch audio output between supported audio sources, such as connected Bluetooth devices. This output picker is accessed by tapping the media output picker button in the top-right corner of the media player controls. Now in Android 13, Google has revamped the media output picker UI.

The highlight of the new media output picker UI is the larger volume slider for each connected device.

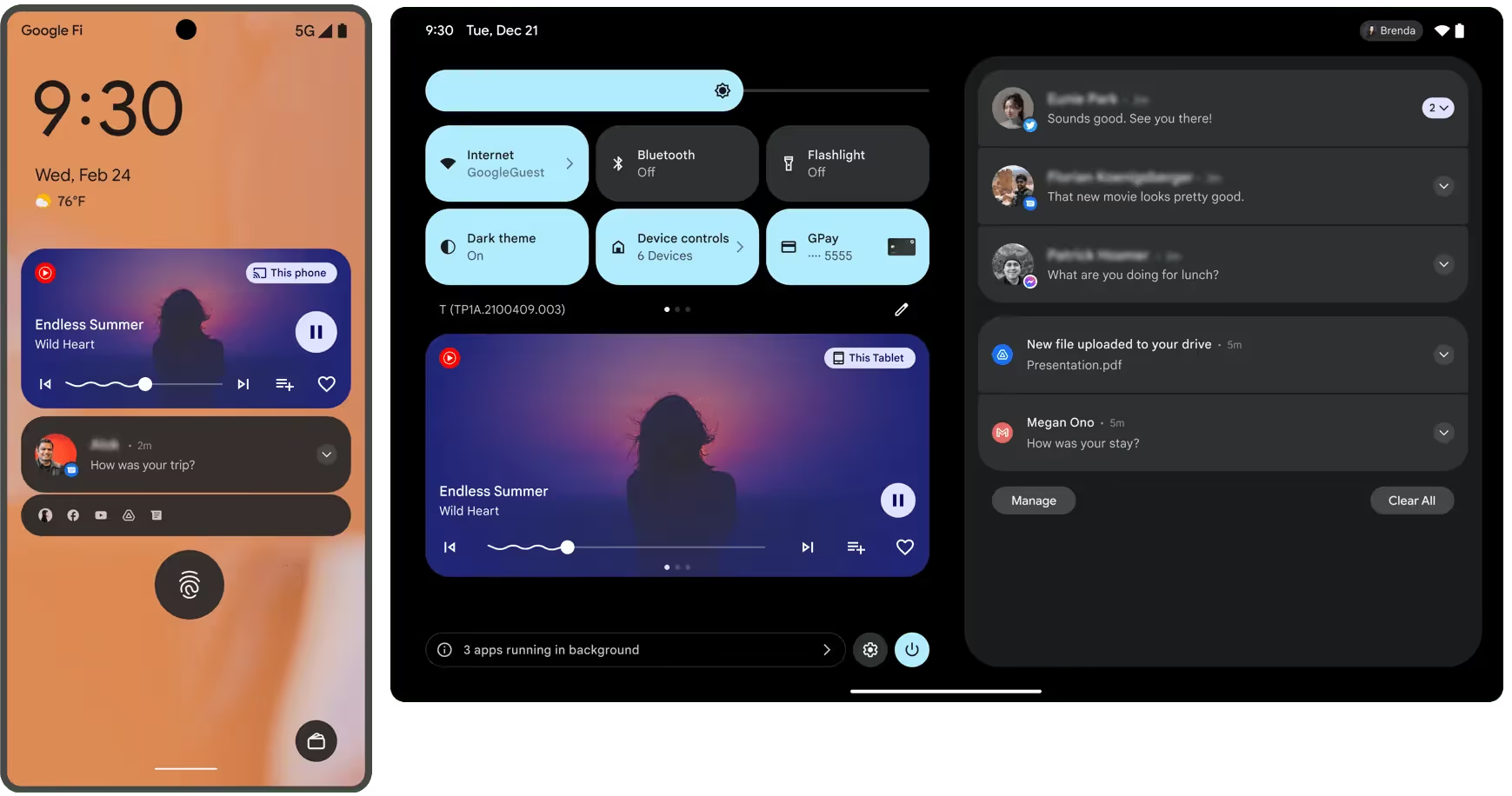

Redesigned media player UI

In Android 11, Google reworked the media player controls to support multiple sessions and integration with the notifications shade. Now in Android 13, Google has revamped the media player UI.

The new media player UI features a larger play/pause button that’s been shifted to the right side, a (squiggly) progress slider that’s at the bottom left in line with the rest of the media control buttons, and the media info on the left side. The album art is displayed in the background, and the color scheme of the media output switcher button is extracted from the album art.

The UI of the long-press context menu for the media player has also been updated. The shortcut to settings has been moved to a gear in the upper right corner, and the “hide” button is now filled.

Squiggly progress bar

The progress bar in the media player now shows a squiggly line up to the current timestamp.

In Beta 1, the squiggly progress bar was centered at the bottom of the media player. In Beta 2, the progress bar has been shortened and is now shown at the bottom left.

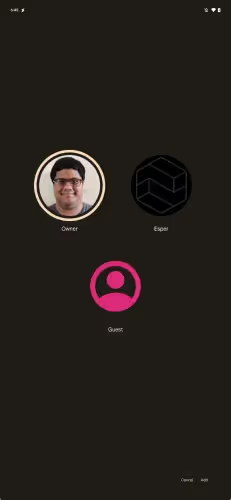

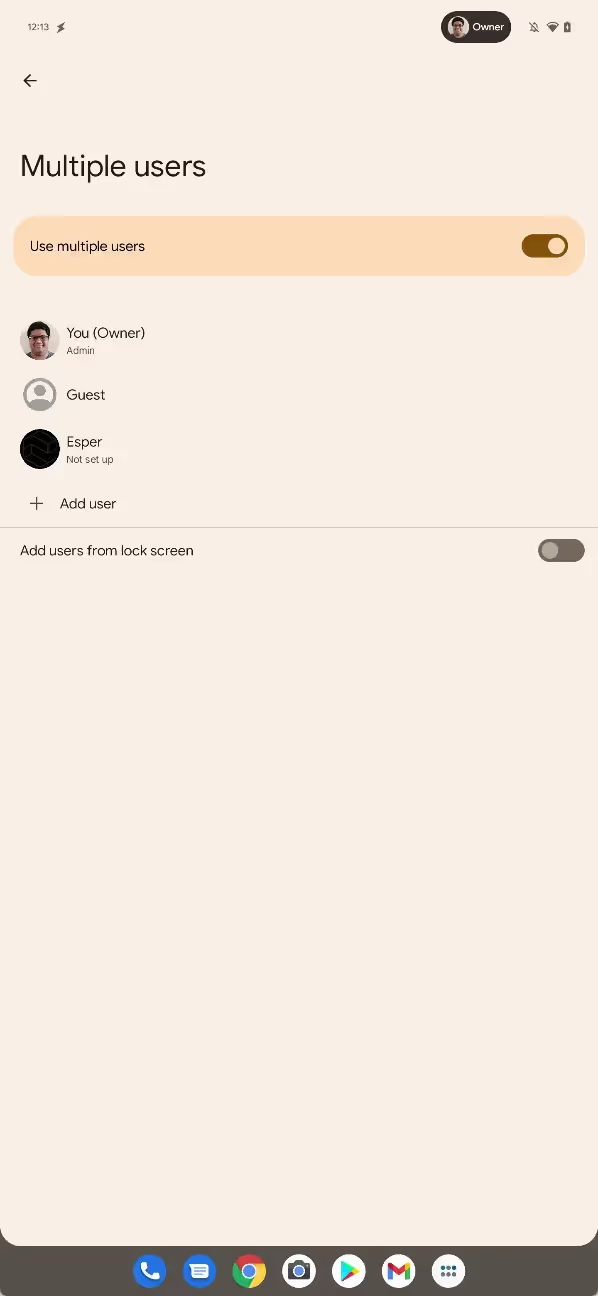

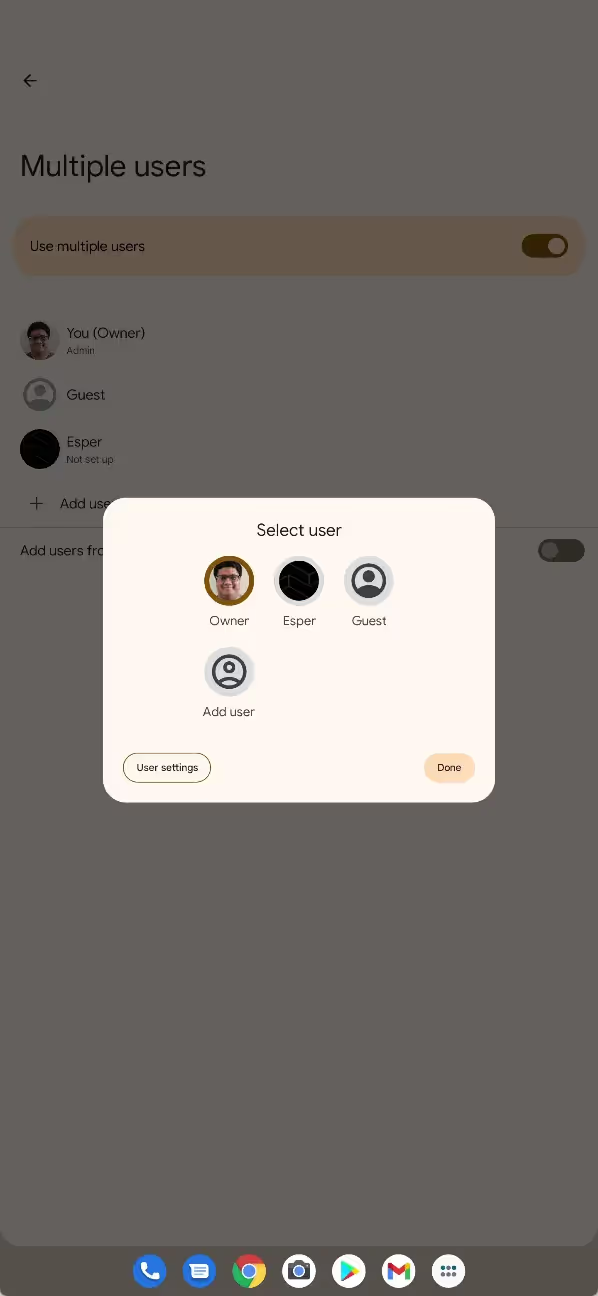

Fullscreen user profile switcher

In an effort to improve the experience of sharing a device, Google has introduced numerous improvements to the multi-user experience. One change that’s in development is a fullscreen user profile switcher.

This interface is likely intended for large screen devices that have a lot of screen real estate. It’s currently disabled by default but can be enabled through the configuration value config_enableFullscreenUserSwitcher.

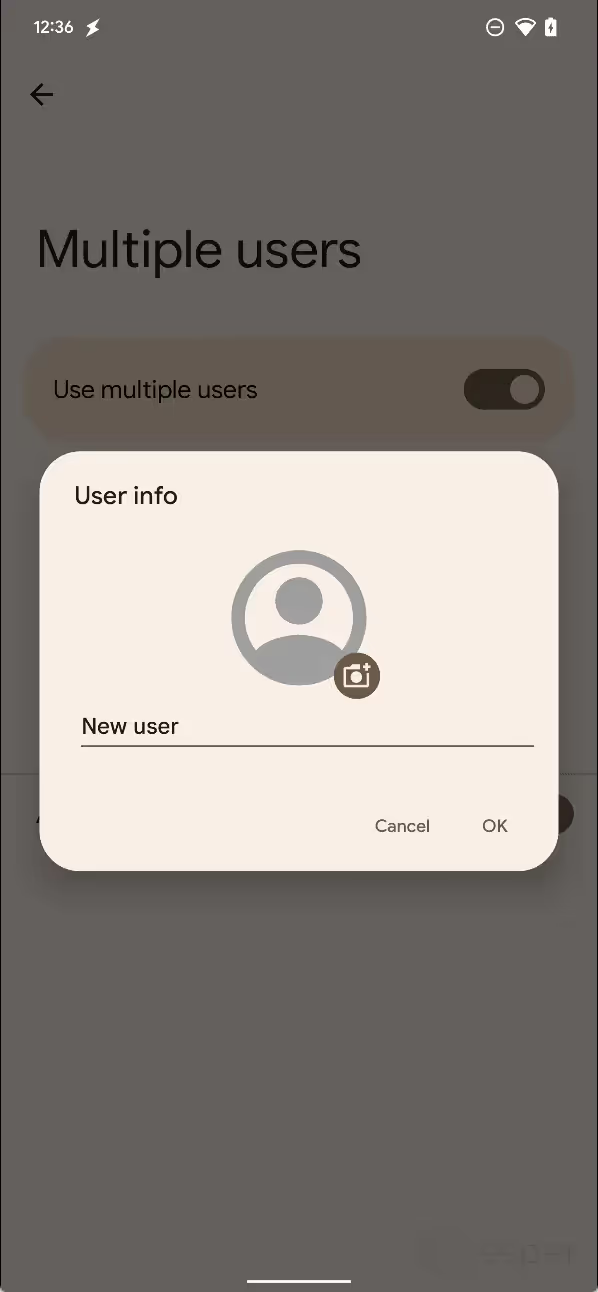

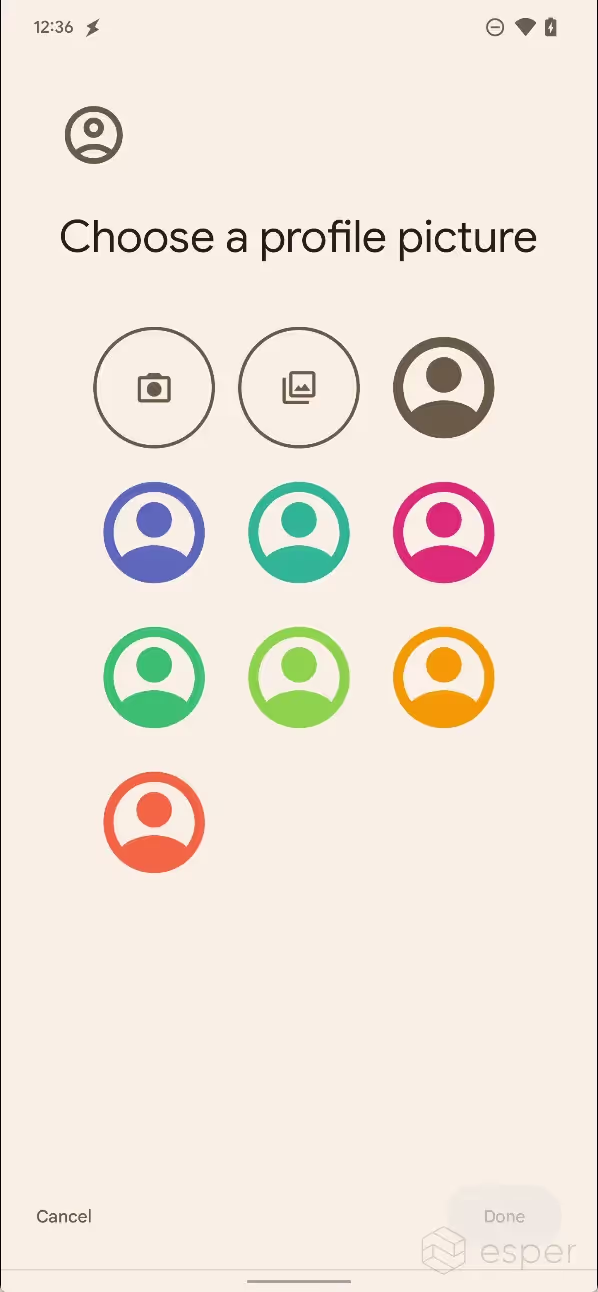

Revamped UI for adding a new user

The UI for creating a new profile has been redesigned in Android 13. Users now have a few options of varying colors to choose from when choosing a profile picture, or they can take a photo using the default camera app or choose an image from the gallery.

The new user creation UI in Android 13

Status bar user profile switcher

Google is experimenting with placing a status bar chip that displays the current user profile and, when tapped, opens the user profile switcher. This chip is not enabled by default in current Android 13 builds, but it can be enabled by setting the SystemUI flag flag_user_switcher_chip to true. Given the limited space available on smartphones, it’s likely this feature is intended for large screen devices like tablets.

Android 13’s status bar chip for the user profile switcher

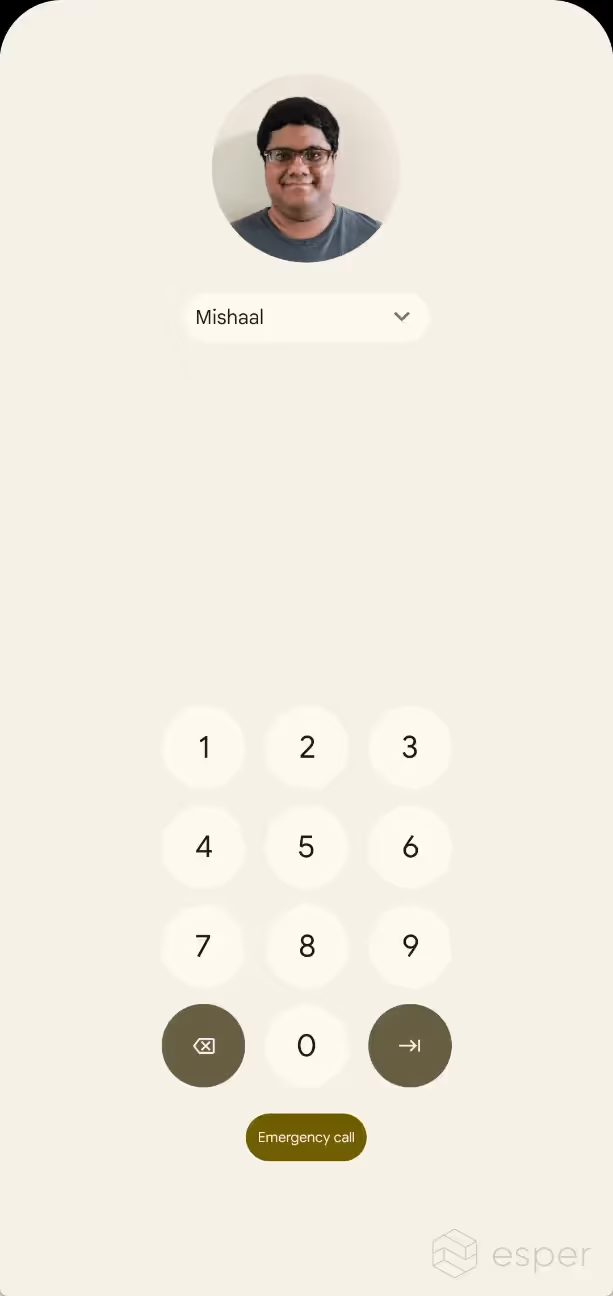

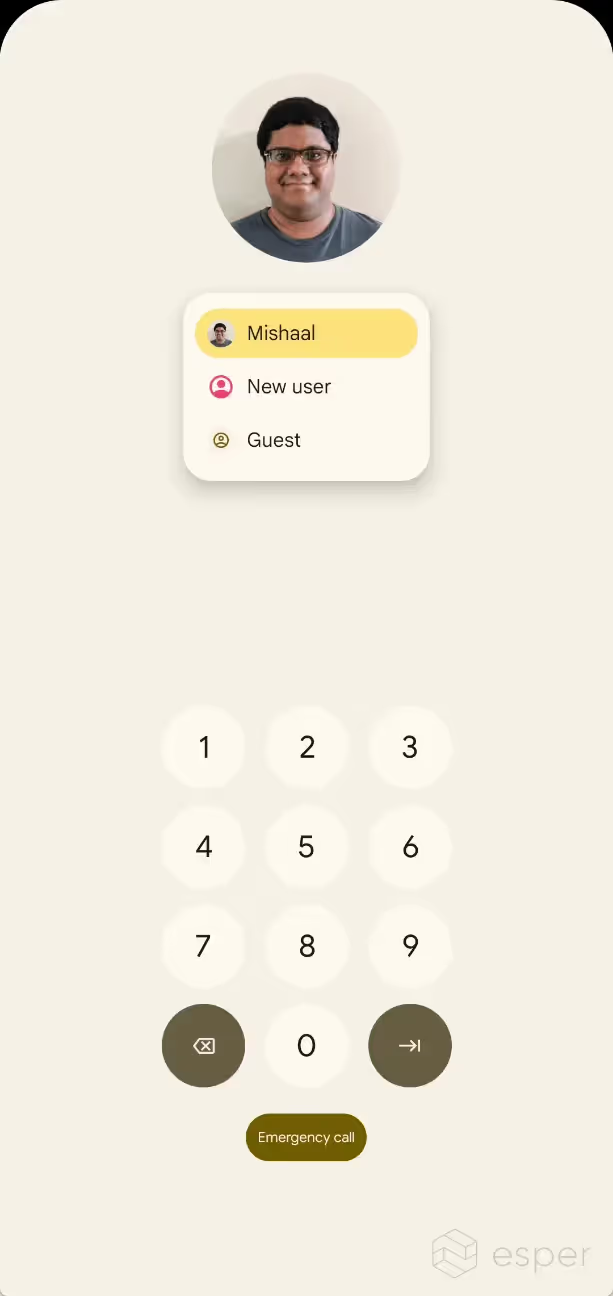

User switcher on the keyguard

In Android 13, the keyguard screen (i.e. the lock screen PIN/password/pattern entry page) can show a large user profile switcher on the top (in portrait mode) or on the left (in landscape mode). This feature is disabled by default and is controlled by the SystemUI boolean ‘config_enableBouncerUserSwitcher’.

Button rearrangement in the notification shade

Google has moved the power, settings, and profile switcher buttons in the notification shade. Previously, they were located directly underneath the Quick Settings panel. Now, they are located at the very bottom, tucked to the right.

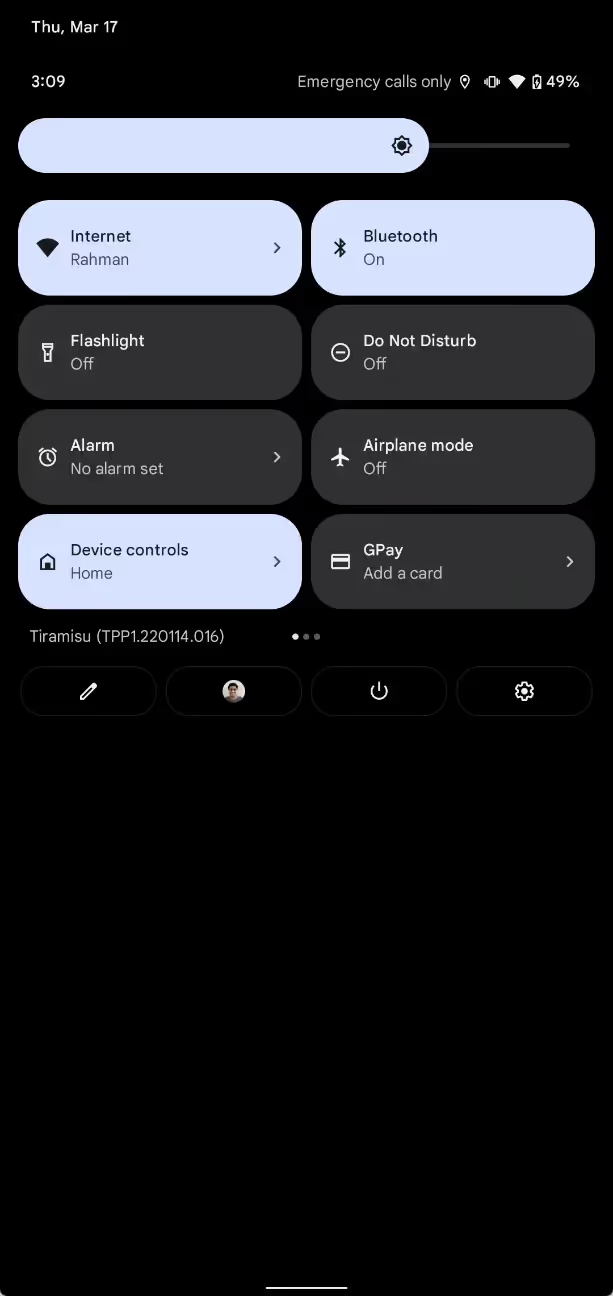

Left: notification shade layout in Android 13 DP1 and before.

Right: notification shade in Android 13 DP2 and later.

Ignore the difference in DPI!

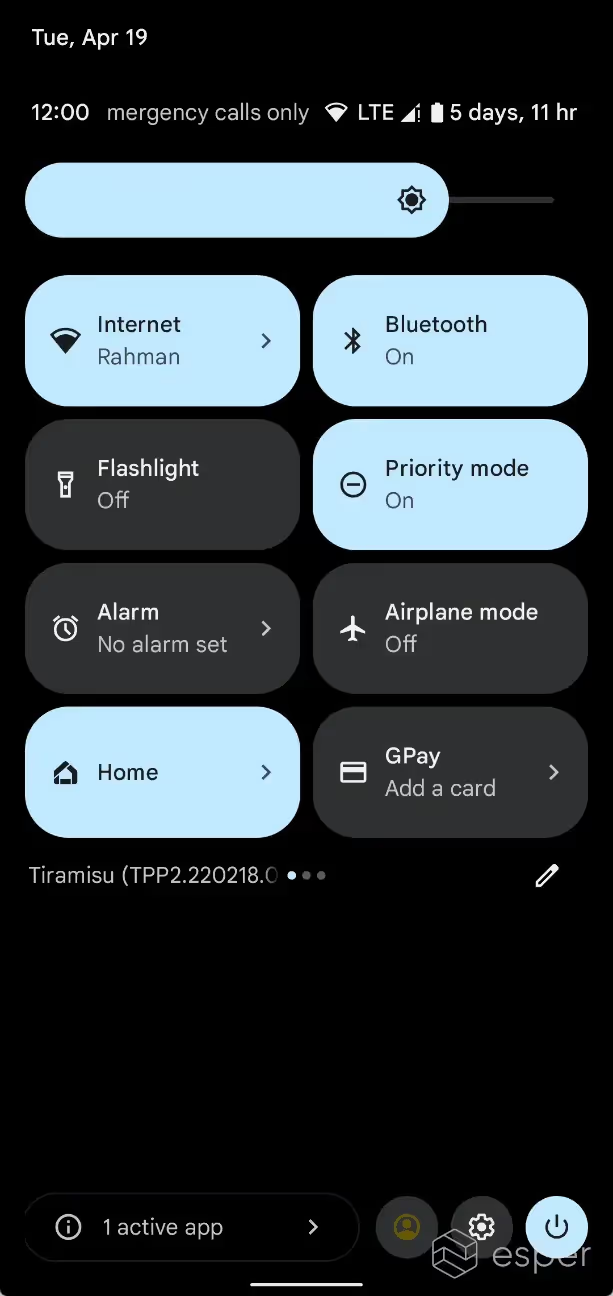

Do Not Disturb may be rebranded to Priority mode

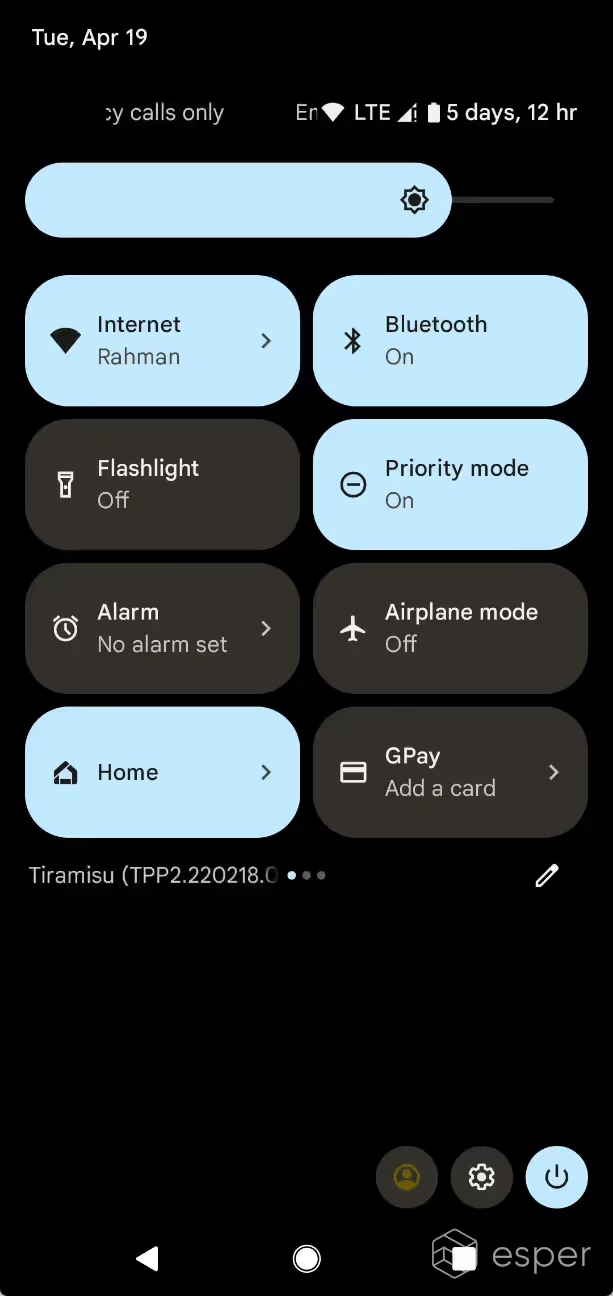

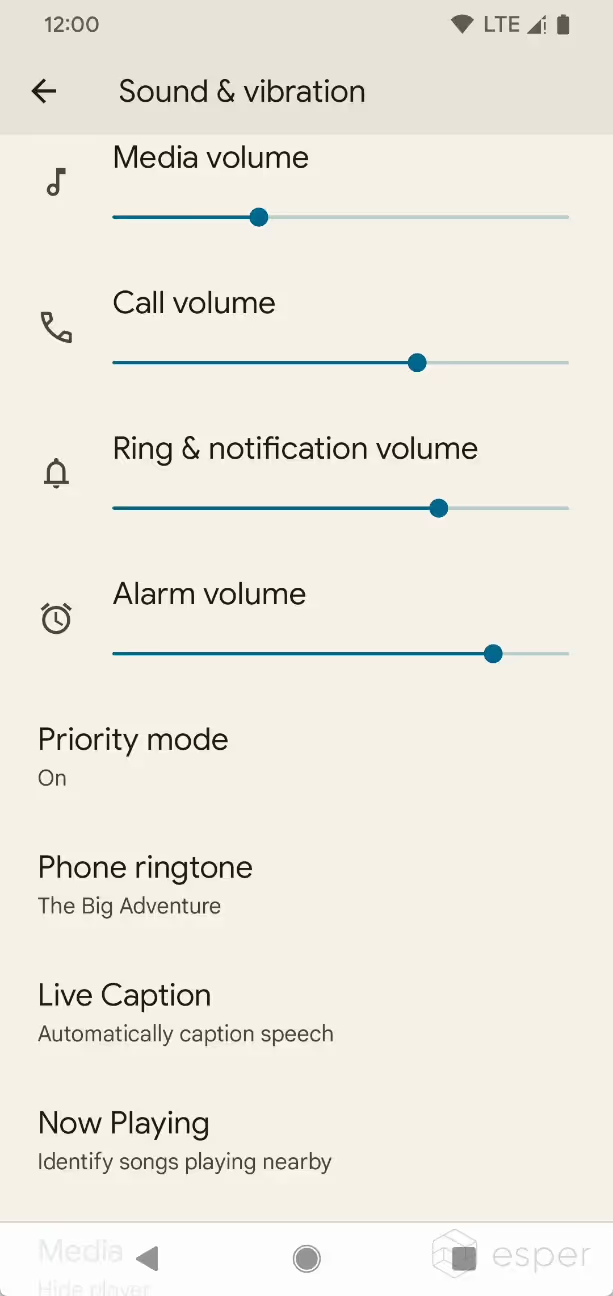

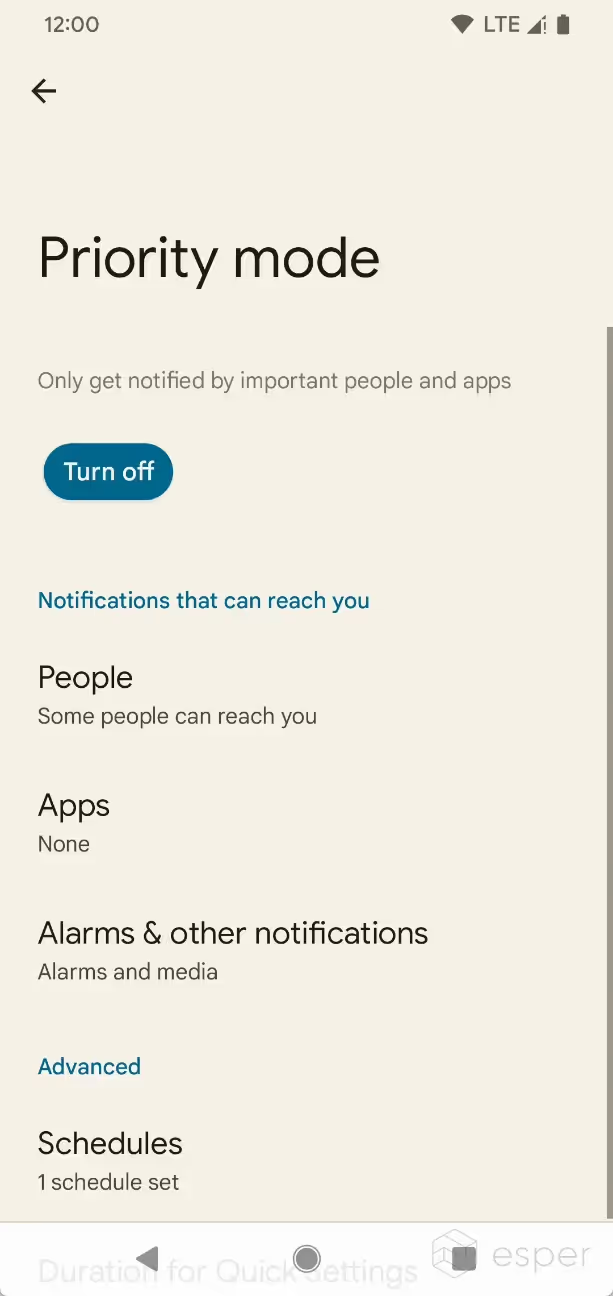

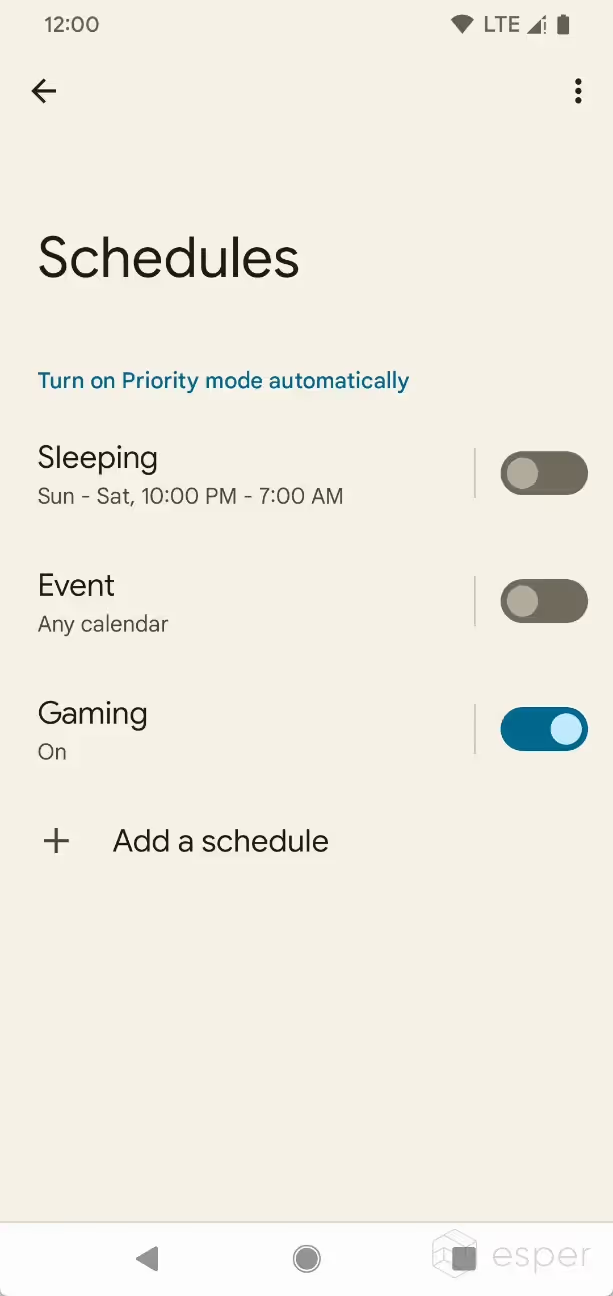

Do Not Disturb mode, the feature that lets users choose what apps and contacts can interrupt them, was renamed to Priority mode in Developer Preview 2. Apart from the branding change, the schedules page has been redesigned to use switches instead of toggles and now shows summaries for schedules and calendar events (instead of just whether they’re “on” or “off”). Schedules list the days and times for which they’re active, while calendar events show what events they’re triggered on.

Do Not Disturb mode was rebranded to Priority Mode in Developer Preview 2.

Android 13 Beta 1 brought back the original Do Not Disturb branding, so it seems that the “Priority mode” branding isn’t here to stay.

Enabling silent mode disabled all haptics

When setting the sound mode to “silent”, all haptics were disabled in the Android 13 developer previews, even those for interactions (such as gesture navigation). On Android 12L, “vibration & haptics” are similarly grayed out with a warning that says “vibration & haptics are unavailable because [the] phone is set to silent”, but in our testing, haptics for interactions still worked. This is not the case in the Android 13 developer previews, however. Fortunately, this change has been reverted in the Android 13 beta release.

What are the behavioral changes in Android 13?

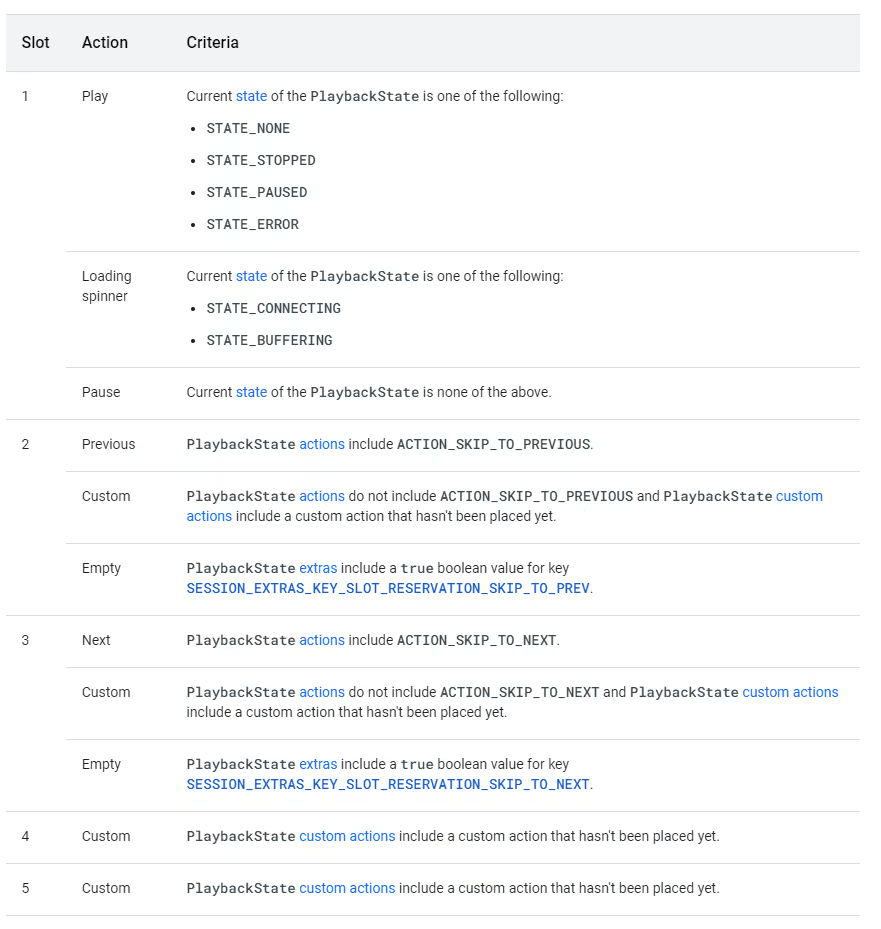

Media controls are now derived from PlaybackState

MediaStyle is a notification style used for media playback notifications. In its expanded form, it can show up to 5 notification actions which can be chosen by the media application. Prior to Android 13, the system displayed media controls based on the list of notification actions added to the MediaStyle notification.

Starting with Android 13, the system will derive media controls from PlaybackState actions rather than the MediaStyle notification. If an app doesn’t include a PlaybackState or targets an older SDK version, then the system will fall back to displaying actions from the MediaStyle notification. This change aligns how media controls are rendered across Android platforms.

Android 13 can show up to five action buttons based on the PlaybackState. In its compact state, the media notification will only show the first three action slots. The following table lists the action slots and the criteria the system uses to display each slot.

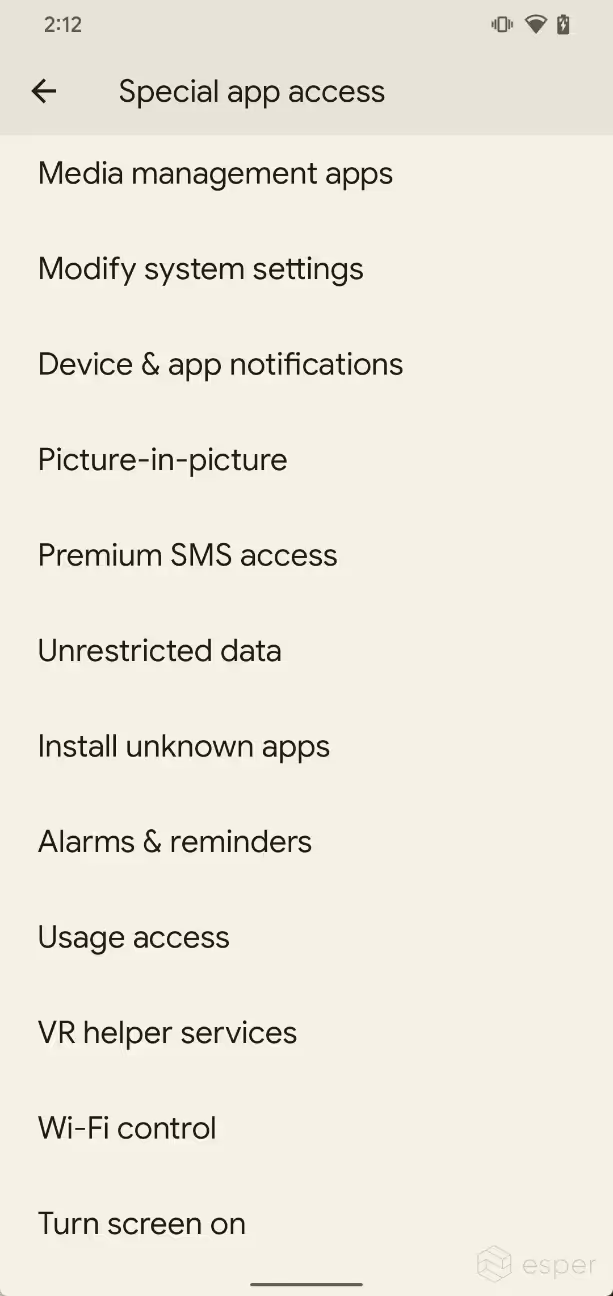

Control an app’s ability to turn on the screen

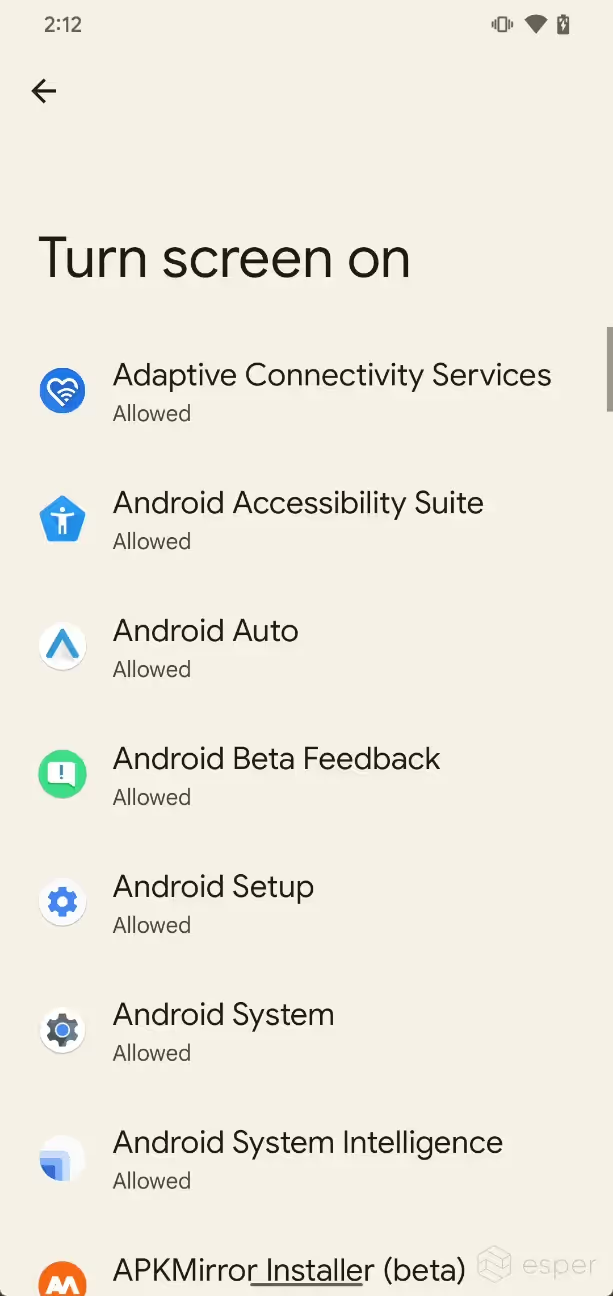

A new appop permission has been added to Android 13 that lets users control whether or not an application can turn on the screen. Users can go to “Settings > Apps > Special app access > Turn screen on” to choose which apps can turn the screen on. All apps that hold the WAKE_LOCK permission appear in this list, save for SystemUI.

Defer boot completed broadcasts for background restricted apps

Android allows applications to start up at boot by listening for the ACTION_BOOT_COMPLETED or ACTION_LOCKED_BOOT_COMPLETED broadcasts, which are both automatically sent by the system. Android also lets users place apps into a “restricted” state that limits the amount of work they can do while running in the background. However, apps placed in this “restricted” state are still able to receive the ACTION_BOOT_COMPLETED and ACTION_LOCKED_BOOT_COMPLETED broadcasts. This will change in Android 13.

Android 13 will defer the ACTION_BOOT_COMPLETED and ACTION_LOCKED_BOOT_COMPLETED broadcasts for apps that have been placed in the “restricted” state and are targeting API level 33 or higher. These broadcasts will be delivered after any process in the UID is started, which includes things like widgets or Quick Settings tiles.

App developers can test this behavior in one of two ways. First, developers can go to Settings > Developer options > App Compatibility Changes and enable the DEFER_BOOT_COMPLETED_BROADCAST_CHANGE_ID option. This is enabled by default for apps targeting Android 13 or higher. Alternatively, developers can manually change the device_config flag that controls this behavior as follows:

where N can be 0 (don’t defer), 1 (defer for all apps), 2 (defer for UIDs that are background restricted), or 4 (defer for UIDs that have targetSdkVersion T+).

These conditions can be combined by bit-OR-ing the values. By default, the flag is set to ‘6’ to defer the ACTION_BOOT_COMPLETED and ACTION_LOCKED_BOOT_COMPLETED broadcasts for all apps that are both background restricted and targeting Android 13.
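To make the bit arithmetic concrete, here is a minimal Java sketch of how such a flag could be evaluated. It is a model of the semantics described above, not the framework's code: the class, constant, and method names are hypothetical. It assumes that value 1 overrides everything else and that the condition bits (2 and 4) must all hold when they are combined.

```java
/** Hypothetical model of the deferral flag; constant names are illustrative. */
final class BootCompletedDeferralModel {
    static final int DEFER_NONE = 0;                  // don't defer
    static final int DEFER_ALL = 1;                   // defer for all apps
    static final int DEFER_BACKGROUND_RESTRICTED = 2; // UIDs that are background restricted
    static final int DEFER_TARGET_T = 4;              // UIDs with targetSdkVersion T+

    // 2 | 4 == 6, the default value mentioned in the article.
    static final int DEFAULT_FLAG = DEFER_BACKGROUND_RESTRICTED | DEFER_TARGET_T;

    static boolean shouldDefer(int flag, boolean backgroundRestricted, boolean targetsT) {
        if ((flag & DEFER_ALL) != 0) {
            return true;
        }
        boolean requireRestricted = (flag & DEFER_BACKGROUND_RESTRICTED) != 0;
        boolean requireTargetT = (flag & DEFER_TARGET_T) != 0;
        if (!requireRestricted && !requireTargetT) {
            return false; // no condition bits set, so nothing is deferred
        }
        // Every condition bit that is set must hold for the UID.
        return (!requireRestricted || backgroundRestricted)
                && (!requireTargetT || targetsT);
    }
}
```

With the default value, `shouldDefer(DEFAULT_FLAG, true, true)` is true, while an app that is background restricted but targets an older API level is not deferred.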

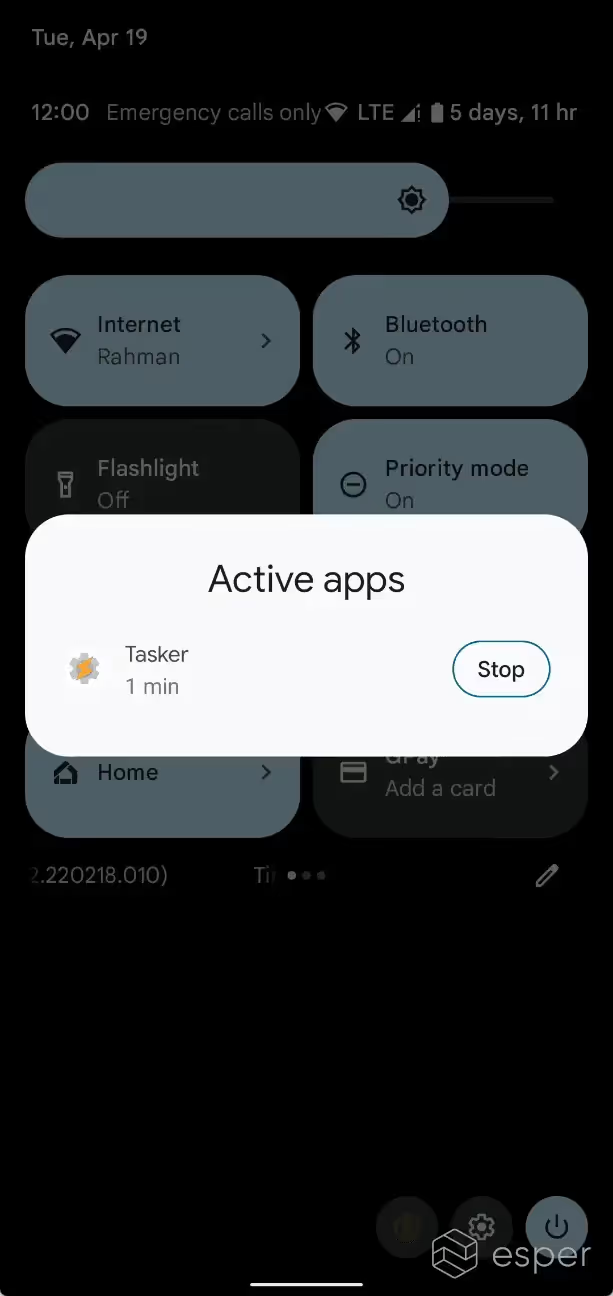

Foreground service manager and notifications for long-running foreground services

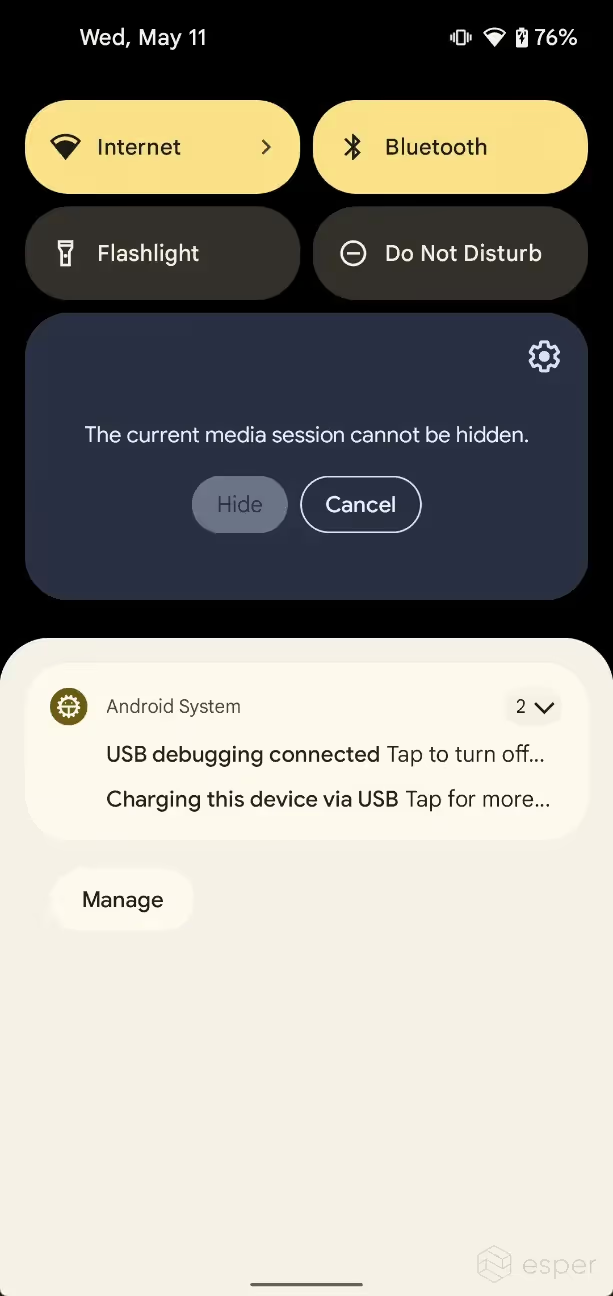

Android 13’s new Foreground Services (FGS) Task Manager shows the list of apps that are currently running a foreground service. This list, called Active apps, can be accessed by pulling down the notification drawer and tapping on the affordance. Each app will have a “stop” button next to it.

Left: an image of the expanded status bar with the foreground service manager at the bottom left.

Right: the foreground service manager dialog

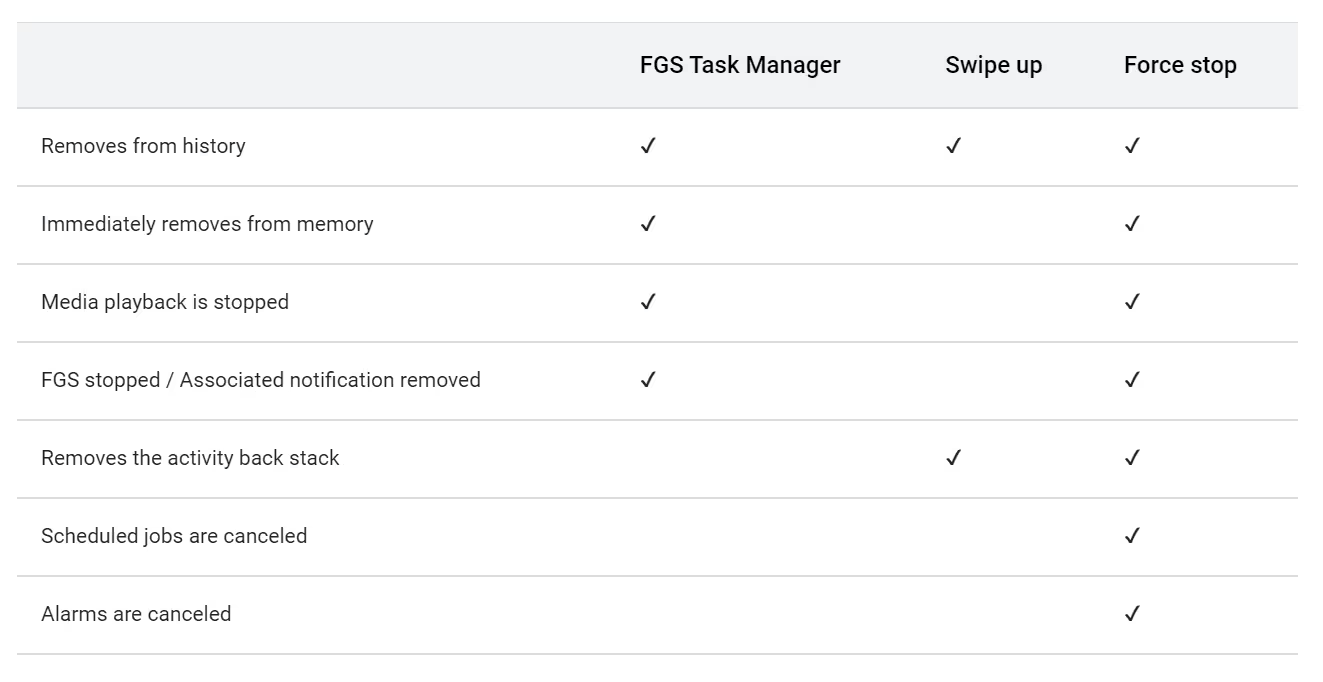

The FGS Task Manager lets users stop foreground services regardless of target SDK version. Here’s how stopping an app via FGS Task Manager compares to swiping up from the recents screen or pressing “force stop” in settings.

The system will send a notification to the user inviting them to interact with the FGS Task Manager after any app’s foreground service has been running for at least 20 hours within a 24-hour window. This notification will read as “[app] is running in the background for a long time. Tap to review.” However, it will not appear if the foreground service is of type FOREGROUND_SERVICE_TYPE_MEDIA_PLAYBACK or FOREGROUND_SERVICE_TYPE_LOCATION.
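The rule in the paragraph above boils down to a threshold check with two exemptions. The following Java sketch restates it under simplifying assumptions: the class, enum, and method names are hypothetical, running time is rounded to whole hours, and every service type other than media playback and location is lumped into a single `OTHER` value.

```java
/** Hypothetical model of the long-running foreground service notification rule. */
final class LongRunningFgsModel {
    static final long THRESHOLD_HOURS = 20; // minimum running time that triggers the notification
    static final long WINDOW_HOURS = 24;    // length of the observation window

    /** Simplified stand-in for the platform's foreground service types. */
    enum ServiceType { MEDIA_PLAYBACK, LOCATION, OTHER }

    static boolean shouldNotify(long hoursRunningInWindow, ServiceType type) {
        if (hoursRunningInWindow < 0 || hoursRunningInWindow > WINDOW_HOURS) {
            throw new IllegalArgumentException("hours must be within the 24-hour window");
        }
        // Media playback and location services are exempt from the notification.
        if (type == ServiceType.MEDIA_PLAYBACK || type == ServiceType.LOCATION) {
            return false;
        }
        return hoursRunningInWindow >= THRESHOLD_HOURS;
    }
}
```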

Certain applications are exempted from appearing in the FGS Task Manager. These include system-level apps, safety apps holding the ROLE_EMERGENCY role, and all apps when the device is in demo mode. Certain apps cannot be closed by the user even if they appear in the FGS Task Manager, including device owner apps, profile owner apps, persistent apps, and apps that have the ROLE_DIALER role.

With the addition of a dedicated space for foreground service notifications, Google says that Android 13 will let users dismiss foreground service notifications. After dismissing the notification, users will be able to find them in the FGS Task Manager. Also, whenever there’s a change in the list of running foreground service notifications, a dot appears next to the affordance hinting to the user that they should review the list once more.

For more information on the new system notification for long-running foreground services, visit this page. For more information on the new foreground services task manager, visit this page.

High-priority FCM quota decoupled from app standby buckets

FCM, short for Firebase Cloud Messaging, is the preferred API to deliver messages to GMS Android devices. Developers have the option to either send a notification message, which results in a notification being posted on behalf of the client app, a data message, which client apps can process and respond to in a number of ways, or a notification message with a data payload. Developers can set the priority of these messages to be “normal priority” or “high priority” depending on their needs. Normal priority messages are delivered immediately when the device is awake but may be delayed when the device is in doze mode, while high priority messages are delivered immediately, waking the device from doze mode if necessary.

Google Play Services, the system app that implements FCM on Android, is exempt from doze mode on GMS Android devices, which is how high priority FCM messages are able to be delivered immediately. Due to the potentially adverse effect that high priority FCM messages can have on battery life, FCM places some restrictions on message delivery in order to preserve battery life.

For example, FCM may not deliver messages to apps when there are too many messages pending for an app, when the device hasn’t connected to FCM in over a month, or when the app was manually put into the background restricted state by the user. In Android 9, Google introduced app standby buckets, further restricting the behavior of apps based on how recently and how frequently they’re used. Apps that are placed into the active or working set buckets face no restrictions to FCM message delivery, while apps placed in the frequent, rare, or restricted buckets have a daily quota of high priority FCM messages. This, however, changes in Android 13.

Android 13 decouples the high priority FCM quota from app standby buckets. As a result, developers sending high priority FCM messages that result in display notifications will see an improvement in the timeliness of message delivery, regardless of app standby buckets. However, Google warns that apps that don’t successfully post notifications in response to receiving high priority FCMs may see that some of their high priority messages have been downgraded to normal priority messages.

Job priorities

Android’s JobInfo API lets apps submit info to the JobScheduler about the conditions that need to be met for the app’s job to run. Apps can specify the kind of network their job requires, the charging status, the storage status, and other conditions. Android 13 expands these options with a job priority API, which lets apps indicate their preference for when their own jobs should be executed.

The scheduler uses the priority to sort jobs for the calling app, and it also applies different policies based on the priority. There are 5 priorities ranked from lowest to highest: PRIORITY_MIN, PRIORITY_LOW, PRIORITY_DEFAULT, PRIORITY_HIGH, and PRIORITY_MAX.

- PRIORITY_MIN: For tasks that the user should have no expectation or knowledge of, such as uploading analytics. May be deferred to ensure there’s sufficient quota for higher priority tasks.

- PRIORITY_LOW: For tasks that provide some minimal benefit to the user, such as prefetching data the user hasn’t requested. May still be deferred to ensure there’s sufficient quota for higher priority tasks.

- PRIORITY_DEFAULT: The default priority level for all regular jobs. These have a maximum execution time of 10 minutes and receive the standard job management policy.

- PRIORITY_HIGH: For tasks that should be executed lest the user think something is wrong. These jobs have a maximum execution time of 4 minutes, assuming all constraints are satisfied and the system is under ideal load conditions.

- PRIORITY_MAX: For tasks that should be run ahead of all others, such as processing a text message to show as a notification. Only Expedited Jobs (EJs) can be set to this priority.
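To illustrate how the five levels affect ordering within a single app, here is a minimal sketch in plain Java. It only models the sorting step described above. The class and method names are hypothetical, and the real JobScheduler also weighs constraints, quotas, and system load that this model ignores.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Simplified model of per-app job ordering by priority (hypothetical names).
final class JobPriorityDemo {
    // Declared from lowest to highest, mirroring PRIORITY_MIN..PRIORITY_MAX.
    enum Priority { MIN, LOW, DEFAULT, HIGH, MAX }

    static final class Job {
        final String name;
        final Priority priority;

        Job(String name, Priority priority) {
            this.name = name;
            this.priority = priority;
        }
    }

    // Returns job names with the highest priority first. List.sort is stable,
    // so jobs of equal priority keep their submission order.
    static List<String> runOrder(List<Job> jobs) {
        List<Job> sorted = new ArrayList<>(jobs);
        sorted.sort(Comparator.comparing((Job j) -> j.priority).reversed());
        List<String> names = new ArrayList<>();
        for (Job j : sorted) {
            names.add(j.name);
        }
        return names;
    }
}
```

In this model, an analytics upload marked MIN would be sorted behind a regular sync at DEFAULT, which in turn would be sorted behind a text message handler at MAX.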

Notifications for excessive background battery use

If an app has consumed a lot of battery life in the background over the past 24 hours, Android 13 will show a notification warning the user about the excessive background battery usage. Android will show this warning for any app in which the system detects high battery usage, regardless of target SDK version. If the app has a notification associated with a foreground service, though, the warning won’t be shown until the user dismisses the notification or the foreground service finishes, and only if the app continues to consume a lot of battery life. Once the warning has been shown for an app, it won’t appear for another 24 hours.

Android 13 measures an app’s impact on battery life by analyzing the work it does through foreground services, Work tasks (including expedited work), broadcast receivers, and background services.
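The conditions for showing the warning can be summarized in a few lines. This is a rough sketch in plain Java with hypothetical names. How the system decides that battery drain is "high" is not covered here, so that decision is passed in as a boolean.

```java
// Simplified model of the excessive background battery warning for one app.
// All names are hypothetical; this is not Android framework code.
final class BatteryWarningSketch {
    // Once shown, the warning does not reappear for 24 hours.
    static final long COOLDOWN_MS = 24L * 60 * 60 * 1000;

    private long lastShownAtMs = -1; // -1 means the warning was never shown

    // Returns true if the warning is shown at time nowMs.
    boolean maybeShow(long nowMs, boolean highBackgroundDrain,
                      boolean fgsNotificationVisible) {
        // No warning without high drain, and none while the app's foreground
        // service notification is still visible to the user.
        if (!highBackgroundDrain || fgsNotificationVisible) {
            return false;
        }
        // Respect the 24-hour cooldown after a previous warning.
        if (lastShownAtMs >= 0 && nowMs - lastShownAtMs < COOLDOWN_MS) {
            return false;
        }
        lastShownAtMs = nowMs;
        return true;
    }
}
```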

Prefetch jobs that run right before an app’s launch

Apps can use Android’s JobScheduler API to schedule jobs that should run sometime in the future. The Android framework decides when to execute the job, but apps can submit info to the scheduler specifying the conditions under which the job should be run. Apps can mark jobs as “prefetch” jobs using JobInfo.Builder.setPrefetch() which tells the scheduler that the job is “designed to prefetch content that will make a material improvement to the experience of the specific user of the device.” The system uses this signal to let prefetch jobs opportunistically use free or excess data, such as “allowing a JobInfo#NETWORK_TYPE_UNMETERED job run over a metered network when there’s a surplus of metered data available.” A job to fetch top headlines of interest to the current user is an example of the kind of work that should be done using prefetch jobs.

In Android 13, the system will estimate the next time an app will be launched so it can run prefetch jobs prior to the next app launch. Internally, the UsageStats API has been updated with an EstimatedLaunchTimeChangedListener, which is used by PrefetchController to subscribe to updates for when the system thinks the user will next launch the app. If a prefetch job hasn’t started by the time the app has been opened (i.e., is on TOP), then the job is deferred until the app has been closed. Apps cannot get around this by scheduling a prefetch job with a deadline, as apps targeting Android 13 are not allowed to set deadlines for prefetch jobs. Prefetch jobs are allowed to run for apps with active widgets, though.

The Android Resource Economy

With every new release, Google further restricts what apps running in the background can do, and Android 13 is no exception. Instead of creating a foreground service, Google encourages developers to use APIs like WorkManager, JobScheduler, and AlarmManager to queue tasks, depending on when the task needs to be executed and whether the device has access to GMS. For the WorkManager API in particular, there’s a hard limit of 50 tasks that can be scheduled. While the OS does intelligently decide when to run tasks, it does not intelligently decide how many tasks an app can queue or whether a certain task is more necessary to run.

Starting in Android 13, however, a new system called The Android Resource Economy (TARE) will manage how apps queue tasks. TARE will delegate “credits” to apps that they can then “spend” on queuing tasks. The total number of “credits” that TARE will assign (called the “balance”) depends on factors such as the current battery level of the device, whereas the number of “credits” it takes to queue a task will depend on what that task is for.

From a cursory analysis, it seems that the EconomyManager in Android’s framework lists how many Android Resource Credits each job takes, the maximum number of credits in circulation for AlarmManager and JobScheduler respectively, and other information pertinent to TARE. For example, the following “ActionBills” are listed, alongside how many “credits” it takes to queue a task:

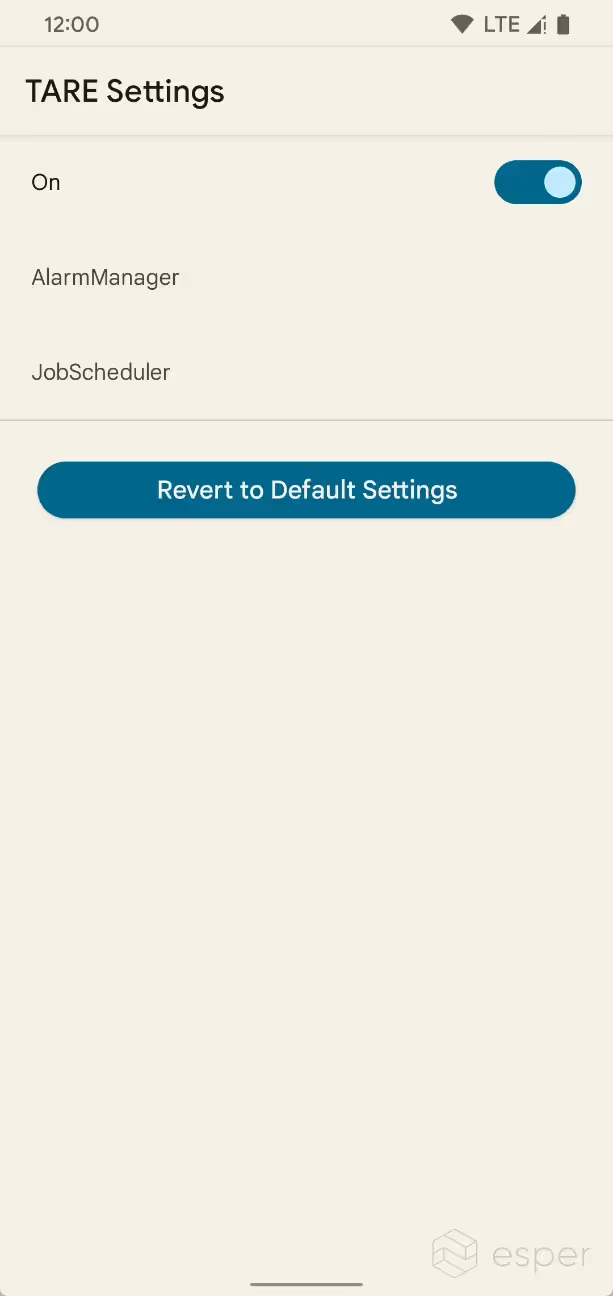

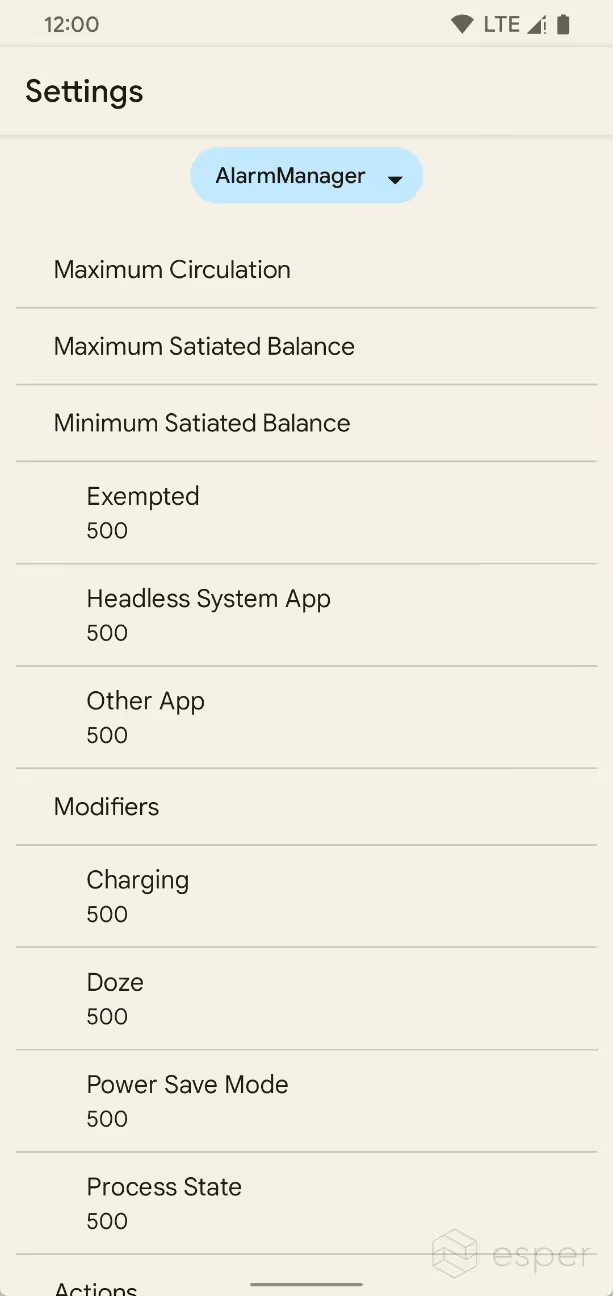

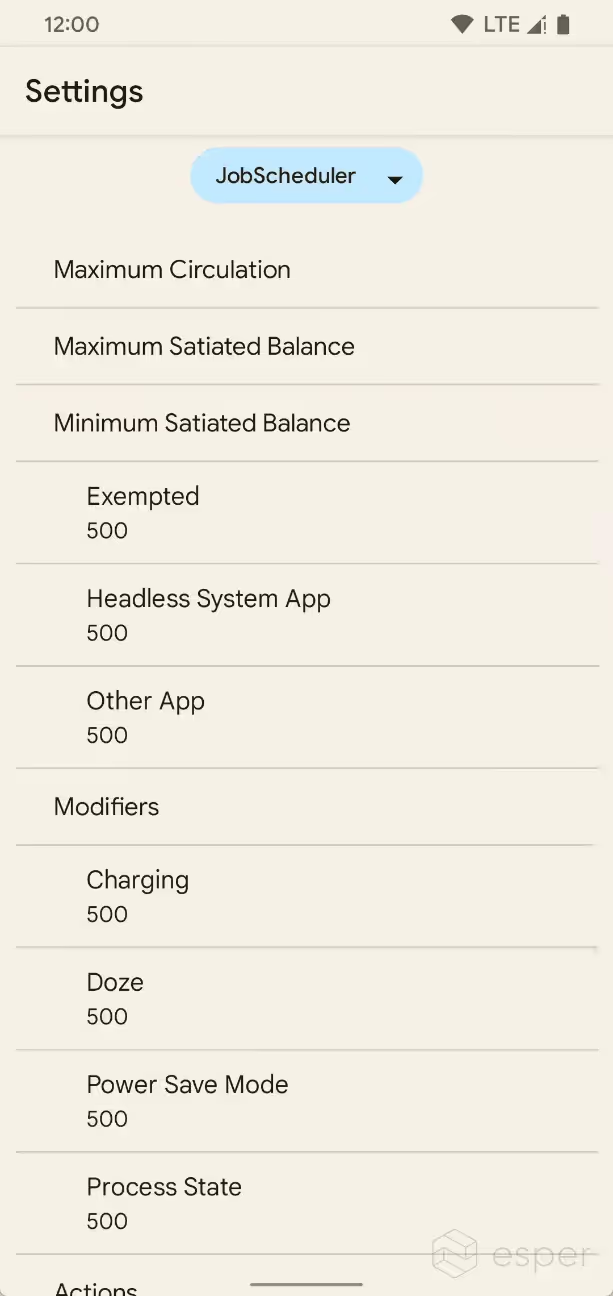

TARE is controlled by the Settings.Global.enable_tare boolean, while the AlarmManager and JobScheduler constants are stored in Settings.Global.tare_alarm_manager_constants and Settings.Global.tare_job_schedule_constants respectively. TARE settings can also be viewed in Developer Options. Starting in Beta 1, the TARE settings page also supports editing the system’s parameters directly, without the use of the command line.

Also in Beta 1 is a big revamp to the way TARE works under the hood. One of the biggest changes in the first Beta is the separation of “supply” from “allocation” of Android Resource Credits. Previously, the credits that apps could accrue to “spend” on tasks were limited by the “balances” already accrued by other apps. There was a “maximum circulation” of credits that limited how many credits could be allocated to all apps. The “maximum circulation” has been removed and replaced with a “consumption limit” that limits the credits that can be consumed across all apps within a single discharge cycle. This lets apps accrue credits regardless of the balances of other apps. The consumption limit scales with the battery level, so the lower the battery level, the fewer actions that can be performed.
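The difference between per-app balances and the shared consumption limit is easier to see in code. The following is a toy model in plain Java, not TARE's actual accounting: every name and number is hypothetical, and the linear scaling of the limit with battery percentage is an assumption made purely for illustration.

```java
// Toy model of a shared "consumption limit" that scales with battery level.
// Names, numbers, and the linear scaling are assumptions for illustration.
final class TareSketch {
    private final long fullBatteryLimit; // credits spendable per cycle at 100%
    private long consumedThisCycle = 0;  // credits spent by all apps so far

    TareSketch(long fullBatteryLimit) {
        this.fullBatteryLimit = fullBatteryLimit;
    }

    // Assumed linear scaling: a lower battery level yields a lower limit.
    long consumptionLimit(int batteryPercent) {
        return fullBatteryLimit * batteryPercent / 100;
    }

    // An action goes through only if the app can afford it AND the shared
    // limit for the current discharge cycle has room left.
    boolean trySpend(long appBalance, long cost, int batteryPercent) {
        if (cost > appBalance) {
            return false; // the app itself lacks credits
        }
        if (consumedThisCycle + cost > consumptionLimit(batteryPercent)) {
            return false; // the system-wide limit is exhausted
        }
        consumedThisCycle += cost;
        return true;
    }
}
```

Note that an app's balance is checked independently of every other app's balance. Only the total amount already consumed in the cycle is shared, which is the point of replacing the old “maximum circulation”.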

Updated rules for putting apps in the restricted App Standby Bucket

Android 9 introduced App Standby Buckets, which define what restrictions are placed on an app based on how recently and how frequently the app is used. Android 9 launched with 4 buckets: active, working set, frequent, and rare. Android 12 introduced a fifth bucket called restricted, which holds apps that consume a great deal of system resources or exhibit undesirable behavior. Once placed into the restricted bucket, apps can only run jobs once per day in a 10-minute batched session, run fewer expedited jobs, and invoke one alarm per day. Unlike with the other buckets, these restrictions apply even when the device is charging but are loosened if the device is idle and on an unmetered network.

Android 13 updates the rules that the system uses to decide whether to place an app in the restricted App Standby Bucket. If an app exhibits any of the following behavior, then the system places the app in the bucket:

- The user doesn’t interact with the app for 8 days.

- The app invokes too many broadcasts or bindings in a 24-hour period.

- The app drains a significant amount of battery life during a 24-hour period. The system looks at work done through jobs, broadcast receivers, and background services when deciding the impact on battery life. The system also looks at whether the app’s process has been cached in memory.

If the user interacts with the app in one of a number of ways, then the system will take the app out of the restricted bucket and put it into a different bucket. The user may tap on a notification sent by the app, perform an action in a widget belonging to the app, affect a foreground service by pressing a media button, connect to the app through Android Automotive OS, or interact with another app that binds to a service of the app in question. If the app has a visible PiP window or is active on screen, then it is also removed from the restricted bucket.

Apps that meet the following criteria are exempted from entering the restricted bucket in the first place:

- Has active widgets

- Has the SCHEDULE_EXACT_ALARM, ACCESS_BACKGROUND_LOCATION, or ACCESS_FINE_LOCATION permission

- Has an in-progress and active MediaSession

All system and system-bound apps, companion device apps, apps running on a device in demo mode, device owner apps, profile owner apps, persistent apps, VPN apps, apps with the ROLE_DIALER role, and apps that the user has explicitly designated to provide “unrestricted” functionality in settings are also exempted from entering the restricted bucket (and all other battery-preserving measures introduced in Android 13).
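Taken together, the entry rules and the three bulleted exemptions can be sketched as a pair of predicates. This is a simplified model in plain Java with hypothetical names. It leaves out the broader exemption list in the previous paragraph (system apps, device owner apps, VPN apps, and so on), and it takes "too many broadcasts or bindings" and "significant battery drain" as precomputed booleans because the article does not give thresholds for them.

```java
// Simplified model of the restricted App Standby Bucket rules.
// All names are hypothetical; this is not Android framework code.
final class RestrictedBucketSketch {
    static final int IDLE_DAYS_THRESHOLD = 8;

    static final class AppState {
        int daysSinceLastInteraction;
        boolean excessiveBroadcastsOrBindings; // within a 24-hour period
        boolean significantBatteryDrain;       // within a 24-hour period
        boolean hasActiveWidgets;
        // SCHEDULE_EXACT_ALARM, ACCESS_BACKGROUND_LOCATION,
        // or ACCESS_FINE_LOCATION
        boolean hasExemptPermission;
        boolean hasActiveMediaSession;
    }

    static boolean isExempt(AppState s) {
        return s.hasActiveWidgets || s.hasExemptPermission
                || s.hasActiveMediaSession;
    }

    static boolean shouldRestrict(AppState s) {
        if (isExempt(s)) {
            return false;
        }
        // Any single trigger is enough to place the app in the bucket.
        return s.daysSinceLastInteraction >= IDLE_DAYS_THRESHOLD
                || s.excessiveBroadcastsOrBindings
                || s.significantBatteryDrain;
    }
}
```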

Hardware camera and microphone toggle support

Android 12 added toggles in Quick Settings and Privacy settings to enable or disable camera and microphone access for all apps. Developers can call the SensorPrivacyManager API introduced with Android 12 to check if either toggle is supported on the device, and in Android 13, this API has been updated so developers can check whether the device supports a software or hardware toggle.

Hardware switches for camera and microphone access are typically not found on smartphones, but they do appear in many television and smart display products. These devices may have 2-way or 3-way hardware switches on the product itself or on a remote, but in Android 12, the toggle state of these switches wouldn’t be reflected in Android’s built-in camera and microphone toggles. This, however, will change in Android 13, which supports propagating the hardware switch state.

Devices with hardware camera and microphone switches should set the ‘config_supportsHardwareCamToggle’ and ‘config_supportsHardwareMicToggle’ framework values to ‘true’.

Non-matching intents are blocked

Prior to Android 13, apps could send an intent to an exported component of another app even if the intent didn’t match an <intent-filter> element in the receiving app. This made it the responsibility of the receiving app to sanitize the intent, but many apps didn’t. To tighten security, Android 13 will block non-matching intents that are sent to apps targeting Android 13 or higher, regardless of the target SDK version of the app sending the intent. This essentially makes intent filters actually act like filters for explicit intents.

Android 13 will not enforce intent matching if the component doesn’t declare any <intent-filter> elements, if the intent originates from within the same app, or if the intent originates from the system UID or root user.
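The blocking rule and its exceptions reduce to a single boolean expression. Below is a minimal sketch in plain Java. The class, method, and parameter names are hypothetical, and the actual filter matching is abstracted into a boolean because it happens inside the system.

```java
// Simplified model of Android 13's intent matching enforcement.
// All names are hypothetical; this is not Android framework code.
final class IntentMatchSketch {
    static final int ANDROID_13_API_LEVEL = 33;

    static boolean isBlocked(int receiverTargetSdk,
                             boolean componentDeclaresFilters,
                             boolean intentMatchesAFilter,
                             boolean sentFromSameApp,
                             boolean sentBySystemOrRoot) {
        // Enforcement depends on the RECEIVING app's target SDK only.
        boolean enforced = receiverTargetSdk >= ANDROID_13_API_LEVEL
                && componentDeclaresFilters
                && !sentFromSameApp
                && !sentBySystemOrRoot;
        return enforced && !intentMatchesAFilter;
    }
}
```

The sender's target SDK version is deliberately absent from the parameter list, since the rule applies no matter which SDK version the sending app targets.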

One-time access to device logs

Through the logcat command line tool accessed through ADB shell, developers can read the low-level system log files that record what’s happening to apps and services on the device. This is immensely useful for debugging and is why logcat integration is a key feature of Android Studio. Android apps can also read these low-level system log files through the logcat command, but they must hold the android.permission.READ_LOGS permission in order to do so. This permission has a protection level of signature|privileged|development, hence it can be granted to apps that were signed by the same certificate as the framework, have been added to a priv-app allowlist file, or were manually granted the permission via the ‘pm grant’ command. Once granted this permission, apps can read low-level system log files without restrictions.