Google I/O 2022 concluded earlier this week. The developer conference is Google’s biggest event of the year, and it was packed with keynotes and technical sessions containing new information on Android and Google’s other products and services. Given how important Google I/O is to Android developers at both the application and platform levels, this post recaps all of the major (and many minor) announcements from the conference.

Note that Google I/O isn’t strictly about announcing brand-new products and services, as many teams take the opportunity to summarize what they’ve been working on over the past several months. To keep this post from becoming too lengthy, it focuses only on things that have never been announced before. If you want the full rundown of everything Google discussed at I/O, both old and new, you’re welcome to check out the full I/O program and read the posts on the Android Developers and Google Developers blogs just as I did, assuming you have a lot of free time to kill. If you don’t, then keep reading.

What's new in Android?

Android 13 Beta 2

Google launched the second beta of Android 13 at Google I/O, right on schedule. The beta is available for all supported Pixel devices, namely the Pixel 4 and newer. Users and developers alike can enroll in the Android Beta program to receive the release over the air on their Pixel devices. Multiple OEMs, including ASUS, Lenovo, OnePlus, OPPO, Realme, and Xiaomi, also announced their own Developer Preview/Beta programs.

Following the release of Android 13 Beta 2, I took a look at what’s new and summarized the changes in my Android 13 deep dive article. Here are a few of the more important changes Google announced with Beta 2:

- Bandwidth throttling can now be configured in developer options

- BLE Audio’s broadcast audio feature is now supported

- Control an app’s ability to turn on the screen

- Google Play will eventually restrict which apps can use the permission to schedule exact alarms

- Per-app language settings are back, and developers can specify which languages their apps support

- Predictive back gesture navigation

- Settings for spatial audio and head tracking

- Unified Security & Privacy settings page
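Predictive back works because apps declare ahead of time whether they will handle back, instead of intercepting the event only when the gesture completes. In the Android 13 platform API, apps register callbacks through `OnBackInvokedDispatcher.registerOnBackInvokedCallback(priority, callback)`, and the dispatcher delivers the event to the highest-priority, most recently registered callback. The plain-Kotlin sketch below models only that dispatch order; `BackDispatcherModel` is a hypothetical class, not the platform implementation, and returning `false` stands in for the system handling back itself.

```kotlin
// Hypothetical model of predictive-back dispatch order. It mirrors the
// documented rule (higher priority first; within a priority, the most
// recently registered callback) but is NOT the android.window implementation.
class BackDispatcherModel {
    private data class Entry(val priority: Int, val order: Long, val callback: () -> Unit)

    private val entries = mutableListOf<Entry>()
    private var nextOrder = 0L

    /** Registers [callback]; returns a function that unregisters it. */
    fun register(priority: Int, callback: () -> Unit): () -> Unit {
        val entry = Entry(priority, nextOrder++, callback)
        entries.add(entry)
        return { entries.remove(entry) }
    }

    /**
     * Delivers a back event to exactly one callback. Returns false when
     * nothing is registered, meaning the system would handle back itself
     * (for example, with the back-to-home animation).
     */
    fun dispatchBack(): Boolean {
        val target = entries.maxWithOrNull(compareBy<Entry>({ it.priority }, { it.order }))
            ?: return false
        target.callback()
        return true
    }
}
```

Because only one callback receives the event, an app that wants the system's back-to-home preview should unregister its callback whenever it has nothing left to handle.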

Android Studio

Google released Android Studio Dolphin Beta and Android Studio Electric Eel Canary at Google I/O.

Android Studio Dolphin Beta includes the following features and improvements:

- Viewing Compose animations and coordinating them with Animation Preview. You can also freeze a specific animation.

- Defining annotation classes with multiple Compose preview definitions and using them to generate previews. This can be used to preview multiple devices, fonts, and themes at once without repeating definitions for every composable.

- Tracking recomposition counts for composables in the Layout Inspector. Recomposition counts and skip counts can also be shown in the Component Tree and Attributes panels.

- The Wear OS Emulator Pairing Assistant shows Wear Devices in the Device Manager and enables simplified pairing of multiple watch emulators with a single phone. Wear-specific emulator buttons can simulate main buttons, palm buttons, and tilt buttons. Tiles, watch faces, and complications can be launched directly from Android Studio by creating run/debug configurations.

- Diagnosing app issues with Logcat V2, the ground-up rebuilt Logcat tool with new formatting that makes it easier to find useful information.

- To simplify instrumentation tests, Gradle Managed Devices has been introduced. After describing the virtual devices as part of the build, Gradle will handle SDK downloading, device provisioning and setup, test execution, and teardown.
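The recomposition and skip counts in the list above reflect Compose's ability to skip a composable whose inputs haven't changed. As a rough mental model only (real Compose makes this decision in compiler-generated code and also depends on parameter stability), the hypothetical `SkippableNode` below re-runs its body only when its input differs from the previous one and counts each outcome:

```kotlin
// Hypothetical mental model of recomposition and skip counts.
// Real Compose makes skipping decisions in compiler-generated code and
// also requires stable parameter types; this class only compares inputs.
class SkippableNode<T>(private val body: (T) -> Unit) {
    var recompositions = 0
        private set
    var skips = 0
        private set
    private var composed = false
    private var lastInput: T? = null

    /** Called each time the parent recomposes and passes [input] down. */
    fun update(input: T) {
        when {
            !composed -> composed = true                  // initial composition, not counted
            input == lastInput -> { skips++; return }    // unchanged input: body skipped
            else -> recompositions++
        }
        body(input)
        lastInput = input
    }
}
```

A composable whose recomposition count climbs while its skip count stays near zero is the kind of hotspot the Layout Inspector is meant to surface.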

Android Studio Electric Eel Canary previews the following features:

- Viewing dependency insights from the Google Play SDK Index, including Lint warnings for SDKs that have been marked as outdated.

- Seeing Firebase Crashlytics reports within the context of your local source code using the new App Quality Insights window.

- Using the new resizable Android Emulator to test configuration changes in a single build. The resizable emulator can be created by selecting the “Resizable” type in the Device Manager’s “Create device” flow.

- Using Live Edit to have code changes made to composables immediately reflected in the Compose Preview and in the app running on the Android Emulator or a connected device.

- Opening the Layout Validation panel to check for Visual Lint issues.

- Emulating Bluetooth connections in the Android Emulator (31.3.8+) on Android 13 builds.

- Mirroring your device’s display directly to Android Studio so you can interact with your device from the Running Devices window. To enable this feature, go to Preferences > Experimental and select Device Mirroring, then plug in your device to begin streaming.

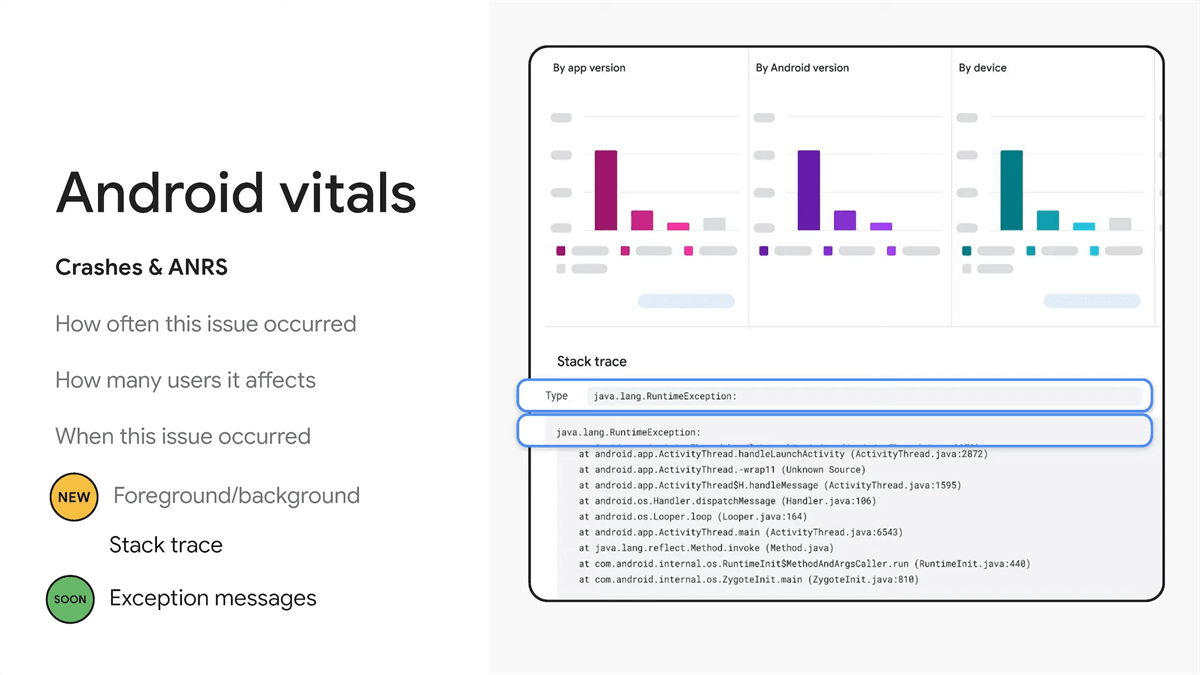

Android Vitals

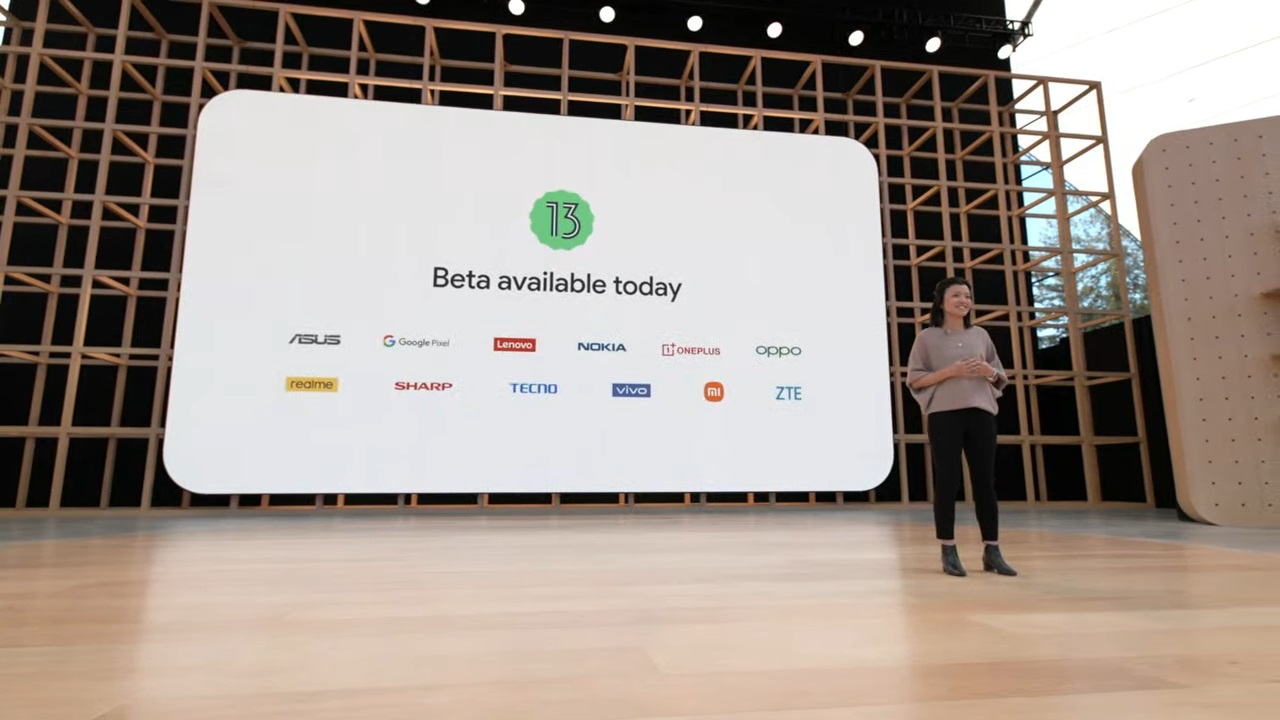

Google announced in the “app quality on Google Play” session that it’s bringing Android Vitals outside the Google Play Console. The new Reporting API offers programmatic access to three years of historical data for the four core Vitals metrics, along with issues data, including crash and ANR rates, counts, clusters, and stack traces.
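Vitals expresses crash rates relative to daily active users. To make that concrete, the sketch below computes a simplified daily rate (the share of active users who hit at least one crash) from raw events. The `CrashEvent` type and the input shape are hypothetical and unrelated to the Reporting API's actual response format.

```kotlin
// Hypothetical input: one record per crash, tagged with an anonymous user ID.
data class CrashEvent(val userId: String, val day: String)

/**
 * Simplified daily crash rate: distinct users with at least one crash on
 * [day], divided by that day's active users. Returns a fraction in [0, 1].
 */
fun dailyCrashRate(events: List<CrashEvent>, day: String, dailyActiveUsers: Int): Double {
    require(dailyActiveUsers > 0) { "need at least one active user" }
    val crashedUsers = events.asSequence()
        .filter { it.day == day }
        .map { it.userId }
        .toSet()   // a user who crashes twice still counts once
        .size
    return crashedUsers.toDouble() / dailyActiveUsers
}
```

Counting distinct users rather than raw crashes keeps one badly affected device from dominating the metric.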

Furthermore, Google is working on making it easier to use Android Vitals with other crash reporting tools, especially Crashlytics from Firebase. You can now link your Play app and your Crashlytics app to see Play track information in Crashlytics. Soon, issue clusters will have the same name in both Vitals and Crashlytics, making it easier to use both products together. In addition, key vitals will soon be surfaced in the Crashlytics dashboard if you’ve linked accounts.

Lastly, Vitals can now show whether a crash happened in the foreground or background. You can also see the stack trace for a crash, though the exception message is redacted because it might contain PII. Soon, however, Vitals will show exception messages while keeping user data private by redacting any PII they contain.
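Google hasn't said how Vitals will redact PII. Purely to illustrate the idea, the hypothetical `redactPii` function below masks email addresses and long digit runs in an exception message using regular expressions; production redaction would need far broader coverage than two patterns.

```kotlin
// Hypothetical illustration only: NOT how Google redacts Vitals data.
private val EMAIL = Regex("""[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}""")

// Runs of 7+ digits (phone numbers, account numbers, and similar IDs).
private val LONG_DIGITS = Regex("""\d{7,}""")

fun redactPii(message: String): String =
    message
        .replace(EMAIL, "[email]")
        .replace(LONG_DIGITS, "[number]")
```

Short numbers such as array indices are left alone, which keeps messages like "Index 3 out of bounds" useful for debugging.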

ARCore

Google has introduced the new ARCore Geospatial API. It enables developers to build features on top of Google Maps’ innovative global localization feature, which is how Live View is able to blend the digital and physical worlds so seamlessly. Developers from companies like Lime, Telstra, Accenture, DOCOMO, and Curiosity are already making use of the Geospatial API, which is available wherever Street View is available.

Background work in Android

In the “best practices for running background work on Android” talk, Google developers mostly focused on sharing tips on how to run background work while considering the constraints of mobile hardware. However, they also talked about some new changes in Android 13, including the updated rules the system follows for putting apps into the restricted App Standby Bucket, the new job priorities API, the ability to schedule prefetch jobs that the system runs right before an app’s launch, improvements to expedited jobs, and the foreground service task manager.

I covered many of these changes in my Android 13 deep dive, but there was one change that I didn’t spot: the decoupling of the high-priority FCM quota from app standby buckets. In Android 13, developers sending high-priority messages that result in display notifications will see an improvement in the timeliness of message delivery, regardless of app standby buckets. Apps that don’t successfully post notifications in response to high-priority FCM messages, however, may see some of their high-priority messages downgraded to normal priority.

During the talk, Google alluded to the addition of The Android Resource Economy (TARE) but didn’t go into any detail. Google said that, for a long time, Android has effectively allowed for infinite work even though mobile devices have finite resources, and that it will tweak its policies to better reflect those limits.
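Since Google didn't explain how TARE works, the following is only a conceptual sketch of the "finite resources" idea: a hypothetical ledger gives each app a credit balance that regenerates over time, charges a cost per unit of background work, and defers work the app can't afford. The class name, costs, cap, and regeneration rule are all invented for illustration.

```kotlin
// Hypothetical credit ledger illustrating a "resource economy".
// Costs, caps, and regeneration are invented; this is not TARE.
class CreditLedger(private val maxBalance: Long, private val regenPerHour: Long) {
    private val balances = mutableMapOf<String, Long>()

    /** New apps start with a full balance. */
    fun balanceOf(app: String): Long = balances.getOrPut(app) { maxBalance }

    /** Credits accrue over time but never exceed [maxBalance]. */
    fun elapse(hours: Long) {
        for (app in balances.keys.toList()) {
            balances[app] = minOf(maxBalance, balances.getValue(app) + regenPerHour * hours)
        }
    }

    /** Charges [cost] and returns true if affordable; otherwise the work is deferred. */
    fun tryRun(app: String, cost: Long): Boolean {
        val balance = balanceOf(app)
        if (balance < cost) return false
        balances[app] = balance - cost
        return true
    }
}
```

The cap matters as much as the regeneration rate: it stops an idle app from banking enough credit to run unbounded work later.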

Camera API

In the “what’s new in Android Camera” talk, Google continued pushing developers to use the CameraX library, touting new improvements to the API. These include a new preview stabilization feature in Android 13, available to both CameraX and Camera2, which developers can use to improve the quality of the preview output stream. This lets apps avoid discrepancies between what a user sees in the preview and the image they actually capture; in other words, what you see is what you get (WYSIWYG).

Meanwhile, the introduction of jitter reduction will provide smoother previews by syncing the camera frames displayed during preview with the camera sensor output, avoiding dropped frames. Apps can also use stream configurations at 60fps in addition to 30fps.

Google also talked about its plans to improve the Extensions API for CameraX. Google currently supports five extensions: Bokeh, HDR, Night, Face Retouch, and Auto. Google says it will launch new extensions and experimental features in CameraX first, since CameraX can be updated more quickly (it’s a support library rather than a platform API, after all).

Furthermore, Google outlined what it’ll do when an OEM doesn’t provide its own extensions. Google currently works with OEMs to provide extensions to the Camera APIs, but for devices that don’t have an extension enabled (such as many entry-level devices), Google will provide its own. These will be pure software implementations, unlike OEM extensions, which are hardware-optimized. The goal is to bring extensions to entry-level devices, starting with a bokeh portrait extension in CameraX.

Google also talked about the need to build platform support for HDR video capture. HDR provides a richer video experience by allowing for more vibrant colors, brighter highlights, and darker shadows, but HDR capture support is typically limited to just the native camera app. Android 13 standardizes support for HDR capture in the platform and exposes those capabilities to developers, with HDR10 as the base HDR format. This is a feature I already covered, but Google’s talk goes into a bit more detail.

In addition to HDR capture support, Android 13 also introduces new developer APIs for capturing and accessing HDR buffers. Since transcoding and editing HDR videos can be difficult, Google is adding support for these use cases to the Media3 Jetpack library. The new transformer APIs will let apps tonemap HDR videos to SDR, for example.
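Tone mapping compresses HDR's wider brightness range into what an SDR display can show. Google didn't say which operator Media3 uses, so the sketch below substitutes the classic extended Reinhard operator applied to linear luminance. The 100-nit SDR white and 1,000-nit HDR peak defaults are assumptions chosen for illustration.

```kotlin
/**
 * Extended Reinhard tone mapping (a stand-in; not Media3's algorithm).
 * Maps linear luminance in nits to a relative SDR value in [0, 1].
 * [hdrPeakNits] maps exactly to 1.0; anything brighter clips.
 */
fun reinhardToSdr(
    nits: Double,
    sdrWhiteNits: Double = 100.0,
    hdrPeakNits: Double = 1000.0,
): Double {
    require(nits >= 0 && sdrWhiteNits > 0 && hdrPeakNits > sdrWhiteNits)
    val l = nits / sdrWhiteNits             // luminance relative to SDR white
    val lWhite = hdrPeakNits / sdrWhiteNits // relative luminance that maps to 1.0
    val mapped = l * (1 + l / (lWhite * lWhite)) / (1 + l)
    return mapped.coerceIn(0.0, 1.0)
}
```

The curve is nearly linear for dark values and rolls off smoothly toward the peak, which preserves shadow detail while compressing highlights.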

Speaking of the Media3 Jetpack library, Google will update it later this year to make it easier for developers to support HDR in their apps and to take advantage of the underlying hardware. Google announced that in collaboration with Qualcomm, developers will be able to utilize the HDR capabilities of premium devices with Snapdragon chipsets through Media3.

Firebase

The Firebase team at Google I/O introduced Firebase Extension events, which let developers extend the functionality of extensions with their own code. New third-party extensions for marketing, search, and payment processing were introduced from Snap, Stream, RevenueCat, and Typesense. These extensions are now available in the Firebase Emulator Suite.

Firebase web apps built with Next.js and Angular Universal can now be deployed with a single command: ‘firebase deploy’. Support for other web frameworks is coming in the future.

All Firebase plugins for Flutter have been moved to general availability, complete with documentation, code snippets, and customer support.

Crashlytics support for Flutter apps has been updated to drastically reduce the number of steps it takes to get started, log on-demand fatal errors in apps and receive those alerts from Crashlytics, and group Flutter crashes more intuitively.

Firebase also added better support for the Swift language, App Check integration with the new Play Integrity API, new alerts in Performance Monitoring, and new features in App Distribution, including simplified group access, auto-deletion, bulk tester management, and the ability to notify testers of a new version from within the app.

Flutter

Flutter 3 was launched at Google I/O, adding stable support for macOS and Linux desktop apps, improvements to Firebase integration, much faster compilation speeds on Apple Silicon Macs, the Casual Games Toolkit, and other new productivity and performance features. Flutter 3 also coincides with the release of Dart SDK version 2.17.

Google Assistant

The Assistant SDK lets developers create App Actions, which enable the user to speak simple voice commands to launch key app functionality. During the “Google Assistant functionality across Android devices” session at Google I/O, Google announced that it will soon expand App Actions support to Wear OS. The company says that health and fitness built-in intents (BIIs) used in existing mobile apps will be enabled on Wear OS devices, so users can trigger the same expected integrations.

Google is also expanding developer access to the Shortcuts API for Android for Cars, making it easier to integrate voice access in apps built for Android Auto and Android Automotive.

Meanwhile, smarter custom intents enable the Assistant to better detect broader instances of user queries through machine learning, but without any natural language understanding (NLU) heavy lift.

Lastly, Google announced Brandless Queries and App Install Suggestions, two features that are available for apps with App Actions. If a user asks the Assistant to do some action that your app can handle, but the user didn’t mention the name of your app in their query or they don’t have your app installed, the Assistant can still help them. With Brandless Queries, the Assistant can infer what app would best fulfill the user’s request and route the user to that app. With App Install Suggestions, the Assistant will direct users to your app’s Play Store listing to encourage them to install the app to access that functionality.
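The decision the Assistant makes for Brandless Queries and App Install Suggestions can be pictured as a simple routing step. Google hasn't published its ranking logic, so the sketch below is hypothetical: it routes a capability (such as a built-in intent name) to the best-rated installed app that declares it, and otherwise suggests a Play Store listing from a catalog of apps that support it. The types and the rating-based ranking are assumptions.

```kotlin
// Hypothetical routing model; the Assistant's real ranking is not public.
data class AppInfo(val packageName: String, val capabilities: Set<String>, val rating: Double)

sealed class Route {
    data class OpenApp(val packageName: String) : Route()
    data class SuggestInstall(val packageName: String) : Route()
    object NoMatch : Route()
}

fun routeBrandlessQuery(capability: String, installed: List<AppInfo>, catalog: List<AppInfo>): Route {
    // Brandless Queries: prefer an installed app that handles the capability.
    installed.filter { capability in it.capabilities }
        .maxByOrNull { it.rating }
        ?.let { return Route.OpenApp(it.packageName) }
    // App Install Suggestions: fall back to a Play Store listing.
    catalog.filter { capability in it.capabilities }
        .maxByOrNull { it.rating }
        ?.let { return Route.SuggestInstall(it.packageName) }
    return Route.NoMatch
}
```

For example, a query mapped to `actions.intent.START_EXERCISE` would open an installed fitness app that declares that intent, or otherwise point the user to one in the Play Store.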

Google Home & Matter

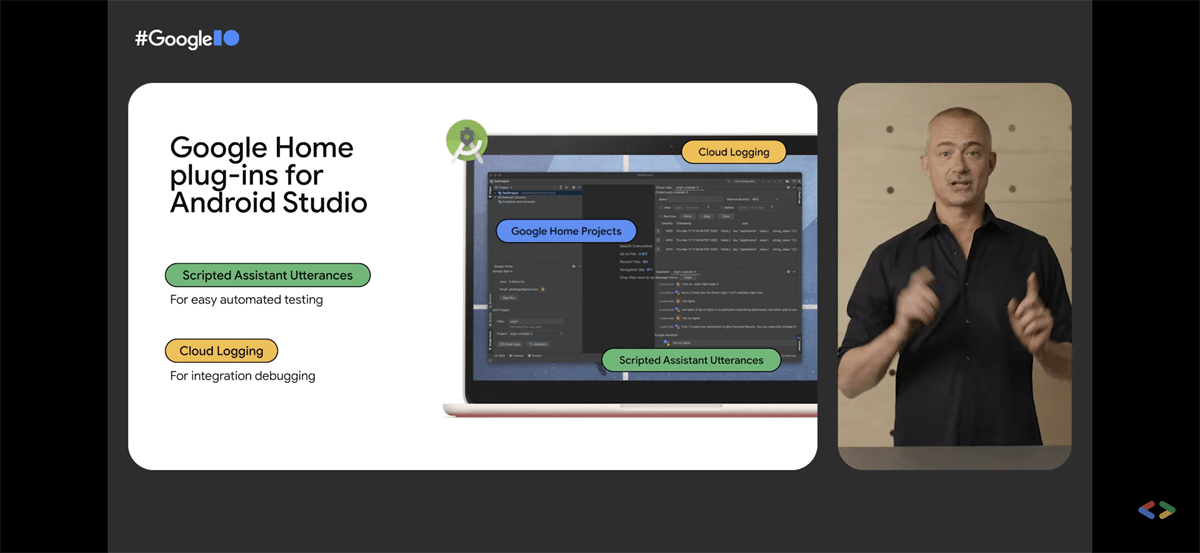

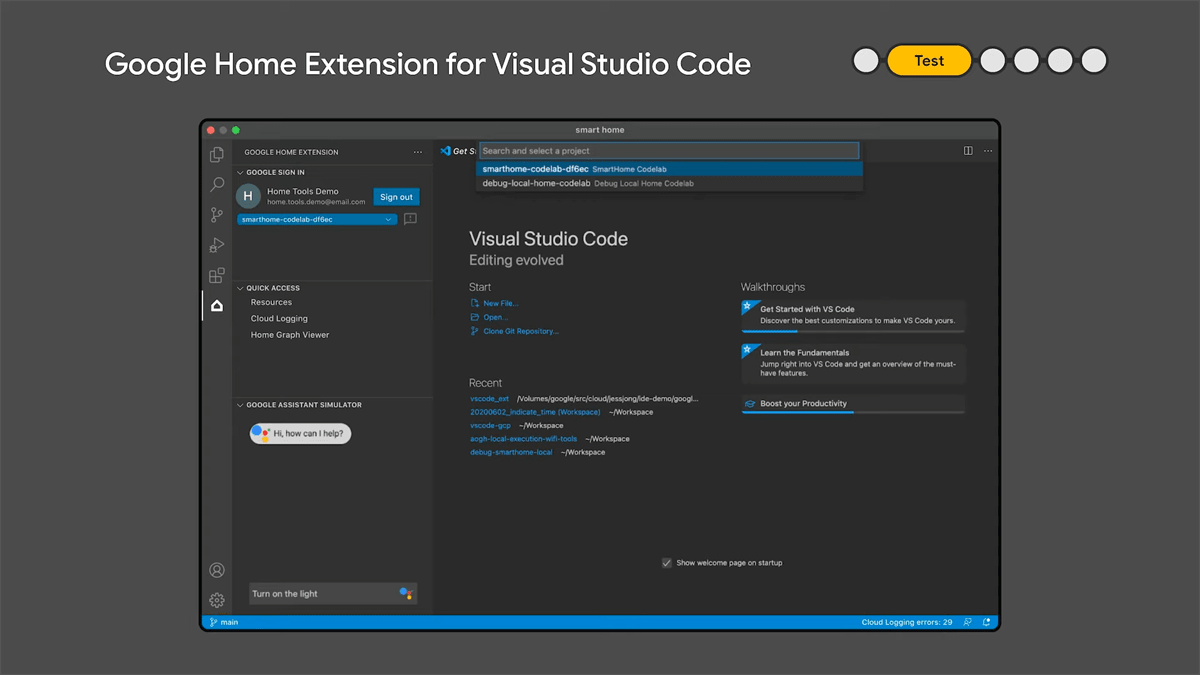

At the “building smart home apps with Google Home Mobile SDK” and “building, testing, and deploying smart home devices for Google Home” talks at Google I/O, Google announced a set of solutions to help developers build high-quality mobile apps for the smart home. These initiatives include the Google Home Developer Center, a one-stop shop for Google Home developers to learn and build apps, new Matter APIs in Google Play Services to easily commission and share Matter devices with Google Home and other apps and platforms, and new Google Home Tools in Android Studio to build and test apps.

The Developer Center has Google Home plugins for Android Studio and Visual Studio Code. These plugins let you type commands for Google Assistant directly into the editor to test how your apps and devices interact with Google Home. They also let you review cloud logs in real time so you can debug your integration more easily.

At Google I/O, Google revealed how developers can build apps and devices that use Matter. Matter promises easy setup, interoperability, and standardization for the smart home industry. It relies on local connectivity protocols like Thread and Wi-Fi.

To help developers build apps using Matter, Google provides the Google Home Sample Application for Matter, which developers can use as a reference for their own smart home applications. It shows how to use the new Google Play Services APIs for Matter. The app acts as a commissioning client, letting you commission a smart home device to the Google Fabric. (In the Matter protocol, a fabric is a shared domain of trust, established via a common set of cryptographic keys, among devices in the home network that enables them to communicate with each other.) Once a device has been added, it can be shared with fabrics owned by other apps, which enables Matter’s multi-admin feature: a temporary commissioning window is opened so the device can be commissioned to an additional fabric. Apps can register as a commissioner by adding an intent filter.
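The multi-admin flow described above boils down to a small state machine: a device belongs to one or more fabrics, and it accepts a new fabric only while an existing admin has opened a time-limited commissioning window. The sketch below is a hypothetical in-memory model of that rule, not the Google Play Services Matter API, and it omits the cryptography that actually establishes trust. The timeout is a plain parameter here; the Matter specification defines the allowed range.

```kotlin
// Hypothetical model of Matter multi-admin; no cryptography, no real API.
class MatterDeviceModel(firstFabric: String) {
    private val fabrics = linkedSetOf(firstFabric)
    private var windowClosesAtMillis: Long? = null

    val joinedFabrics: Set<String> get() = fabrics

    /** An existing admin opens a temporary commissioning window. */
    fun openCommissioningWindow(byFabric: String, nowMillis: Long, timeoutMillis: Long) {
        require(byFabric in fabrics) { "only an existing admin can open the window" }
        windowClosesAtMillis = nowMillis + timeoutMillis
    }

    /** Another ecosystem tries to commission the device onto its own fabric. */
    fun commission(newFabric: String, nowMillis: Long): Boolean {
        val closesAt = windowClosesAtMillis ?: return false
        windowClosesAtMillis = null // window closes on expiry or after one join
        if (nowMillis >= closesAt) return false
        fabrics.add(newFabric)
        return true
    }
}
```

The time limit is the security property: outside a window that an existing admin deliberately opened, no new controller can join.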

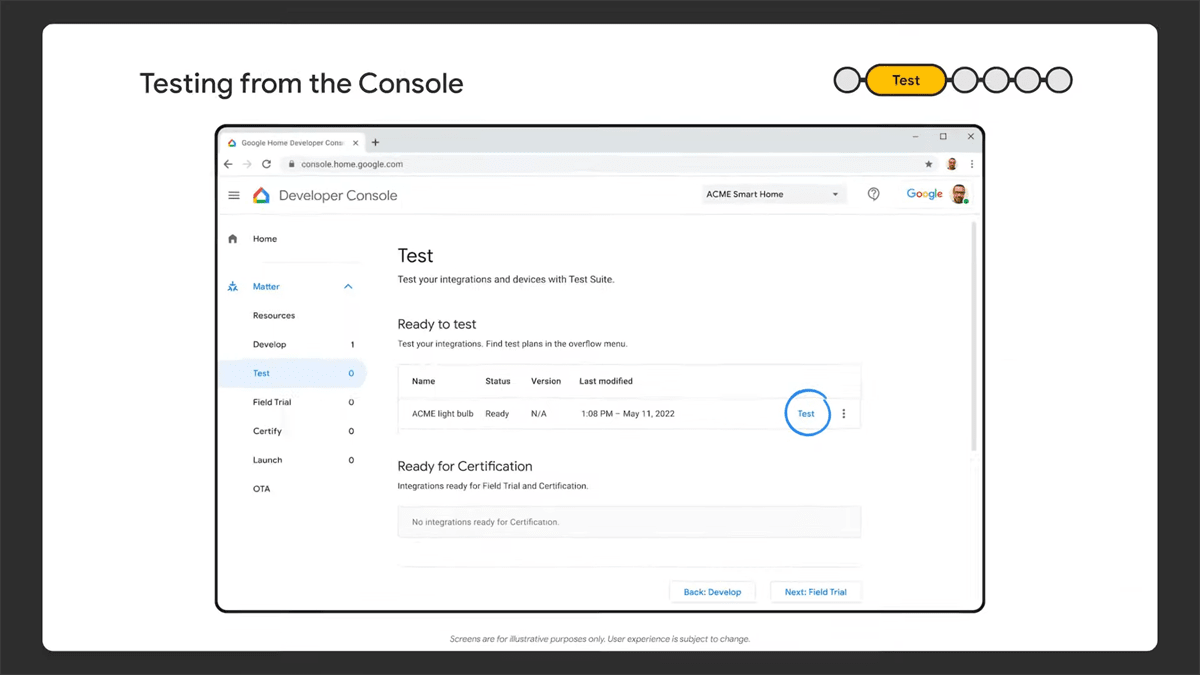

The Google Home Test Suite on the Developer Console will let you see all the devices you’ve integrated with Matter, configure a development or certification test, and start the test. You can then dogfood your product by running field trials, which the Console helps you plan and execute. After submitting your field trial, the Google Home team will review and approve your plan. Then you can start shipping your beta devices to your testers, and once you’re confident your device is market ready, you need to go through the Matter certification process with the Connectivity Standards Alliance. You will need to follow Google’s validation process to use the extra services Google offers, such as device firmware updates, the Works with Google Home badge, and intelligence clusters.

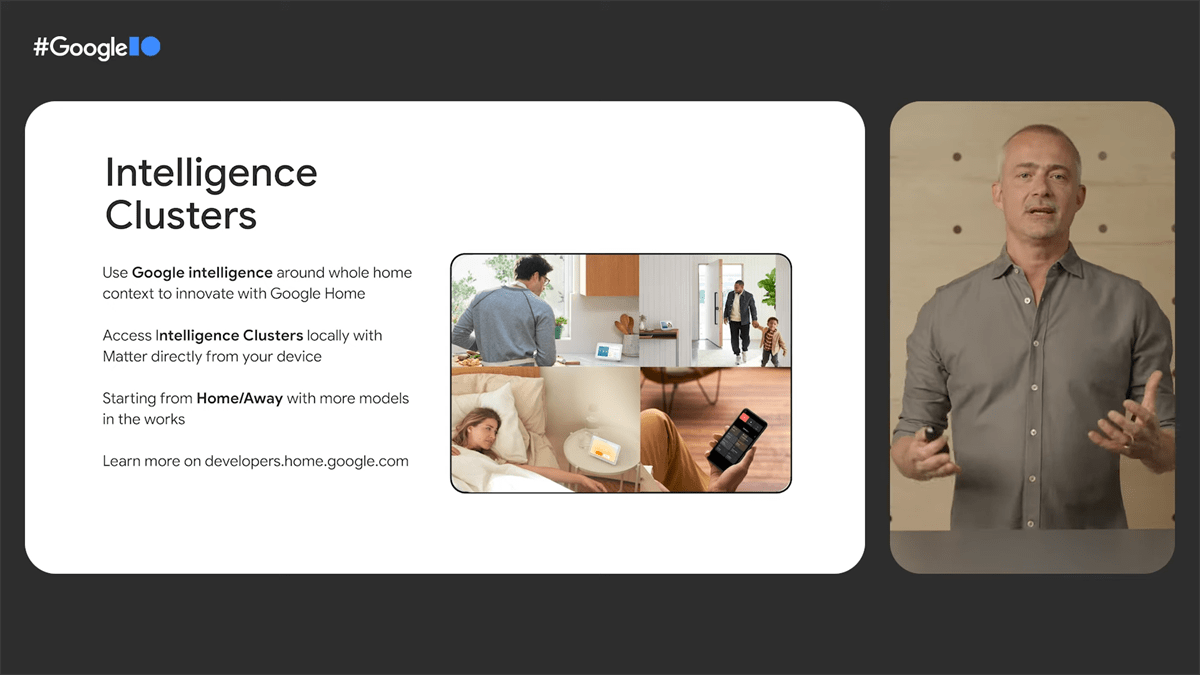

Intelligence clusters let developers access Google Intelligence about the home locally and directly on Matter devices, using a similar structure to clusters within Matter. Google’s Home/Away intelligence is used by millions of users to power routines already, and developers will be able to build their own automations based on home presence. Google will be providing a device SDK that abstracts away the low-level connectivity and permissioning. Google is building guardrails into intelligence clusters so that user information is encrypted and processed locally, and only with the user’s consent.

The Google Home Developer Center is available today, while the Google Home Developer Console will launch in June along with the Matter API.

Google Play Console

The Google Play Console has been overhauled to show install data for all device models, complete with filtering options, so you can quickly see what’s important at a glance. You can also better differentiate between product variants and see more details on the targeting status of each device model (e.g., whether it’s partially supported or partially excluded). There are also new filters for on-device attributes like OpenGL extensions and shared libraries. The new details page for each device has dynamic filters for exploring variants based on the device attributes you’re interested in.

In addition, Reach & devices now supports revenue metrics, so you can decide where to invest your efforts. For example, if only a small fraction of your revenue comes from devices with low-RAM hardware, you can decide whether it’s worth continuing to support those devices. You can also view revenue growth trends for a region to plan ahead, for example to decide whether to go forward with a rewrite.
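The low-RAM example above is simple arithmetic once revenue is broken down by device attribute. The sketch below aggregates hypothetical per-device revenue rows by a RAM cutoff; the row format and the 2 GB default cutoff are assumptions, not the Play Console's export schema.

```kotlin
// Hypothetical revenue rows; not the Play Console export format.
data class DeviceRevenue(val model: String, val ramMb: Int, val revenueMicros: Long)

/** Share of total revenue (0..1) from devices with RAM below [cutoffMb]. */
fun lowRamRevenueShare(rows: List<DeviceRevenue>, cutoffMb: Int = 2048): Double {
    val total = rows.sumOf { it.revenueMicros }
    if (total == 0L) return 0.0
    val lowRam = rows.filter { it.ramMb < cutoffMb }.sumOf { it.revenueMicros }
    return lowRam.toDouble() / total
}
```

Keeping amounts in integer micros avoids floating-point drift while summing; only the final ratio is a `Double`.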

Google Play Store

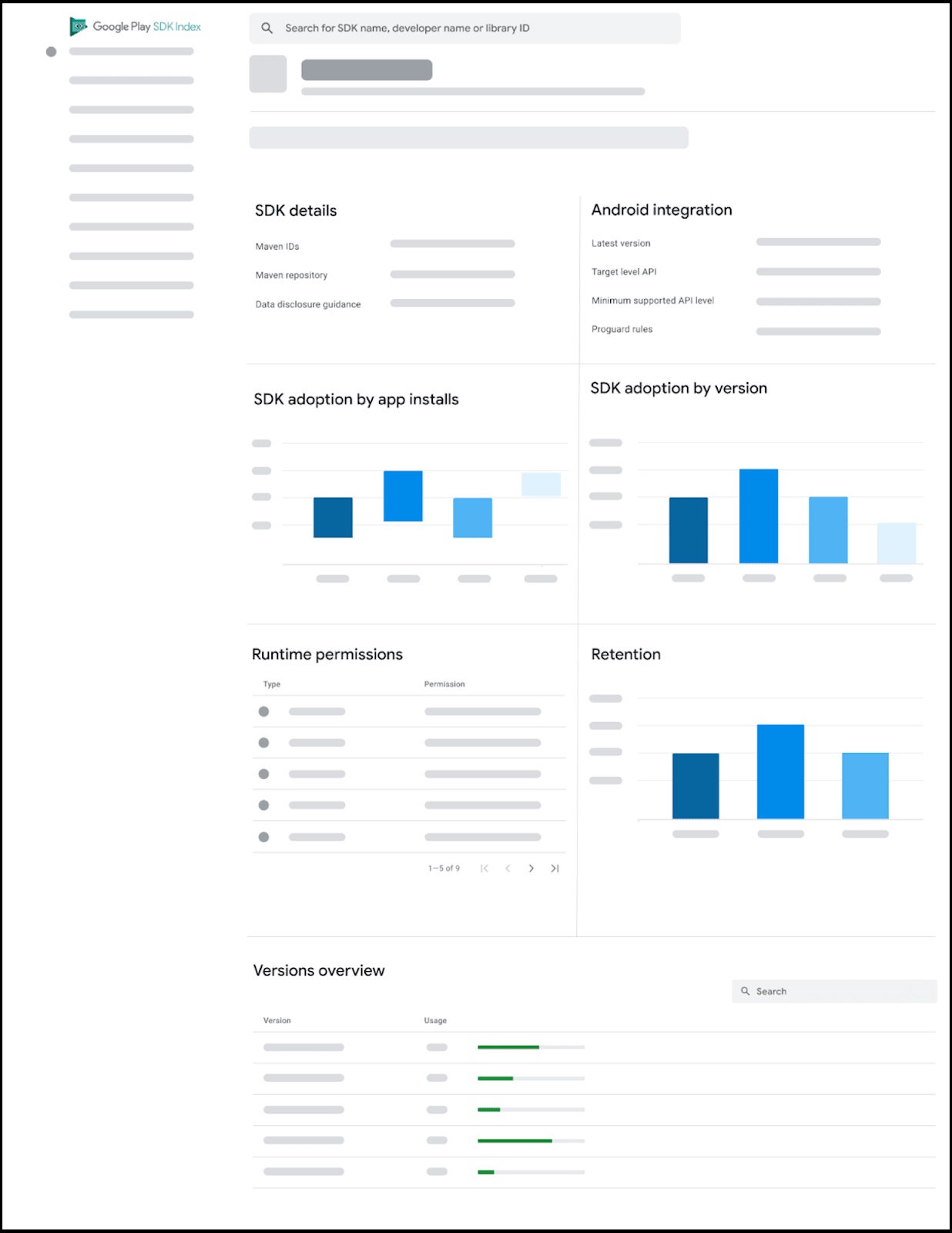

Google launched the Google Play SDK Index at Google I/O, a public portal that lists over 100 of the most popular commercial SDKs. The index also offers information on each SDK in the list, including what permissions they use, version adoption rates, retention metrics, and whether the SDK provider has committed to ensuring their code follows Google Play policies. SDK providers can also provide information on which versions are outdated or have critical issues and link to data safety guidance on what data their SDKs collect and why.

Play App Signing will be using Google Cloud Key Management to protect your app’s signing keys. Google will be using Cloud Key Management for all newly generated keys first before securely migrating eligible existing keys. Soon, developers using Play App Signing will be able to perform an annual app signing key rotation from within the Google Play Console. Google Play Protect will verify the integrity of app updates signed using a rotated key on older Android releases (back to Android Nougat) that don’t support key rotation.

Google recently launched the Play Integrity API, which builds upon SafetyNet Attestation and other signals to detect fraudulent activity, such as traffic from modded or pirated apps that may be running on rooted or compromised devices.
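Apps typically send the integrity token to their own server, decode it there, and then decide how to respond. The verdict field names below (`appRecognitionVerdict`, `deviceRecognitionVerdict`, `appLicensingVerdict`) and their example values mirror Google's Play Integrity documentation. However, the data classes, the omitted decoding step, and the allow/limit/deny policy are a hypothetical sketch, since each app chooses its own policy.

```kotlin
// Hypothetical, already-decoded subset of a Play Integrity verdict.
data class IntegrityVerdict(
    val appRecognitionVerdict: String,          // e.g. "PLAY_RECOGNIZED"
    val deviceRecognitionVerdict: List<String>, // e.g. ["MEETS_DEVICE_INTEGRITY"]
    val appLicensingVerdict: String,            // e.g. "LICENSED"
)

enum class Decision { ALLOW, LIMIT, DENY }

/** Example server-side policy; how strict to be is each app's choice. */
fun decide(v: IntegrityVerdict): Decision = when {
    // Binary not recognized by Google Play (e.g. a modded or re-signed build).
    v.appRecognitionVerdict != "PLAY_RECOGNIZED" -> Decision.DENY
    // Device may be rooted or otherwise compromised.
    "MEETS_DEVICE_INTEGRITY" !in v.deviceRecognitionVerdict -> Decision.LIMIT
    v.appLicensingVerdict != "LICENSED" -> Decision.LIMIT
    else -> Decision.ALLOW
}
```

Degrading functionality (`LIMIT`) instead of blocking outright is a common choice, since legitimate users can occasionally fail device checks.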

Developers can now create up to 50 custom store listings on Google Play, each with analytics and unique deep links. A new Google Play Console page dedicated to deep links will launch soon, and it’ll be filled with information and tools about your app’s deep links. When running a Store Listing Experiment, the feature will now show results more quickly and with more transparency and control.

LiveOps is a new feature that lets you submit content to be considered for featuring on the Play Store. It’s currently in beta, and Google is accepting applications for the tool.

For developers building apps for Android Automotive and Wear OS, Google is opening up the ability to independently run internal and open testing. This is available now for Android Automotive and coming soon for Wear OS.

For developers using the in-app updates API to inform users that there’s an update available, the API will now inform users of an update within 15 minutes instead of within 24 hours.

Other changes included in Play Billing Library 5.0:

- Support for more payment methods in Play Commerce. Google has added over 300 local payment methods in 70 countries, and added eWallet payment methods like MerPay in Japan, KCP in Korea, and Mercado Pago in Mexico.

- The ability to set ultra-low price points to better reflect local purchasing power.

- New subscription capabilities and a new Console UI that separates what the benefits of the subscription are from how the subscription is sold. Multiple base plans and offers can be configured.

- New prepaid plans that let users access subscriptions for a fixed amount of time.

- Opening up the in-app messaging API so apps can prompt users to update their payment method when it’s been declined.

Google Wallet & Payments

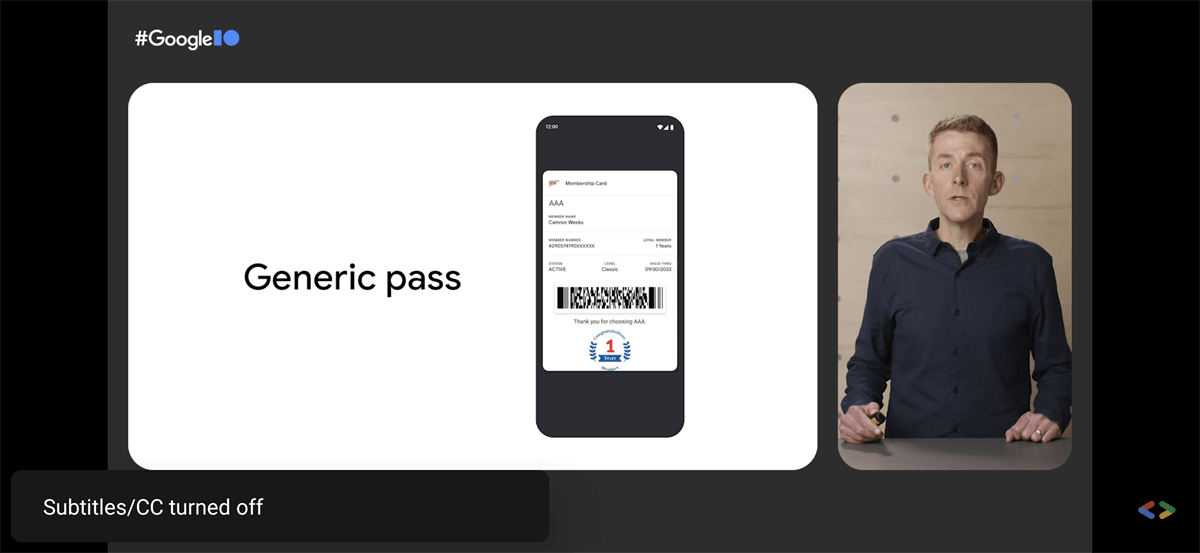

The new Google Wallet API — formerly called the Google Pay API for Passes — now supports storing generic passes, as announced in the “introducing Google Wallet and developer API features” talk at Google I/O. The generic passes API lets developers digitize any kind of pass, which the user can add by scanning a QR or barcode.

Businesses can control the look and design of the card by providing a card template with a maximum of three rows each with up to three fields per row. Google will soon launch a trial mode for passes that gives developers instant access to the passes sandbox environment so they can develop and test the Google Wallet API while waiting for approval for their service account.
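The layout limit for generic pass templates (at most three rows, each with up to three fields) is easy to check before calling the API. The `CardRow` type and `validateTemplate` function below are hypothetical and don't correspond to the Google Wallet API's actual classes or template JSON structure.

```kotlin
// Hypothetical template model; the real API uses its own JSON schema.
data class CardRow(val fieldIds: List<String>)

const val MAX_ROWS = 3
const val MAX_FIELDS_PER_ROW = 3

/** Returns a list of problems; an empty list means the layout fits the limits. */
fun validateTemplate(rows: List<CardRow>): List<String> {
    val problems = mutableListOf<String>()
    if (rows.size > MAX_ROWS) problems.add("too many rows: ${rows.size} > $MAX_ROWS")
    rows.forEachIndexed { i, row ->
        if (row.fieldIds.size > MAX_FIELDS_PER_ROW) {
            problems.add("row $i has ${row.fieldIds.size} fields > $MAX_FIELDS_PER_ROW")
        }
    }
    return problems
}
```

Returning every problem at once, rather than failing on the first, makes it easier to fix a template in one pass.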

Google Pay already supports storing offers, loyalty cards, gift cards, event tickets, boarding passes, transit tickets, and vaccine cards. In addition to these, Wallet will let users save and access hotel keys, office badges, and ID cards like student IDs and digital driver’s licenses. Google says that it is working with state governments in the U.S. and governments around the world to bring digital driver’s licenses to Wallet later this year.

Wallet uses Android’s Identity Credential API to store and retrieve digital driver’s licenses. Devices launching with Android 13 are required to support the Identity Credential HAL, enabling these digital driver’s licenses to be stored on the device’s secure hardware.

Users will be able to share Wallet objects with other Google apps when the sharing option is enabled. For example, users who have saved transit cards to their Wallet will be able to see information about their card and balance when searching for directions using Google Maps. The sharing option won’t be enabled for sensitive cards that are private to the user and stored only on the device like their vaccination card. Users can share multiple passes if developers enable the new grouping feature.

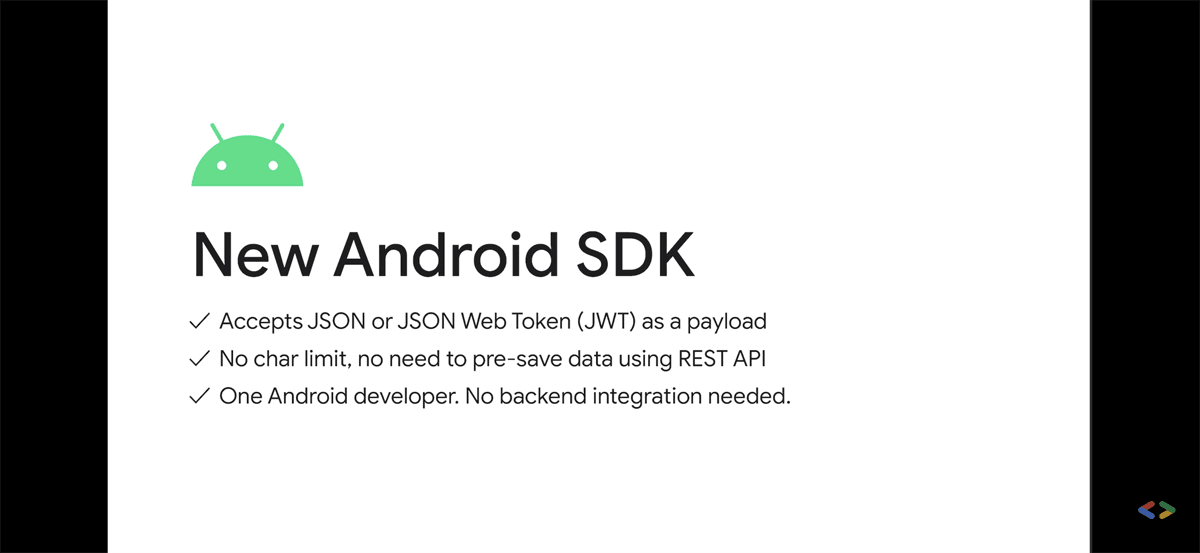

The new Android SDK is more extensible, allowing developers to save all existing and future pass types, mix and match multiple pass types, and group passes. The SDK accepts passes as JSON or JWT, but saving passes directly from an Android app only works if you don’t need to update or edit them after they’ve been saved. If you do, you’ll need to use the REST API.
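A JWT-based save generally means creating a signed token whose payload carries the pass objects. The sketch below only assembles the unsigned `header.payload` portion with Base64url encoding. The claim names (`aud: "google"`, `typ: "savetowallet"`, `payload.genericObjects`) are based on Google Wallet's published JWT format and should be checked against the current reference. The issuer and object values are placeholders, and the hand-built JSON is a simplification. A real token must also be signed with your service account's private key, which is omitted here.

```kotlin
import java.util.Base64

private fun b64Url(s: String): String =
    Base64.getUrlEncoder().withoutPadding().encodeToString(s.toByteArray(Charsets.UTF_8))

/**
 * Builds the unsigned "header.payload" part of a save-to-Wallet JWT.
 * A real token appends ".signature" (RS256 with a service-account key).
 * [objectJson] must already be valid JSON for one generic pass object.
 */
fun unsignedWalletJwt(issuerEmail: String, objectJson: String): String {
    val header = """{"alg":"RS256","typ":"JWT"}"""
    val claims = """{"iss":"$issuerEmail","aud":"google","typ":"savetowallet","payload":{"genericObjects":[$objectJson]}}"""
    return b64Url(header) + "." + b64Url(claims)
}
```

In production, a JSON library and a JWT library are safer than string templates, which don't escape special characters.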

When Wallet launches, developers are encouraged to update their “G Pay | Save to phone” buttons to the new “Add to Google Wallet” button.

Wallet is rolling out to Android and Wear OS devices in over 40 countries around the world in the coming weeks.

Lastly, Google Chrome for Android will let users create virtual card numbers that can be used in place of their real credit card numbers. After setting up a virtual card, it will appear as an autofill option for future checkouts. Users can manage their virtual cards and see their transactions at pay.google.com. The feature will be available for users with cards from issuers like Capital One, Visa, American Express, and Mastercard.

Jetpack

Google announced major updates to the Android Jetpack suite, focusing on three key areas: architecture libraries and guidance, performance optimization of applications, and user interface libraries and guidance.

Room, the data persistence layer over SQLite, is being rewritten in Kotlin, starting with Room 2.5. Room 2.5 also adds built-in support for Paging 3.0 and lets developers perform JOIN queries without needing to define additional data structures. Updates to AutoMigrations simplify database migrations by adding new annotations and properties.
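The JOIN improvement most likely refers to Room's multimap return types. With these, a DAO method with a JOIN `@Query` returns a `Map` such as `Map<Artist, List<Song>>` directly, with no intermediate data class. To show the shape of that result without a Room dependency, the plain-Kotlin sketch below groups hypothetical joined rows the same way; the `Artist` and `Song` types are invented for illustration.

```kotlin
// Hypothetical entities. In Room these would be @Entity classes, and a DAO
// could declare something like:
//   @Query("SELECT * FROM artist JOIN song ON artist.id = song.artistId")
//   fun loadArtistsWithSongs(): Map<Artist, List<Song>>
data class Artist(val id: Long, val name: String)
data class Song(val id: Long, val artistId: Long, val title: String)

/** Groups flat JOIN rows into the Map shape a multimap DAO method returns. */
fun groupJoinRows(rows: List<Pair<Artist, Song>>): Map<Artist, List<Song>> =
    rows.groupBy(keySelector = { it.first }, valueTransform = { it.second })
```

Since `Artist` is a data class, equal rows collapse onto one map key, which is also why multimap keys should have sensible `equals` implementations.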

The Navigation component has been integrated into Jetpack Compose, and its Multiple Back Stacks feature has been improved with better state remembrance. NavigationUI now saves and restores the state of popped destinations automatically, so developers can support multiple back stacks without code changes.
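Multiple back stacks means each top-level destination (typically a bottom-navigation tab) keeps its own history, and switching tabs preserves that history instead of discarding it. The hypothetical `TabbedBackStacks` class below models the behavior in plain Kotlin; it is not the Navigation library's API, where `NavController` and NavigationUI handle this for you.

```kotlin
// Hypothetical model of per-tab back stacks (not androidx.navigation).
class TabbedBackStacks(startTabs: List<String>) {
    // Each tab's stack starts with the tab's own root destination.
    private val stacks = startTabs.associateWith { mutableListOf(it) }

    var currentTab: String = startTabs.first()
        private set

    val currentDestination: String get() = stacks.getValue(currentTab).last()

    fun navigate(destination: String) {
        stacks.getValue(currentTab).add(destination)
    }

    /** Switching tabs leaves the previous tab's stack intact for later. */
    fun selectTab(tab: String) {
        require(tab in stacks) { "unknown tab $tab" }
        currentTab = tab
    }

    /** Pops within the current tab; returns false at the tab's root. */
    fun popBack(): Boolean {
        val stack = stacks.getValue(currentTab)
        if (stack.size == 1) return false
        stack.removeAt(stack.lastIndex)
        return true
    }
}
```

Returning to a tab lands the user where they left off, which is exactly the state the library now saves and restores automatically.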

The Macrobenchmark library has been updated to increase testing speed and add new experimental features. The JankStats library, which launched in alpha back in February, can be used to track and analyze performance problems in an app’s UI.
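JankStats reports per-frame data and flags a frame as janky when it takes noticeably longer than the display's expected frame time. By default, the threshold is twice the expected duration, adjustable through `jankHeuristicMultiplier`. The standalone sketch below applies the same kind of rule to a list of hypothetical frame durations to compute a jank fraction; it doesn't call the JankStats API itself.

```kotlin
/**
 * Fraction of frames (0..1) whose duration exceeds
 * (1000 / refreshRateHz) * multiplier milliseconds. This mirrors the idea
 * behind JankStats' heuristic; it is a standalone sketch, not the library.
 */
fun jankFraction(
    frameDurationsMs: List<Double>,
    refreshRateHz: Double = 60.0,
    multiplier: Double = 2.0,
): Double {
    if (frameDurationsMs.isEmpty()) return 0.0
    val expectedFrameMs = 1000.0 / refreshRateHz
    val threshold = expectedFrameMs * multiplier
    return frameDurationsMs.count { it > threshold }.toDouble() / frameDurationsMs.size
}
```

The same 20ms frame is fine at 60Hz (threshold of about 33ms) but janky at 120Hz (about 17ms), which is why the threshold is tied to the refresh rate.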

Jetpack Compose

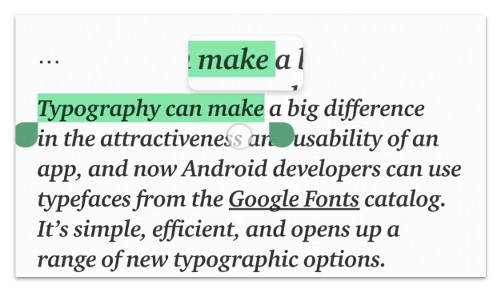

Jetpack Compose 1.2 Beta has been released. It addresses one of the top-voted bugs by making includeFontPadding a customizable parameter. Google recommends setting this value to false, as this enables more precise alignment of text within a layout. When setting the value to false, you can also adapt the line height of your Text composable by setting the lineHeightStyle parameter.

Jetpack Compose 1.2 also introduces downloadable fonts, letting you access Google Fonts asynchronously. You can even define fallback fonts without any complicated setup.

Compose also now supports Android’s text magnifier widget, which is shown when dragging a selection handle to help you see what’s under your finger. Compose 1.1.0 brought the magnifier to selection within text fields, and now 1.2.0 brings support for the magnifier in SelectionContainer. The magnifier has also been improved to match the precise behavior of the Android magnifier in Views.

An experimental API called LazyLayout has been added so developers can implement their own custom lazy layouts. In addition, the grid APIs LazyVerticalGrid and LazyHorizontalGrid have graduated out of experimental status.
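The core job of any lazy layout, including a custom one built on LazyLayout, is to compose only the items currently in view. For fixed-size items, the visible range is a short calculation. The sketch below also maps an index to a row and column the way a fixed-column vertical grid arranges items. It's plain Kotlin with hypothetical function names, not the Compose API, and real lazy layouts also handle variable item sizes, content padding, and prefetching.

```kotlin
/**
 * Indices of fixed-height items intersecting the viewport, or an empty
 * range. [scrollOffsetPx] is how far the content has scrolled.
 */
fun visibleRows(itemCount: Int, itemHeightPx: Int, viewportHeightPx: Int, scrollOffsetPx: Int): IntRange {
    require(itemHeightPx > 0 && viewportHeightPx >= 0 && scrollOffsetPx >= 0)
    if (itemCount == 0 || viewportHeightPx == 0) return IntRange.EMPTY
    val first = scrollOffsetPx / itemHeightPx
    if (first >= itemCount) return IntRange.EMPTY
    // Item containing the last pixel row inside the viewport.
    val last = (scrollOffsetPx + viewportHeightPx - 1) / itemHeightPx
    return first..minOf(last, itemCount - 1)
}

/** Row and column of [index] in a grid with [columns] fixed columns. */
fun gridCell(index: Int, columns: Int): Pair<Int, Int> = Pair(index / columns, index % columns)
```

Scrolling 25px into a list of 50px items with a 200px viewport makes five items visible (indices 0 through 4), since the first and last are only partially shown.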

The insets library in Accompanist has graduated to the Compose Foundation library, using the WindowInsets class.

Window size classes have been released, making it easier to design, develop, and test resizable layouts. These are available in alpha in the new material3-window-size-class library.
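Window size classes sort the available window size into Compact, Medium, and Expanded buckets using fixed breakpoints. For width, anything below 600dp is Compact, 600dp up to 840dp is Medium, and 840dp or more is Expanded; height uses 480dp and 900dp. In an app, you'd get these from `calculateWindowSizeClass()` in the material3-window-size-class library. The standalone sketch below simply reproduces the width breakpoints so the logic is easy to test.

```kotlin
enum class WidthClass { COMPACT, MEDIUM, EXPANDED }

/** Width breakpoints from Material's window size class guidance. */
fun widthClassFor(widthDp: Float): WidthClass {
    require(widthDp >= 0f) { "width must be non-negative" }
    return when {
        widthDp < 600f -> WidthClass.COMPACT // most phones in portrait
        widthDp < 840f -> WidthClass.MEDIUM  // tablets and unfolded foldables in portrait
        else -> WidthClass.EXPANDED          // tablets in landscape, desktop windows
    }
}
```

A typical use is choosing navigation chrome: a bottom navigation bar for Compact and a navigation rail or drawer for the larger classes.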

The Jetpack DragAndDrop 1.0.0 library has reached stable. DropHelper, the first member of the library, is a utility class that simplifies implementing drag and drop capabilities.
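DropHelper is configured with the MIME types a drop target accepts, such as `image/*`. On Android, wildcard comparison is available through `ClipDescription.compareMimeTypes()`. The standalone sketch below shows the same matching idea in plain Kotlin with a hypothetical `acceptsMimeType` function.

```kotlin
// True if mimeType matches any accepted pattern: an exact type such as
// "image/png", a subtype wildcard such as "image/*", or "*/*".
// Matching is case-insensitive; malformed types never match.
fun acceptsMimeType(mimeType: String, accepted: List<String>): Boolean {
    val parts = mimeType.lowercase().split('/', limit = 2)
    if (parts.size != 2) return false
    val (type, subtype) = parts
    return accepted.any { pattern ->
        val p = pattern.lowercase().split('/', limit = 2)
        p.size == 2 && (p[0] == "*" || p[0] == type) && (p[1] == "*" || p[1] == subtype)
    }
}
```

Declaring narrow patterns such as `image/*` lets the drop target highlight only when a compatible item is dragged over it.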

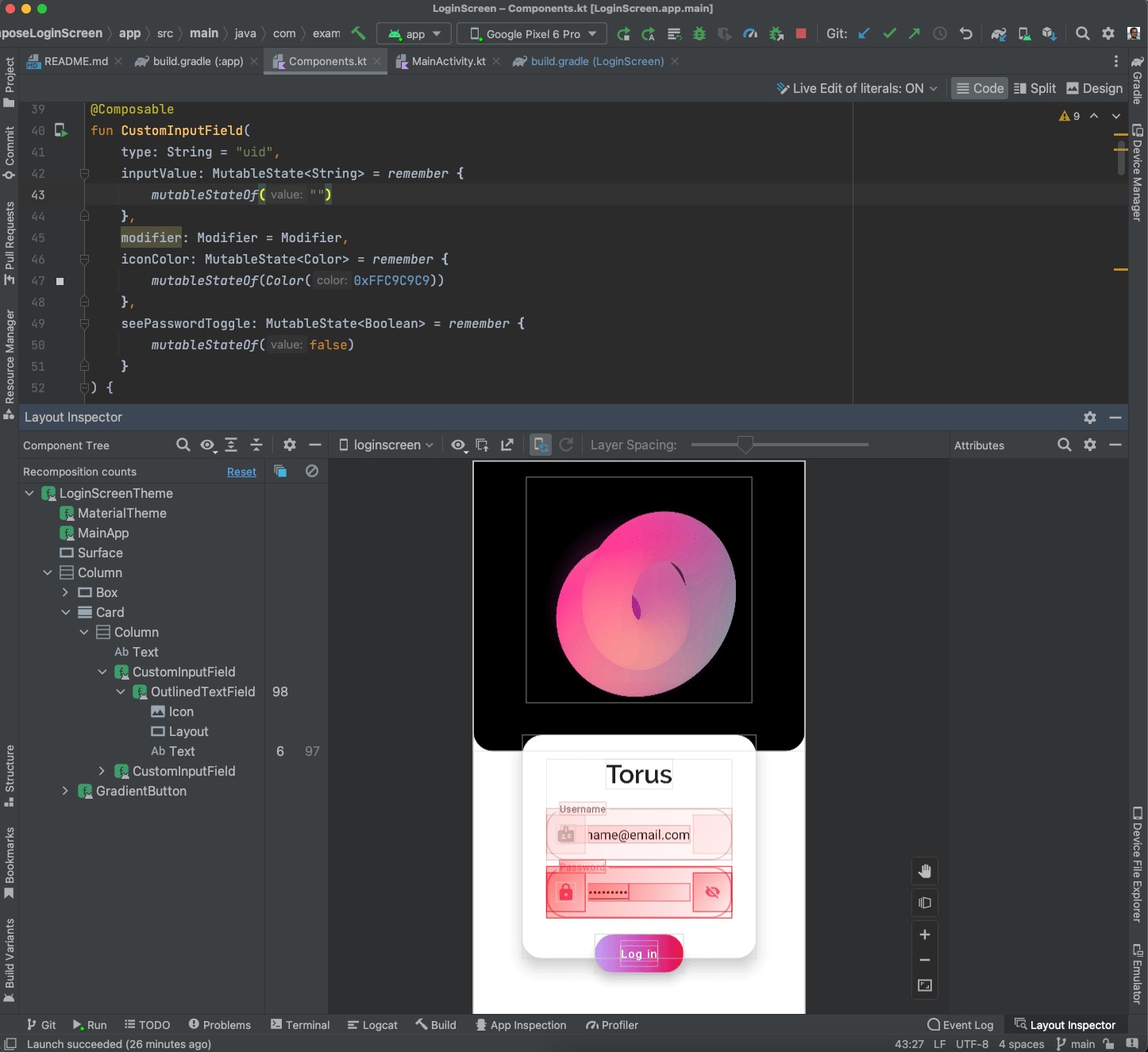

With Android Studio Dolphin, developers can inspect how often composables recompose using the Layout Inspector. High numbers of recomposition are a clue that a composable could be optimized. Dolphin also adds an Animation Coordination tool so developers can see and scrub through all their animations at once, and a MultiPreview annotation to help developers build for multiple screen sizes.

Meanwhile, Android Studio Electric Eel adds a recomposition highlighter, which visualizes which composables recompose and when.

Live Sharing with Google Meet

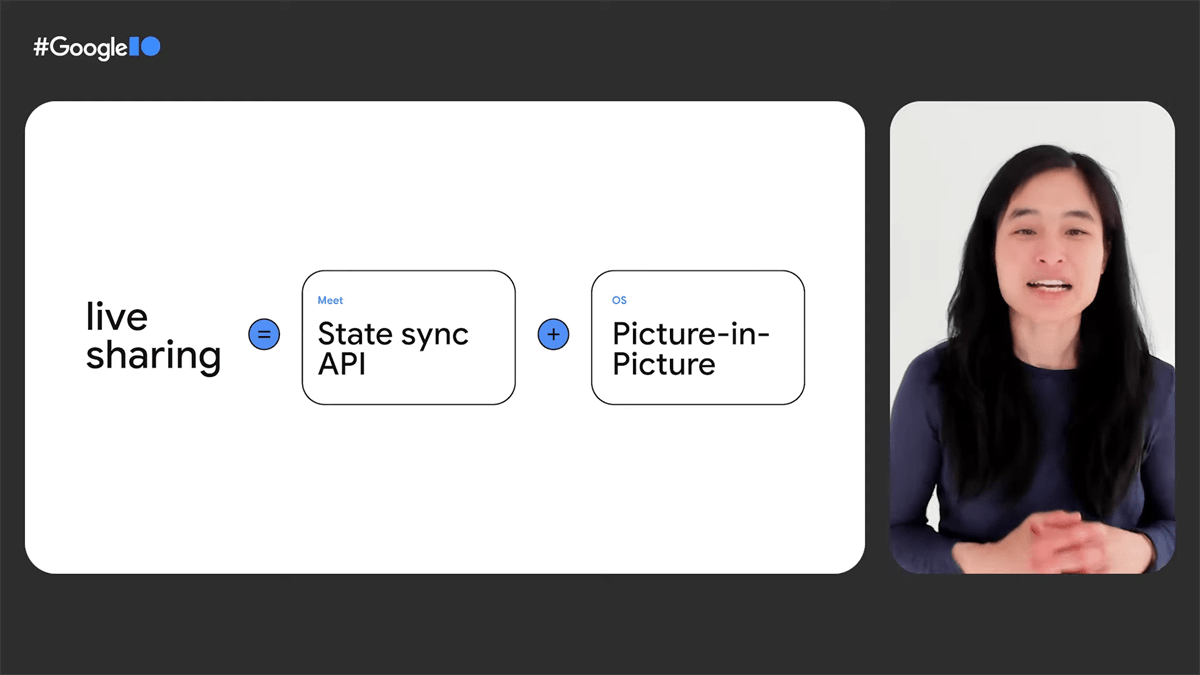

The Google Meet team shared a new library they’ve been working on in the “how to enable cross-platform experiences with Google Meet” talk. The library, called Live Sharing, enables apps to build experiences around real-time, synchronized content viewing with shared controls. For example, YouTube uses the API to let users join a Meet group call and watch and control videos together.

Live Sharing combines Meet’s state sync API and Android’s picture-in-picture mode to achieve this. The state sync API is an API that syncs your app’s content across all participant devices with shared controls, so users can play, pause, or change media content while participating in a group Meet call with others. With PiP, the Meet group call can be shown on top of your app’s window.
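Google didn't detail the state sync protocol, but the basic problem is keeping every participant's playback state consistent when anyone can play, pause, or seek. One common approach, shown below as a hypothetical sketch rather than the Meet API, is to version each update and have every device keep the newest one. Ties are broken deterministically by participant ID so that all devices converge on the same state.

```kotlin
// Hypothetical shared playback state; not the Meet Live Sharing API.
data class PlaybackState(
    val mediaId: String,
    val positionMs: Long,
    val playing: Boolean,
    val version: Long,     // incremented by whoever makes a change
    val updatedBy: String, // participant ID, used to break ties
)

/** Last-writer-wins merge: devices converge regardless of arrival order. */
fun merge(local: PlaybackState, incoming: PlaybackState): PlaybackState = when {
    incoming.version > local.version -> incoming
    incoming.version < local.version -> local
    incoming.updatedBy > local.updatedBy -> incoming // deterministic tie-break
    else -> local
}
```

The trade-off is that when two people act at the same version, one change wins and the other is dropped, which is usually acceptable for playback controls.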

There are three entry points for Live Sharing:

- Start a shared activity from Meet

- Start from your app while in a Meet call

- Start a shared activity from your app

Machine learning

Google shared how it’s following up on some of its promises from last year during the “what’s new in Android machine learning” session. Last year, Google announced that it would deliver TensorFlow Lite runtime updates via Google Play Services, which they started doing as of this February. Developers who switch to this API for inference can shave off up to 2MB from their app size, Google says. In addition, Google Play Services will continue to update the TensorFlow Lite runtime to optimize performance.

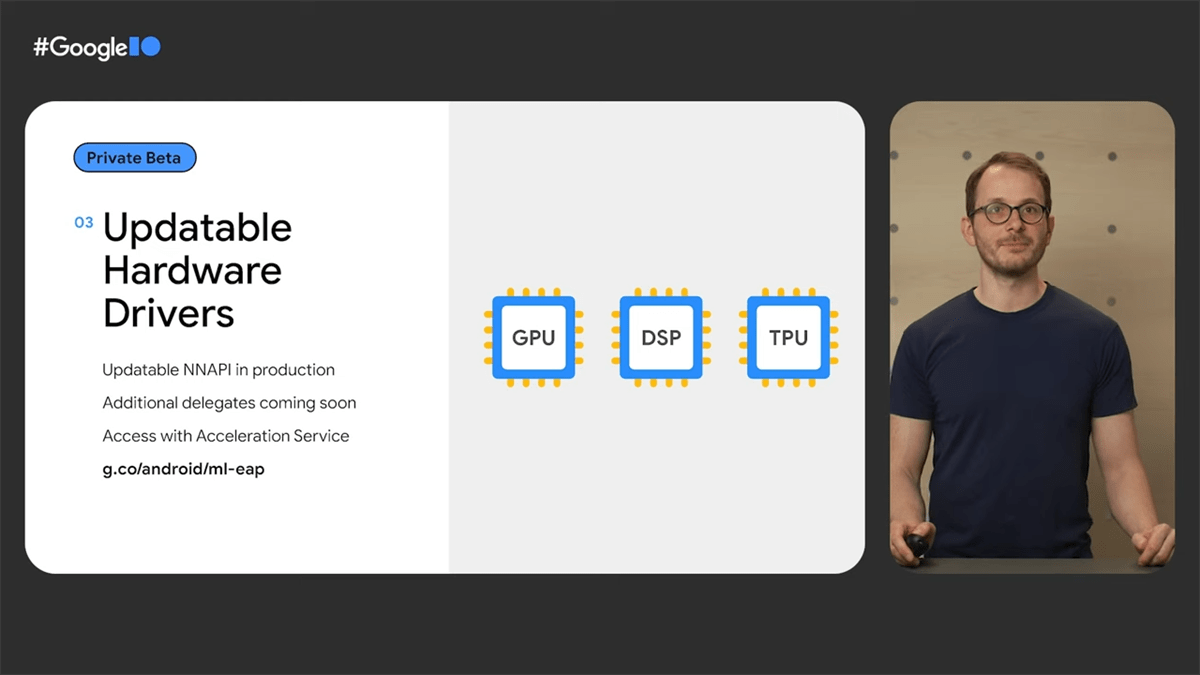

Google also confirmed that its plans to distribute updatable NNAPI drivers are in full swing. According to Google, updatable NNAPI hardware drivers are now live in production under a private beta program. Apps can benefit from them by using the new Acceleration Service or by targeting them directly. It’s unclear, however, whether Android 13 is required to use these drivers.

Acceleration Service for Android is a new API that uses a technology called local benchmarking to determine, at runtime, the optimal hardware on the device for running an ML inference. It’s already used for pose detection in ML Kit, making pose detection up to 43% faster. Apps call into the Acceleration Service with their ML model and a few “golden samples”. The service spins up a separate process to test each available hardware accelerator, which ensures the app won’t suffer from driver stability issues.

After benchmarking is done, the app gets a report on the latency, stability, and accuracy of the different accelerators. Apps can then choose the accelerator they want to use. Plus, the more devices developers test this on, the better it gets at picking accelerators, as telemetry is collected and decision-making is automated in the cloud. Developers can also choose when to run local benchmarks, for example at app initialization or at a later time.
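Once the benchmark report comes back, choosing an accelerator is a policy decision for the app. The sketch below shows one plausible policy using hypothetical types rather than the service's real API. It first discards accelerators that were unstable or fell below an accuracy floor measured against the golden samples, then picks the lowest-latency remaining option. The 0.99 default floor is an arbitrary assumption.

```kotlin
// Hypothetical benchmark report entries; not the Acceleration Service API.
data class AcceleratorResult(
    val name: String,           // e.g. "CPU", "GPU", "NNAPI"
    val medianLatencyMs: Double,
    val stable: Boolean,        // no crashes or hangs in the benchmark process
    val accuracy: Double,       // agreement with golden outputs, 0..1
)

/** Lowest-latency stable accelerator meeting [minAccuracy], or null if none qualifies. */
fun chooseAccelerator(results: List<AcceleratorResult>, minAccuracy: Double = 0.99): AcceleratorResult? =
    results
        .filter { it.stable && it.accuracy >= minAccuracy }
        .minByOrNull { it.medianLatencyMs }
```

Filtering before ranking means a fast but crash-prone driver can never be selected, which matches the stability concern that motivates running benchmarks in separate processes.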

Finally, Google announced the new Google Code Scanner API, which is provided through Google Play Services. This API uses ML Kit to provide a complete QR code scanning solution without requiring apps to request the camera permission, making it easier to integrate code scanning features into apps.

Alongside the machine learning improvements in Android, Google also introduced a few tools to help developers utilize the company’s updated machine learning models for generative language and facial analysis.

Using the AI Test Kitchen app, developers can play around with LaMDA 2, the second version of Google's Language Model for Dialogue Applications. LaMDA uses machine learning to deliver free-flowing conversations about a multitude of topics, and the AI Test Kitchen app lets developers experience LaMDA through a trio of demos. The first demo, “Imagine It,” lets developers name a place and have LaMDA offer follow-up questions. The second demo, “List It,” lets developers name a goal or topic and see how it can be broken down into multiple lists of subtasks. The third demo, “Talk About It,” lets developers pose a random question and see how LaMDA keeps the conversation flowing.

As part of Google’s ongoing research into equitable skin tone representation, Google released a new skin tone scale that’s more inclusive of the skin tones seen in our society. The scale is called the Monk Skin Tone (MST) scale and is named after Dr. Ellis Monk, a Harvard professor and sociologist. Over the coming months, Google will incorporate this 10-shade scale into its products that use computer vision, such as Google Search, Google Photos, and Google Camera. The company has also openly released the scale so others can use it in their own products. Google Image Search will let users refine results by skin tone, Google Photos will add new Real Tone filters, and Google Camera’s existing Real Tone feature for Pixels will see improvements.

Material Design 3

Material Design 3, the third major iteration of Google’s Material design language, was introduced with Android 12 and has now been updated. Google now recommends integrating floating action buttons (FABs) into the bottom bar rather than having them cut into it. The switch has also been redesigned to show a checkmark within the toggle.

Nearby

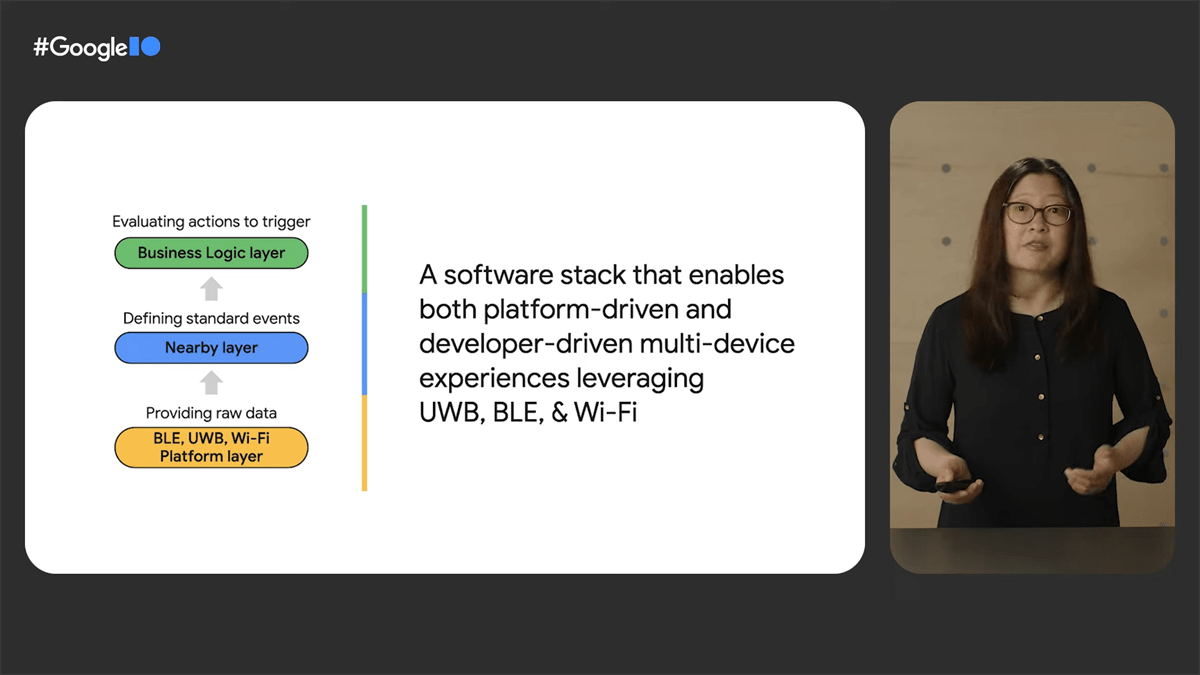

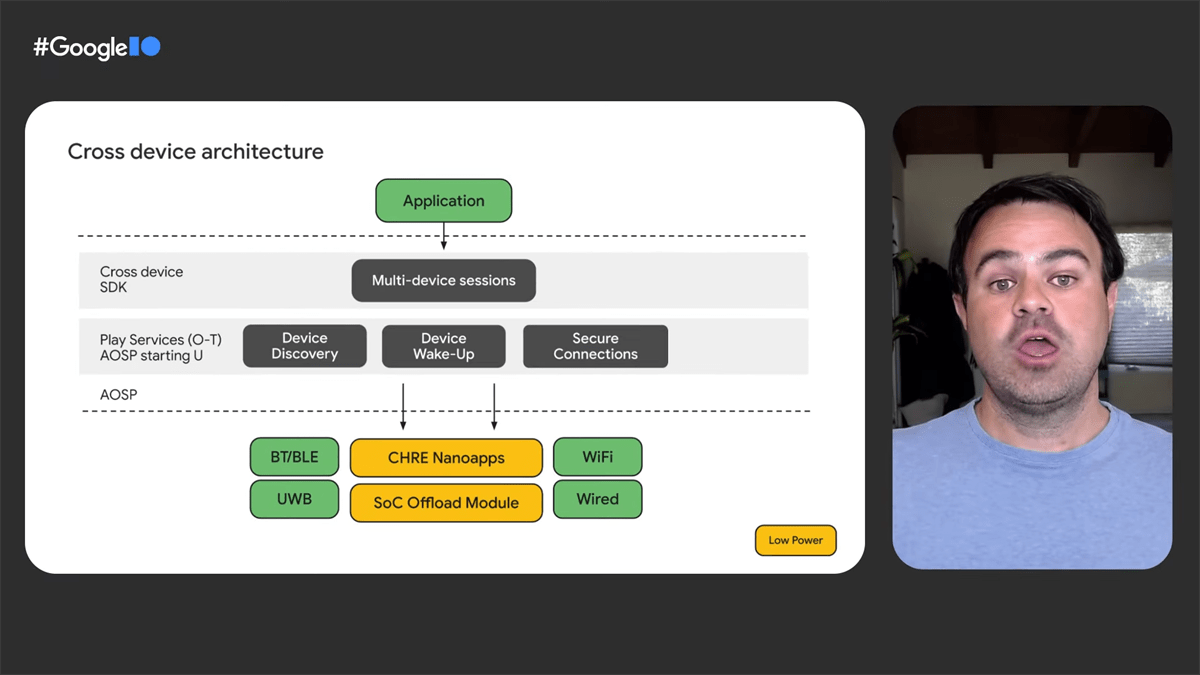

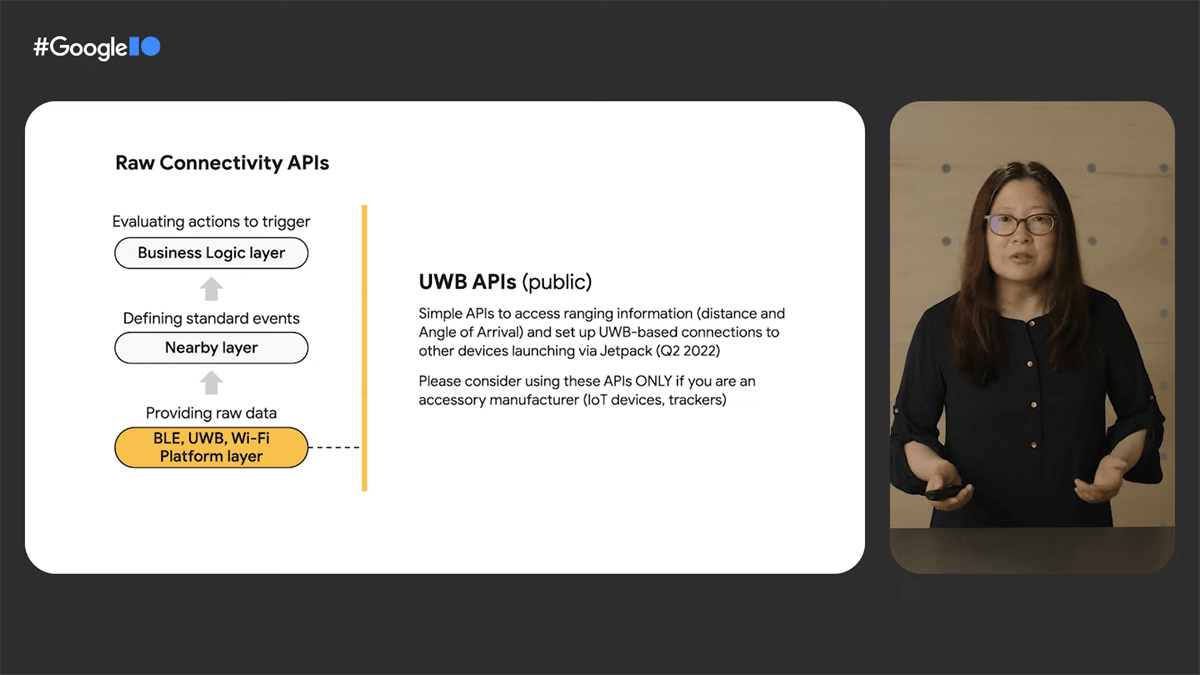

The “build powerful, multi-device experiences” session at Google I/O introduced a new software stack that “enables both platform-driven and developer-driven multi-device experiences,” leveraging raw data from ultra-wideband (UWB), Bluetooth Low Energy (BLE), and Wi-Fi. The stack has three layers:

- At the bottom, a platform layer provides raw BLE, UWB, and Wi-Fi data.
- In the middle, the Nearby layer defines standard events.
- At the top, a business logic layer evaluates which actions to trigger.

The middle Nearby layer already exists today. It handles Bluetooth advertising and scanning and establishes connections between devices, and features like Nearby Share and Phone Hub rely on it. A similar API called Nearby Connections has existed for some time, but it’s currently limited to Android devices.

The business logic layer at the top of the stack provides high-level abstracted APIs that are ideal for building cross-device experiences. These APIs abstract away the underlying connectivity technologies so apps don’t have to worry about what the device is capable of. They also support bidirectional communication between devices so two devices can not only talk to each other but also share a common task. Furthermore, these APIs will be backward compatible down to API level 26 (Android 8.0 Oreo), so they can be used on most Android devices.
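To make the division of labor between the three layers concrete, here is a toy Python model. Raw radio readings come in at the bottom, the middle layer turns them into radio-agnostic events, and app logic at the top decides what to do. This is not the actual SDK, which wasn't shown in code. `RawReading`, `to_event`, `decide`, the "NEAR"/"FAR" events, and the distance and signal-strength thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RawReading:
    """Platform layer: one raw measurement from a radio (hypothetical shape)."""
    device_id: str
    radio: str                      # "uwb", "ble", or "wifi"
    distance_m: Optional[float]     # UWB ranging reports distance directly
    rssi_dbm: Optional[int] = None  # BLE and Wi-Fi report signal strength


def to_event(reading, near_m=1.0, near_rssi_dbm=-60):
    """Nearby layer: turn raw data into a radio-agnostic proximity event."""
    if reading.distance_m is not None:
        near = reading.distance_m <= near_m
    elif reading.rssi_dbm is not None:
        near = reading.rssi_dbm >= near_rssi_dbm  # stronger signal = closer
    else:
        return None
    return (reading.device_id, "NEAR" if near else "FAR")


def decide(event, trusted_devices):
    """Business logic layer: map an event to an app-level action."""
    if event is None:
        return "ignore"
    device_id, proximity = event
    if device_id in trusted_devices and proximity == "NEAR":
        return f"offer handoff to {device_id}"
    return "ignore"


print(decide(to_event(RawReading("tablet", "uwb", 0.4)), {"tablet"}))
# offer handoff to tablet
```

Note that `decide` never looks at which radio produced the reading. That mirrors the point of the high-level APIs: app logic shouldn't have to care what the device is capable of.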

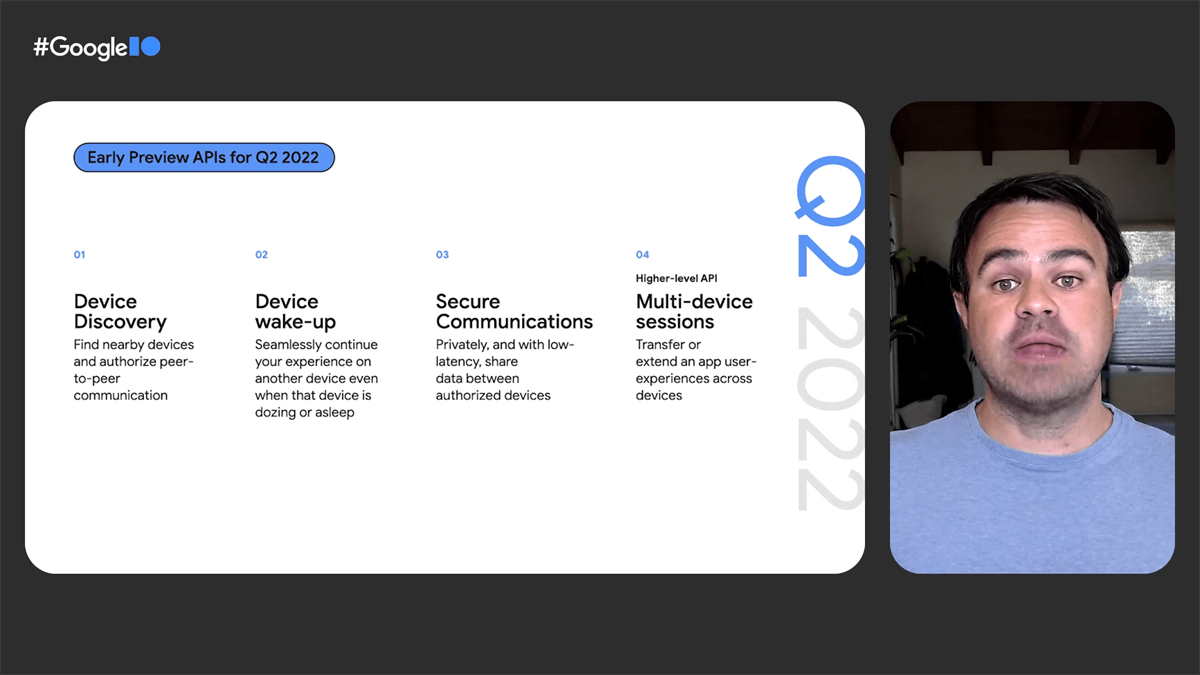

The new APIs Google will offer include the following:

- Device discovery: find nearby devices and authorize peer-to-peer (P2P) communication

- Device wake-up: seamlessly continue an experience on another device, even when that device is dozing or asleep

- Secure communication: share data privately and with low latency between authorized devices

- Multi-device sessions: transfer or extend an app’s user experience across devices

The APIs will be able to take advantage of SoC offload modules to offload discovery and connections to low-power hardware (on supported devices). The architecture also includes CHRE nanoapps, standardized APIs, and a new open OTA protocol.

These APIs will be delivered through a new open-source SDK backed by new platform capabilities. They will be added to AOSP with Android 14, but they will ship soon through Google Play Services with support back to API level 26.

For accessory makers building IoT devices or trackers, Google will launch a simple set of UWB APIs through a Jetpack library in the coming month.

For users, Google announced that the Phone Hub feature will let Pixel users stream their messaging apps to Chrome OS, so they can see and respond to all their messages without picking up their phone. In addition, Google announced that Nearby Share will soon let users copy text or pictures on their phone and share them with their other devices.

Passkeys

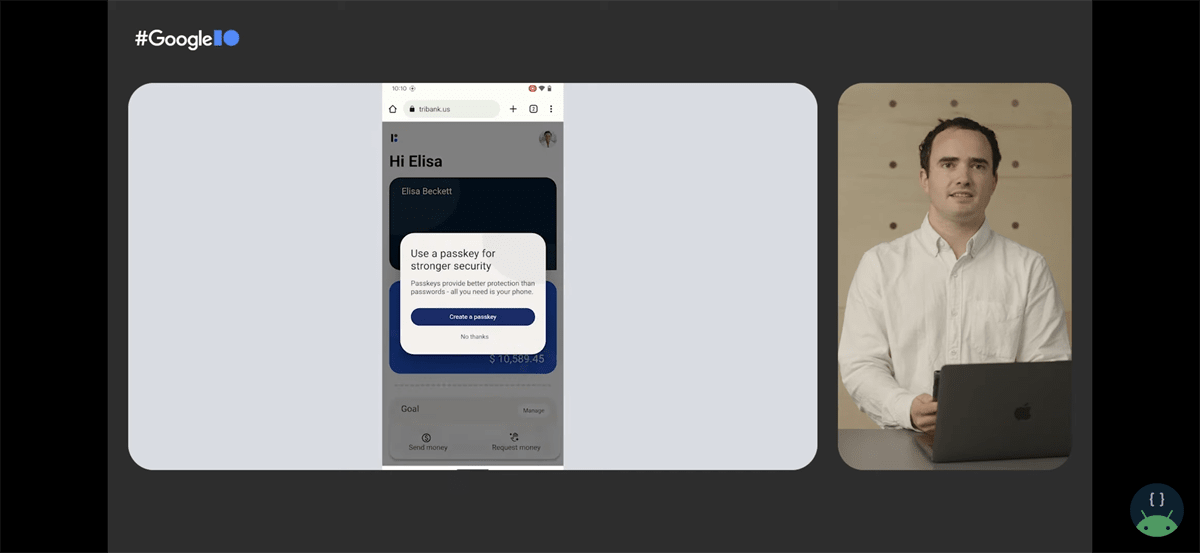

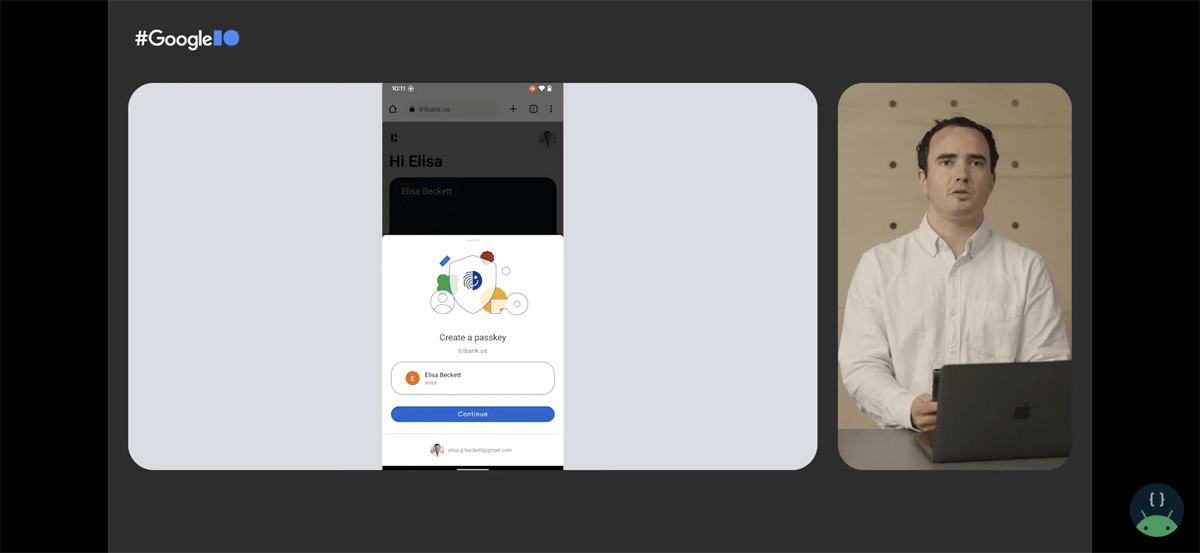

The FIDO Alliance recently announced its plans to replace usernames and passwords with “passkeys”. At Google I/O, Google shared how it’s bringing this feature to Android via Google Play Services and Chrome. The talk, “a path to a world without passwords,” covers the feature in detail, but here’s what’s new for Android.

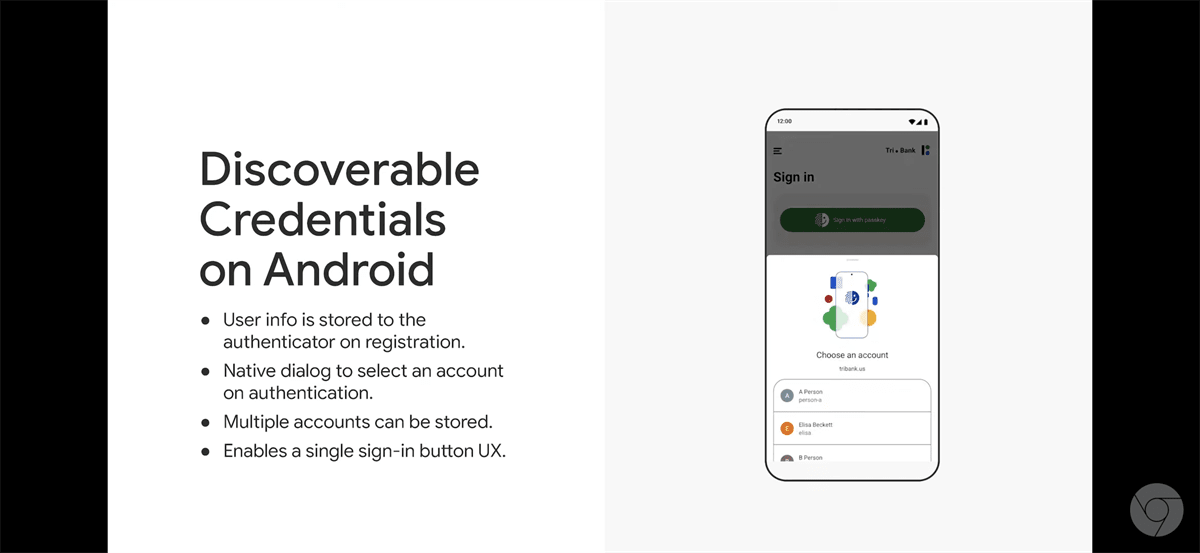

In the current FIDO implementation, authenticating a user requires a list of credential IDs, which means you need to know who the user is first. This is suboptimal because the user has to enter their username before authenticating.

Platform authenticators are also built into the device, so they can only be used on that device. Users can register multiple platform authenticators with their account so they can use multiple devices. However, every time they switch to a new device, they have to register it as a platform authenticator again. As a result, the website still needs an account recovery process, and as long as that process relies on something like a password, phishing remains possible.

To eliminate the need for usernames, you need to use discoverable credentials. With discoverable credentials, users choose an account provided by the device’s OS and then authenticate locally to sign in, skipping username entry. This works because the authenticator remembers the user account as a discoverable credential. That enables a very quick sign-in form where a single button invokes WebAuthn. Chrome on desktop already supports this functionality, and support is finally coming to Android later this year.
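The difference between the two flows shows up in the WebAuthn request options a server sends to `navigator.credentials.get()`. The Python sketch below builds those options both ways. In the classic flow, the server lists the known user's credential IDs in `allowCredentials`. In the discoverable flow, that list stays empty and the authenticator offers its own credentials for the site.

The field names (`challenge`, `rpId`, `timeout`, `userVerification`, `allowCredentials`) come from the WebAuthn specification. The credential store, username, and IDs are hypothetical. For the discoverable flow to work, the credential must also have been registered as discoverable (for example, with `authenticatorSelection.residentKey` set to `"required"`). The base64url strings also need to be decoded back into binary in the browser before the call.

```python
import base64
import secrets

# Hypothetical server-side store: username -> registered credential IDs.
CREDENTIALS = {"alice": [b"\x01\x02\x03", b"\x04\x05\x06"]}


def b64url(data: bytes) -> str:
    """Base64url without padding, the encoding WebAuthn JSON usually uses."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def request_options(rp_id, username=None):
    """Build PublicKeyCredentialRequestOptions for navigator.credentials.get().

    Classic flow: the username is known, so list that user's credential IDs
    in allowCredentials. Discoverable flow: no username is asked for, so
    allowCredentials stays empty and the authenticator offers its own
    discoverable credentials for this relying party.
    """
    options = {
        "challenge": b64url(secrets.token_bytes(32)),  # fresh per request
        "rpId": rp_id,
        "timeout": 60000,                              # milliseconds
        "userVerification": "required",
        "allowCredentials": [],
    }
    if username is not None:
        options["allowCredentials"] = [
            {"type": "public-key", "id": b64url(cred_id)}
            for cred_id in CREDENTIALS.get(username, [])
        ]
    return options


print(request_options("example.com", "alice")["allowCredentials"][0])
# {'type': 'public-key', 'id': 'AQID'}
print(request_options("example.com")["allowCredentials"])  # []
```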

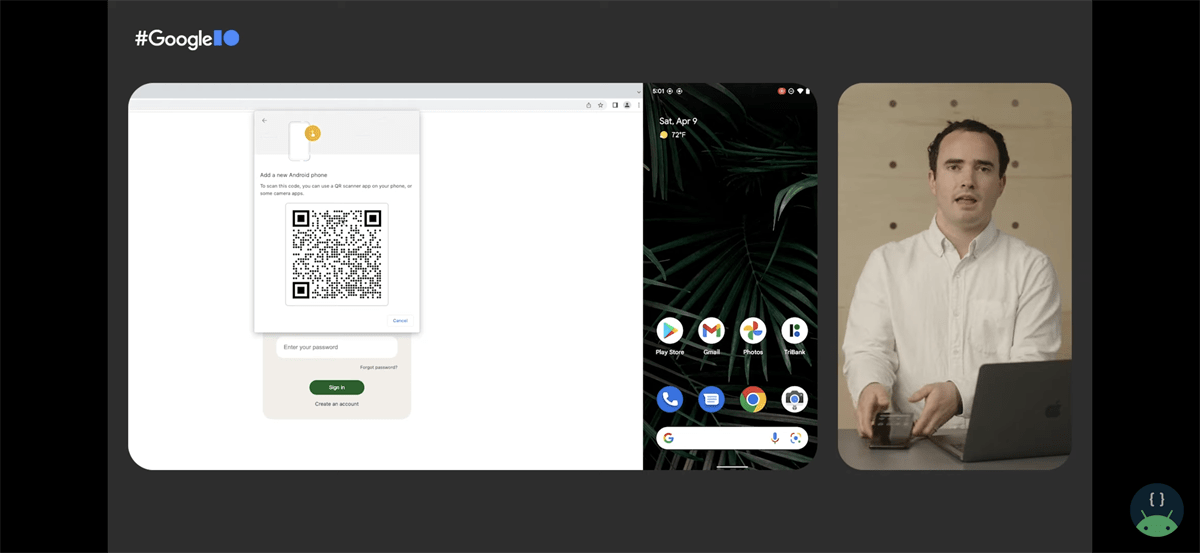

The new FIDO standard for passwordless sign-in supports cross-device sign-ins with passkeys. This lets a user sign in on a Windows machine even though the passkey is stored on their Android device. When the user tries to sign in to a website on another platform for the first time, the WebAuthn dialog shows an option to sign in with their phone. The user scans the QR code with their phone, chooses an account, and authenticates locally by unlocking the phone. The QR code is only needed once. The next time, the user can pick the phone’s name from the list of discoverable credentials and skip scanning. Passkeys on Android will be available later this year.
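The "scan once, then pick the phone by name" behavior can be modeled as a small piece of state on the device the user is signing in from. The Python toy below captures only that bookkeeping. It leaves out the actual cryptographic protocol between the phone and the other device, and the `CrossDeviceSignIn` class and its method names are hypothetical.

```python
class CrossDeviceSignIn:
    """Toy model of the sign-in dialog on a non-phone device.

    The first sign-in from a given phone goes through a QR code. Once the
    user has unlocked the phone and authenticated, the phone is remembered
    and offered by name on later attempts.
    """

    QR_OPTION = "Scan QR code with a phone"

    def __init__(self):
        self.linked_phones = set()

    def options(self):
        """What the sign-in dialog offers: known phones first, then QR."""
        return sorted(self.linked_phones) + [self.QR_OPTION]

    def complete_qr_sign_in(self, phone_name, unlocked):
        """The phone scanned the QR code; the user must unlock it locally."""
        if not unlocked:
            return False
        self.linked_phones.add(phone_name)
        return True


dialog = CrossDeviceSignIn()
print(dialog.options())  # ['Scan QR code with a phone']
dialog.complete_qr_sign_in("Pixel 6", unlocked=True)
print(dialog.options())  # ['Pixel 6', 'Scan QR code with a phone']
```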

Performance Class 13

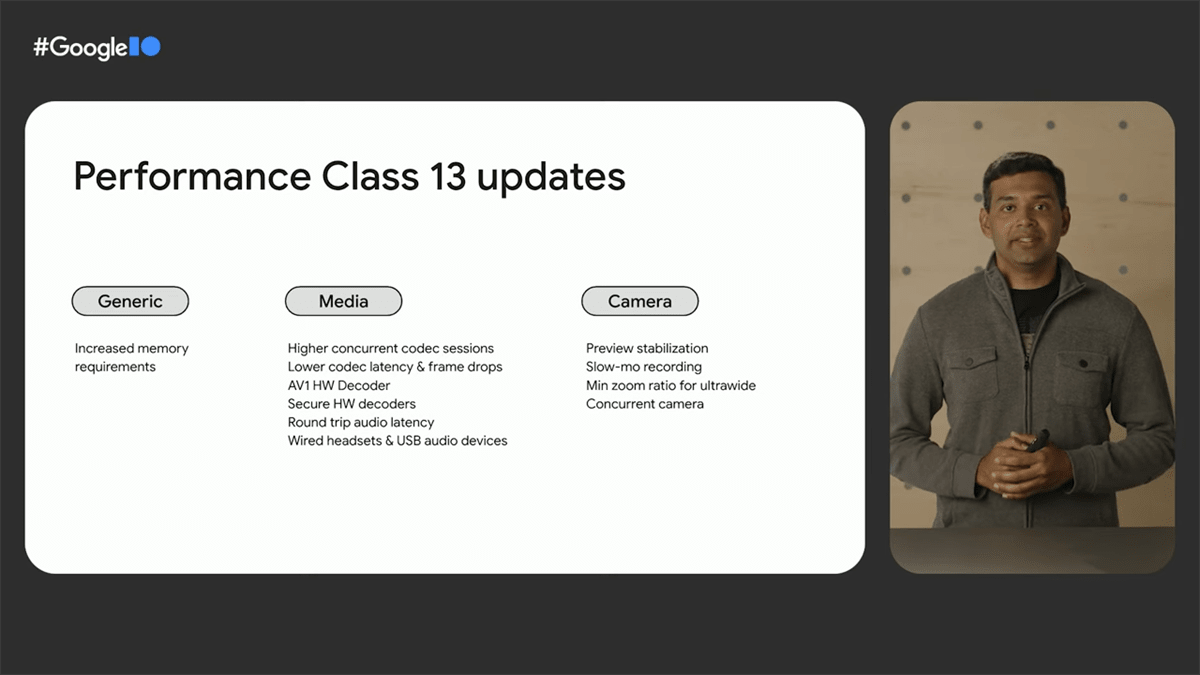

Android 12 introduced the concept of the “Performance Class,” a set of hardware and software criteria that identifies devices capable of handling highly demanding media processing tasks. The requirements for declaring support for Performance Class 12 are well-defined in the CDD, but the CDD for Android 13 isn’t available yet. Ahead of its release, Google revealed the criteria for Performance Class 13 in the “what’s new in Android media” talk.

Performance Class 13 makes the following changes to the previous iteration:

- Generic

  - Increased memory requirements

- Media

  - Higher concurrent codec sessions

  - Lower codec latency & frame drops

  - AV1 hardware decoder support

  - Secure hardware decoders

  - Round-trip audio latency

  - Wired headsets & USB audio devices

- Camera

  - Preview stabilization

  - Slow-mo recording

  - Minimum zoom ratio for ultrawide

  - Concurrent camera

Google, unfortunately, didn’t share exact numbers for each requirement, but this provides a good idea of what to expect from devices declaring Performance Class 13. Making hardware-accelerated AV1 decoding a requirement is particularly interesting, as current Qualcomm Snapdragon chipsets do not support this feature.
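On a device, apps read the declared class from `Build.VERSION.MEDIA_PERFORMANCE_CLASS`. It is 0 when nothing is declared and otherwise the API level the class corresponds to: 31 for Performance Class 12 and 33 for Performance Class 13. The Python sketch below models how an app might gate media features on that value. The `choose_media_features` function, the feature names, and the decoder counts are hypothetical policy choices, not requirements from the CDD.

```python
# Values Build.VERSION.MEDIA_PERFORMANCE_CLASS can report on Android:
# 0 means no class is declared; otherwise it is the API level of the class
# the device meets (31 = Android 12, 33 = Android 13).
PERFORMANCE_CLASS_12 = 31
PERFORMANCE_CLASS_13 = 33


def choose_media_features(media_performance_class):
    """Hypothetical app policy mapping a declared class to media features."""
    features = {"max_concurrent_decoders": 1, "prefer_av1": False}
    if media_performance_class >= PERFORMANCE_CLASS_12:
        features["max_concurrent_decoders"] = 2
    if media_performance_class >= PERFORMANCE_CLASS_13:
        # Class 13 adds a hardware AV1 decoder requirement, so AV1
        # streams can be requested without probing codecs first.
        features["prefer_av1"] = True
    return features


print(choose_media_features(33))
# {'max_concurrent_decoders': 2, 'prefer_av1': True}
```

Comparing with `>=` rather than `==` matters because a device declaring a newer class also meets the older class's requirements.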

Performance and ART

Optimizing an app’s performance can be difficult, but Google’s “what’s new in app performance” talk offers a few tips about features you can try and tools you can use to identify performance pitfalls. It also brings up some upcoming changes to the Android runtime that are worth mentioning.

First of all, Google reiterated the recent launch of baseline profiles. Baseline profiles provide guided ahead-of-time compilation, improving app launch and hot code path performance. Apps provide the Android runtime a list of classes and methods to pre-compile into machine code. Google Maps improved its app startup time by 30% using baseline profiles, for example.

Libraries can also ship baseline profiles to reduce the time it takes an app to render. Jetpack Compose and the Fragment library both use them.

To use baseline profiles, developers first run a specialized Macrobenchmark test that generates the profile. The profile can then be pulled from the device and placed in the app’s source folder. The profile installer then picks up the baseline profile and installs it on the device when the app is installed.
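Baseline profiles are plain-text files, typically `baseline-prof.txt` in the module's `src/main` folder, that use ART's human-readable rule format. Class rules look like `Lcom/example/Foo;`. Method rules are prefixed with flags: `H` for hot, `S` for startup, and `P` for post-startup. The Python sketch below parses such rules to show what a profile contains. The sample package and class names are made up, and the parser is illustrative, not the one ART or the Android Gradle plugin uses.

```python
import re

# Method rule: optional flags (H = hot, S = startup, P = post-startup),
# a class descriptor, "->", then the method name and signature.
METHOD_RULE = re.compile(r"^([HSP]*)(L[^;]+;)->(.+)$")
# Class rule: just a class descriptor.
CLASS_RULE = re.compile(r"^L[^;]+;$")


def parse_profile(text):
    """Split baseline-profile rules into class rules and method rules."""
    classes, methods = [], []
    for raw in text.splitlines():
        line = raw.strip()
        method = METHOD_RULE.match(line)
        if method:
            flags, cls, signature = method.groups()
            methods.append({"class": cls, "method": signature, "flags": set(flags)})
        elif CLASS_RULE.match(line):
            classes.append(line)
        # Blank or unrecognized lines are ignored in this sketch.
    return classes, methods


SAMPLE = """\
HSPLcom/example/app/MainActivity;->onCreate(Landroid/os/Bundle;)V
SPLcom/example/app/Repository;->load()Ljava/util/List;
Lcom/example/app/MainActivity;
"""

classes, methods = parse_profile(SAMPLE)
print(classes)                      # ['Lcom/example/app/MainActivity;']
print(sorted(methods[0]["flags"]))  # ['H', 'P', 'S']
```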

To help developers profile performance, Google announced the Jetpack Microbenchmark 1.1.0 release. It adds a new profiling stage that counts allocations and prints them in the test results. It also adds profiling support, so you can load traces into Android Studio for further inspection after a benchmark finishes. In addition, developers can set up microbenchmarks through Android Studio instead of configuring them manually.

Under the hood, Google is preparing to roll out ART updates that improve JNI performance and perform more bytecode verification at install time. Google says that switching between Java and native code will be up to 2.5X faster following the updates. The reference processing implementation has also been reworked to be mostly non-blocking, and class method lookup has been improved to make the interpreter faster. Finally, Google is introducing a new garbage collector algorithm, which it spent quite a bit of time discussing.

Google’s new GC algorithm is meant to eliminate the fixed memory overhead incurred by the current algorithm. Google set three goals for it:

- It shouldn’t hurt performance while it’s running, since users won’t tolerate jank.
- It should improve the device’s battery life.
- It should keep memory usage low, because the cumulative memory apps want to commit (for heaps, caches, etc.) is always higher than the device’s RAM.

That last point is especially important. If the OS isn’t careful about memory usage, it will be forced to kill apps, which leads to slow cold starts.

Because allocation happens very frequently during an app’s execution, the new GC needs to make it fast, with minimal CPU consumption and therefore minimal battery drain. To avoid jank, the GC should do its work concurrently so apps aren’t paused during garbage collection. Lastly, the GC should compact the heap to deal with fragmentation.

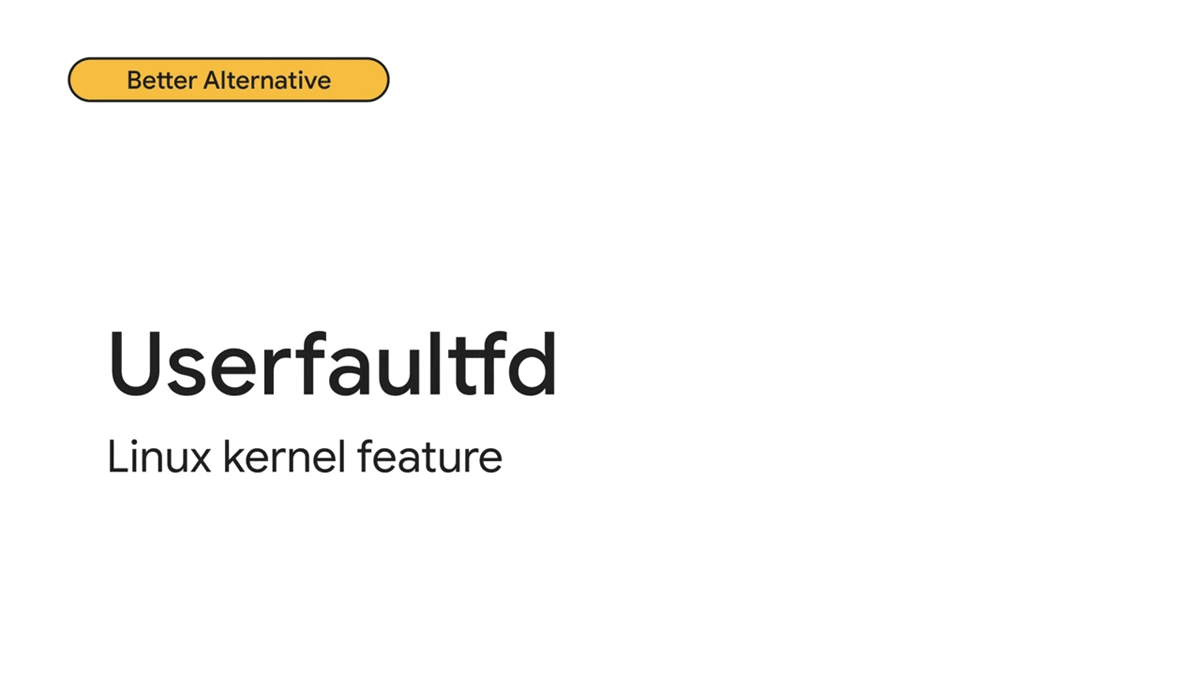

ART’s current GC, called concurrent copying, already meets these needs, but it comes with a fixed memory overhead tied to every object load. This overhead is incurred even when the GC isn’t running, because the GC relies on a read barrier that executes whenever an application thread loads an object. In addition, when defragmenting the heap, concurrent copying first copies scattered objects contiguously into another region. Only then does it reclaim the space those objects occupied in the original region. This produces a Resident Set Size (RSS) cliff, as RSS goes up and then down during the process, potentially forcing the OS to kill background apps.

To address this, Google is developing a new GC that performs concurrent compaction using userfaultfd, a Linux kernel feature that provides the same functionality as the read barrier without its fixed memory overhead. Google says that, on average, about 10% of compiled code size was attributable to the read barrier alone. The new GC also avoids the RSS cliff, since pages can be freed as compaction progresses rather than only at the end of the collection.

The algorithm is more efficient as well. The number of atomic operations that stall the processor pipeline is on the order of the number of pages rather than the number of references. The algorithm works sequentially in memory order, which makes it friendly to hardware prefetching. It also preserves objects’ allocation locality, so objects allocated next to each other stay next to each other, reducing the burden on hardware resources.
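To make the RSS cliff concrete, here is a toy Python model that counts resident pages over the course of one compaction. It is not ART's actual algorithm. The first function models a copying-style collection that keeps the old region resident until the end. The second models page-by-page compaction in place, where a page can be released as soon as it has been evacuated. The heap layout and page capacity are made-up numbers, and the model assumes no page holds more live objects than `page_capacity`.

```python
import math


def copying_rss(live_per_page, page_capacity):
    """Resident pages over time for a copying-style compaction: live objects
    are copied into a fresh region while the old region stays resident, and
    the old region is released only at the end (the "RSS cliff")."""
    old_pages = len(live_per_page)
    timeline = [old_pages]
    copied = new_pages = 0
    for live in live_per_page:
        copied += live
        new_pages = math.ceil(copied / page_capacity)
        timeline.append(old_pages + new_pages)  # both regions resident
    timeline.append(new_pages)                  # old region freed at once
    return timeline


def sliding_rss(live_per_page, page_capacity):
    """Resident pages over time for page-by-page in-place compaction: once a
    source page has been evacuated into lower destination pages, any page
    beyond the destination frontier can be released immediately."""
    n = len(live_per_page)
    timeline = [n]
    moved = 0
    for i, live in enumerate(live_per_page):
        moved += live
        dest_pages = math.ceil(moved / page_capacity)
        timeline.append(dest_pages + (n - i - 1))  # dest + unprocessed pages
    return timeline


heap = [2, 1, 3, 0, 2]  # live objects per page; 4 objects fit in a page
print(copying_rss(heap, 4))  # [5, 6, 6, 7, 7, 7, 2]
print(sliding_rss(heap, 4))  # [5, 5, 4, 4, 3, 2]
```

Both approaches end with the same two compacted pages. However, the copying model peaks at seven resident pages before dropping, while the in-place model never exceeds the original five and shrinks steadily as it goes.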

Privacy

Android 13 introduces a plethora of new privacy features that developers need to be aware of, which is the idea behind the “developing privacy user-centric apps” talk at Google I/O. Some of the changes brought up in the talk include:

- Updates to the privacy dashboard in Android 13, which can now show permission access from the past 7 days.

- Going forward, users can dismiss foreground service notifications (but will still be able to find them in the Foreground Service Task Manager).

- In an upcoming update to the System Photo Picker, cloud media providers like Google Photos will also be supported. It will seamlessly include backed-up photos and videos as well as custom albums the user creates.

- Android 13 will automatically wipe clipboard data about 1 hour after it was last accessed.
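The clipboard rule in the last item is essentially an inactivity timeout. The toy Python model below illustrates it, assuming that any read resets the timer. Timestamps are passed in explicitly so the behavior is easy to follow. The `Clipboard` class and the exact 3,600-second cutoff are hypothetical stand-ins for whatever the platform does internally.

```python
from dataclasses import dataclass
from typing import Optional

CLEAR_AFTER_S = 60 * 60  # "about 1 hour"; the exact value is an assumption


@dataclass
class Clipboard:
    """Toy clipboard that forgets its contents after an hour without access."""
    content: Optional[str] = None
    last_access_s: float = 0.0

    def copy(self, text, now_s):
        self.content = text
        self.last_access_s = now_s

    def paste(self, now_s):
        if self.content is not None and now_s - self.last_access_s >= CLEAR_AFTER_S:
            self.content = None            # expired: wipe before anyone reads it
        if self.content is not None:
            self.last_access_s = now_s     # reading counts as an access
        return self.content


clip = Clipboard()
clip.copy("123456", now_s=0)
print(clip.paste(now_s=30 * 60))   # 123456
print(clip.paste(now_s=120 * 60))  # None
```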

Privacy Sandbox

If you’re interested in learning about the multi-year Privacy Sandbox on Android initiative, I’ve written a summary of the APIs it offers. The Privacy Sandbox was also the topic of the “building the Privacy Sandbox” talk at Google I/O, where Google answered a question I had about the feature’s rollout. Google revealed that key components of the Privacy Sandbox, including the SDK Runtime, Topics, FLEDGE, and the Attribution Reporting APIs, will be distributed to mobile devices as mainline modules. When the project enters its Beta phase, Google will begin rolling out those mainline module updates to supported devices.

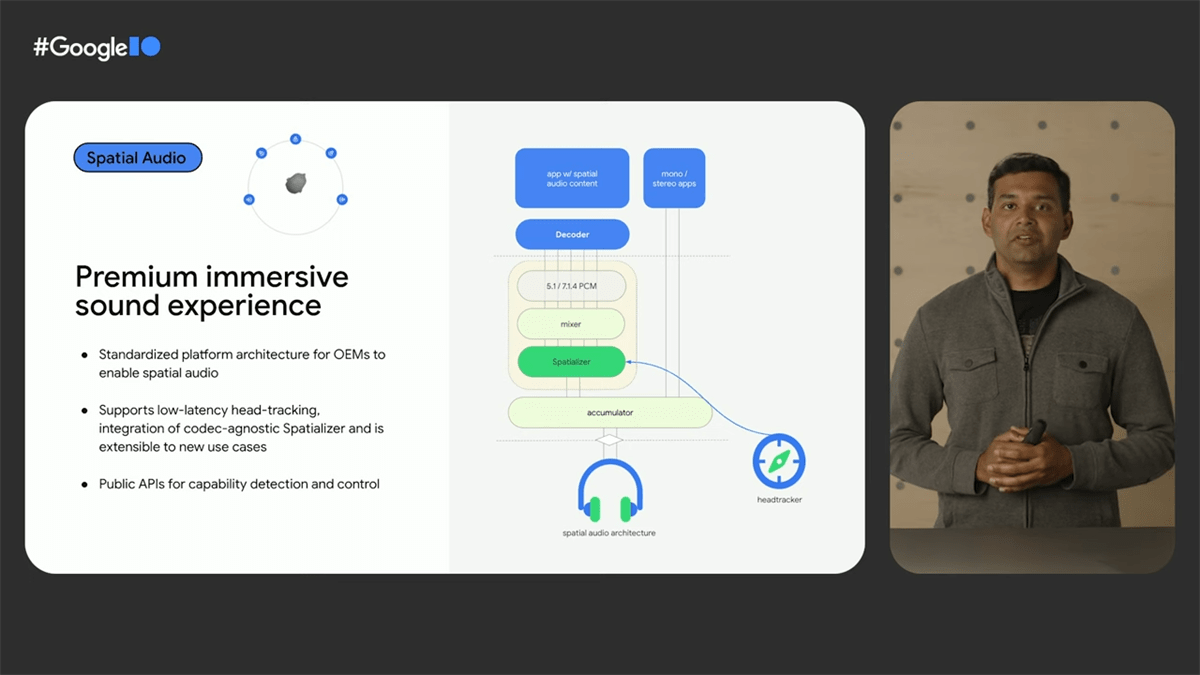

Spatial Audio

The “what’s new in Android media” talk spends some time on Android 13’s support for spatial audio. The audio framework in Android 13 supports both static spatial audio and dynamic spatial audio with head tracking. OEMs looking to enable this feature can use a standardized platform architecture to integrate multichannel codecs. The new architecture enables lower-latency head tracking and integration with a codec-agnostic spatializer.

Meanwhile, app developers can use new public APIs to detect device capabilities and multichannel audio. These APIs are part of the Spatializer class, first introduced in Android 12L. The first of them lets apps check whether the device can output spatialized audio. Android 13 will also include a standard spatializer in the platform and a standard head-tracking protocol.

ExoPlayer 2.17 includes updates to configure the platform for multichannel spatial audio out of the box. ExoPlayer enables spatialization behavior and configures the decoder to output a multichannel stream on Android 12L+ when possible. All developers have to do is include a multichannel audio track in their media content.
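The ExoPlayer behavior described above boils down to a decision an app could also make itself: use a multichannel track only when the device will actually spatialize it, and otherwise fall back to stereo. The Python sketch below models that decision. Plain booleans stand in for the checks Android's `Spatializer` class exposes: `getImmersiveAudioLevel()`, `isAvailable()`, `isEnabled()`, and `canBeSpatialized()`. The `SpatializerState` type, the track dictionaries, and the fallback policy are hypothetical, and this is not ExoPlayer's actual code.

```python
from dataclasses import dataclass


@dataclass
class SpatializerState:
    """Plain-Python stand-ins for the Spatializer checks on Android."""
    immersive_level_none: bool          # getImmersiveAudioLevel() reports NONE
    available: bool                     # isAvailable()
    enabled: bool                       # isEnabled()
    can_spatialize_multichannel: bool   # canBeSpatialized(attributes, format)


def choose_audio_track(state, tracks):
    """Prefer the widest multichannel track only when it will be spatialized;
    otherwise pick the best stereo (or mono) track."""
    spatialize = (
        not state.immersive_level_none
        and state.available
        and state.enabled
        and state.can_spatialize_multichannel
    )
    if spatialize:
        multichannel = [t for t in tracks if t["channels"] > 2]
        if multichannel:
            return max(multichannel, key=lambda t: t["channels"])
    stereo = [t for t in tracks if t["channels"] <= 2]
    return max(stereo, key=lambda t: t["channels"]) if stereo else tracks[0]


tracks = [{"id": "stereo", "channels": 2}, {"id": "5.1", "channels": 6}]
print(choose_audio_track(SpatializerState(False, True, True, True), tracks)["id"])   # 5.1
print(choose_audio_track(SpatializerState(False, True, False, True), tracks)["id"])  # stereo
```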

What’s new in Android TV and Google TV?

During the aptly named “what’s new with Android TV and Google TV” talk, Google revealed a number of new features coming to the Android 13-based platform update. These features are available for testing in the Android 13 Beta 2 release for Android TV, available now through the Android Emulator. I’ve already mentioned nearly all of these changes in my Android 13 deep dive, but for reference, here’s a list:

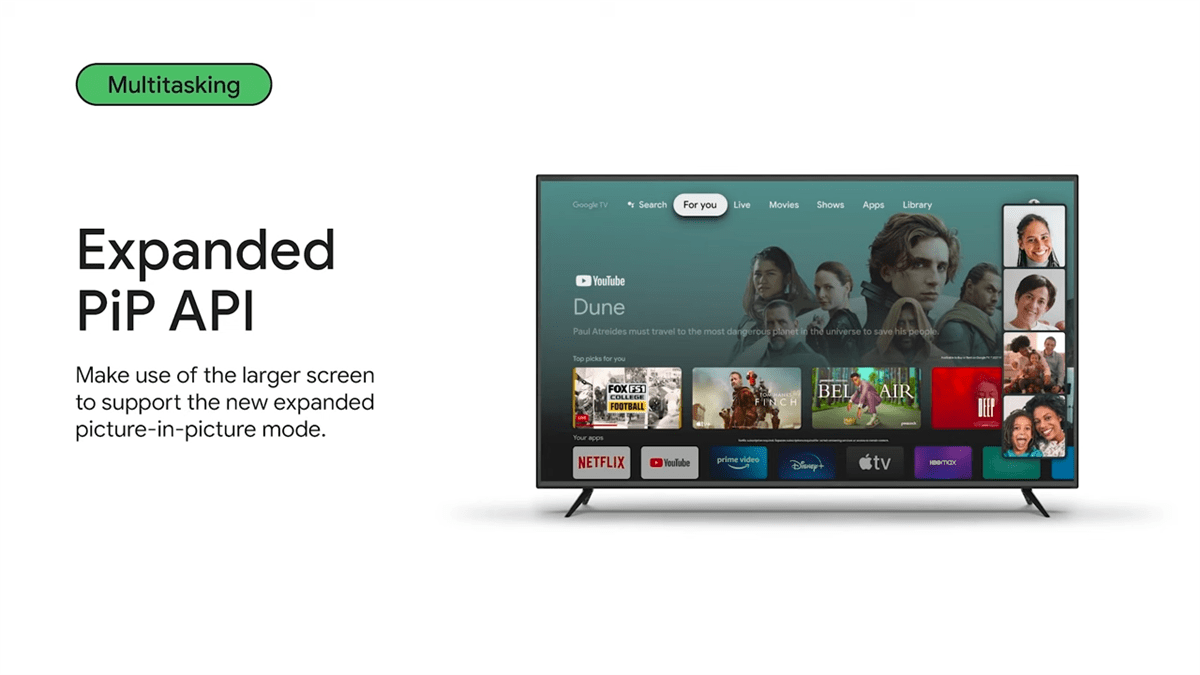

- Expanded picture-in-picture mode

- HDMI state changes are surfaced to the MediaSession lifecycle

- Keep clear APIs

- Keyboard layouts API

- Picture-in-picture mode support comes to Google TV

In addition, the Google TV app will soon let users seamlessly cast movies, TV shows, and more from connected services to their TV without leaving the Google TV app.

What's new for Wear OS?

Google’s announcements for Wear OS at Google I/O are summarized in the “create beautiful, power-efficient apps for Wear OS” talk, and can be broadly split into three categories: hardware, design, and health. The hardware, for those of you who don’t know, is a new Pixel Watch, but this article will focus on the other two aspects as they’re more directly related to Android.

To make it easier to design apps for Wear OS smartwatches, Google launched Jetpack Compose for Wear OS a while back. The company announced at I/O that Jetpack Compose for Wear OS 1.0 is now in beta. That means it’s feature complete and its API is stable, so Google now recommends using it to build production-ready apps. Since the developer preview, Google has added a number of components, such as navigation, scaling lazy lists, input and gesture support, and more.

Here’s a summary of some of the components in Jetpack Compose for Wear OS that developers can use to build layouts:

- The Wear OS version of navigation is built on a swipe-to-dismiss box. The box moves only when the user swipes to dismiss and resists swipes in the opposite direction.

- ScalingLazyColumn is a scaling fisheye list component that can handle large numbers of content items. It provides scaling and transparency effects, making it easy to build beautiful and smooth lists on Wear OS. Its default behavior has been updated to follow the Wear OS design specification. The changes include updated scaling parameters, new default extra padding, and sizing based on the list’s contents. The new autoCentering property ensures that all items can be scrolled into the center of the viewport.

- ScalingLazyColumn also supports a new anchorType property that controls whether an item’s center or its start edge is aligned to the viewport’s center line.

- The Picker composable lets users select an item from a scrolling list. By default, the list repeats infinitely in both directions. Developers can set the initial number of options and the initially selected option. The repeatItems property controls whether the items repeat infinitely.

- InlineSlider lets users make a selection from a range of values, shown as a bar between the minimum and maximum values of the range.

- Stepper is a full screen control component letting users make a selection from a range of values.

- The Dialog component displays a full-screen dialog overlaid on any other content. It takes a single slot that’s expected to hold an alert or confirmation message, and it can be swiped to dismiss.

- CircularProgressIndicator is used to display progress by animating an indicator along the circular track in a clockwise direction.
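A couple of these components come down to simple index arithmetic. The Python sketch below models two behaviors. The first is how a repeating Picker might map a scroll position to an option: with item repetition on, positions wrap around in both directions, and with it off, they are clamped to the first or last option. The second is how a Stepper or InlineSlider might clamp a value at its range limits. `picker_option` and `step` are hypothetical helpers, since Compose for Wear OS handles this internally.

```python
def picker_option(scroll_index, option_count, repeat_items=True):
    """Map a scroll position to an option index.

    With repeat_items, positions wrap around in both directions, like an
    endless wheel; without it, they are clamped to the first or last option.
    """
    if option_count <= 0:
        raise ValueError("option_count must be positive")
    if repeat_items:
        return scroll_index % option_count  # Python's % never goes negative here
    return min(max(scroll_index, 0), option_count - 1)


def step(value, delta_steps, lo, hi, step_size):
    """Move a value by whole steps within [lo, hi], clamping at the ends,
    the way a Stepper or InlineSlider button press might."""
    return min(max(value + delta_steps * step_size, lo), hi)


print(picker_option(-1, 12))                      # 11  (wraps backwards)
print(picker_option(13, 12))                      # 1
print(picker_option(13, 12, repeat_items=False))  # 11
print(step(9, +1, lo=0, hi=10, step_size=2))      # 10
```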

In order to visualize and test these designs, developers are encouraged to try out Android Studio Electric Eel, which brings Wear composable previews, Live Edit, and Project Templates.

Developers can also take a look at Horologist, a set of open source libraries that extend the functionality provided by Compose for Wear OS. For example, it provides a navigation-aware scaffold with time text and position indicators that stay in sync with scrolling and navigating screen changes, beautiful Material date and time pickers, and media UI components including playback control and volume screens.

On the connected health and fitness front, Google announced that Health Services is now in Beta, with a stable release coming soon. As a reminder, Health Services lets apps take advantage of modern smartwatch architecture, offloading data collection and processing to a low-power processor, allowing the application processor to be suspended for extended periods of time. This enables Health Services to consume much less power than alternative APIs while delivering high-frequency data. Plus, as new sensors become available on new smartwatches, Google will continue to add new data types to the Health Services API.

With the Beta release, Health Services is getting more Kotlin-friendly changes and three different clients that map to the core use cases for health and fitness. The exercise client handles the state management and sensor control for workouts, providing metrics like heart rate, distance, location, and speed. It also does goal tracking for things like step counts and calories and supports auto-pause. The passive monitoring client offers a power-efficient way to collect standard all-day metrics like the step count, and it lets apps be notified of events like when a user meets their daily goals. Lastly, the measure client offers a way to provide high fidelity, instantaneous measurements of metrics like the heart rate.
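Passive goal tracking is a good example of why batching matters. If data collection is offloaded to a low-power processor, updates arrive in batches rather than per step, and the app only needs to hear about meaningful events. The toy Python model below accumulates batched step counts and fires a callback once per day when a daily goal is reached. The `PassiveStepGoalMonitor` class, its callback, and the goal value are hypothetical and not part of the Health Services API.

```python
class PassiveStepGoalMonitor:
    """Toy model of passive goal tracking: step counts arrive in batches,
    and a callback fires once per day when the daily goal is reached."""

    def __init__(self, daily_goal, on_goal_reached):
        self.daily_goal = daily_goal
        self.on_goal_reached = on_goal_reached
        self.day = None
        self.steps_today = 0
        self.notified = False

    def on_batch(self, day, step_delta):
        if day != self.day:  # first batch of a new day: reset the counters
            self.day, self.steps_today, self.notified = day, 0, False
        self.steps_today += step_delta
        if not self.notified and self.steps_today >= self.daily_goal:
            self.notified = True
            self.on_goal_reached(day, self.steps_today)


events = []
monitor = PassiveStepGoalMonitor(10_000, lambda day, steps: events.append((day, steps)))
for day, delta in [("Mon", 6_000), ("Mon", 4_500), ("Mon", 3_000), ("Tue", 2_000)]:
    monitor.on_batch(day, delta)
print(events)  # [('Mon', 10500)]
```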

In addition to Health Services, Google now offers Health Connect, a service that apps can use to store health and fitness data on the user’s mobile device. Health Services collects real-time sensor data, while Health Connect stores that data on the user’s phone. With the user’s permission, apps can access this shared data store and share data with one another.

For consumers, Google is bringing emergency SOS to Wear OS, so users can reach emergency services or their emergency contacts. Google is working with its partners to bring this feature later this year. In addition, the company is preparing to launch a Google Home app on the platform for the first time, letting users control their Google Home smart devices from their wrists. Lastly, Google is bringing Fitbit services to Wear OS, though initially only on its own Pixel Watch.

What's coming to Android for cars (Android Auto and Android Automotive)?

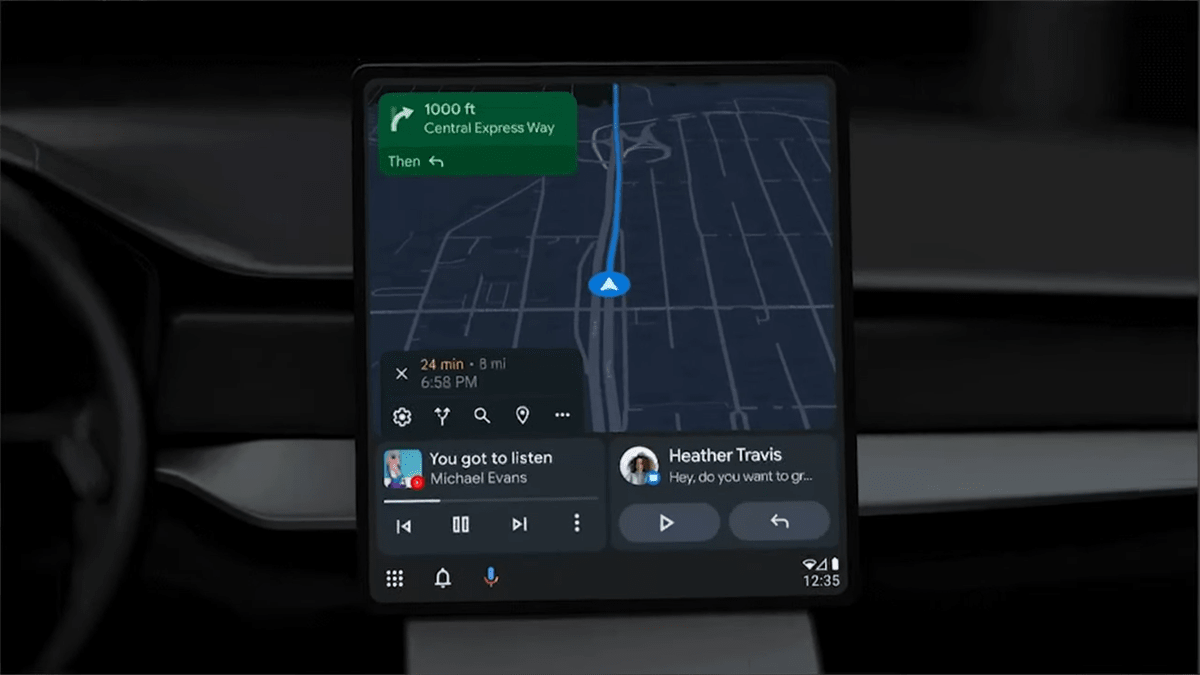

During the “what’s new with Android for cars” talk, Google revealed that there are now over 150 million Android Auto-compatible cars. More importantly, it unveiled a brand new design for Android Auto that brings split-screen functionality to all screen sizes and form factors. The design is adaptive and responsive, optimizing its layout for any screen and scaling to even the largest portrait and widescreen displays.

Google also updated the Assistant experience for Auto, integrating it so it can provide relevant alerts and contextual suggestions. Google Assistant can respond to calls and play recommended music with your voice commands. Google also introduced a set of streamlined flows to quickly message and call your favorite contacts with a single tap as well as the ability to reply to messages by selecting a suggested response on the screen.

Developers can build car-specific Assistant experiences using features like App Actions. The Android for Cars App Library supports two App Actions built-in intents (BIIs) that are relevant to on-the-go use cases: GET_PARKING_FACILITY and GET_CHARGING_STATION. Apps respond to these intents by creating an intent filter for deep links in the car app activity. The Car App Library only supports deep link fulfillment, but the team is working on Android intent support.
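Because the Car App Library only supports deep link fulfillment for these BIIs, the app's job on receiving one is essentially URL routing. The Python sketch below shows that routing step. The `myapp://` scheme, the paths, the `near` parameter, and the screen names are hypothetical. In a real app, they would be whatever the app declares for `actions.intent.GET_PARKING_FACILITY` and `actions.intent.GET_CHARGING_STATION`.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical deep-link paths an app might declare for the two BIIs.
ROUTES = {
    "/parking": "ParkingSearchScreen",    # actions.intent.GET_PARKING_FACILITY
    "/charging": "ChargingSearchScreen",  # actions.intent.GET_CHARGING_STATION
}


def route_deep_link(uri):
    """Return (screen, params) for a deep link, defaulting to the home screen."""
    parsed = urlparse(uri)
    screen = ROUTES.get(parsed.path, "HomeScreen")
    # parse_qs decodes percent-escapes and returns lists; keep the first value.
    params = {key: values[0] for key, values in parse_qs(parsed.query).items()}
    return screen, params


print(route_deep_link("myapp://example.com/charging?near=Mountain%20View"))
# ('ChargingSearchScreen', {'near': 'Mountain View'})
```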

When it comes to Android Automotive, the Android OS for in-car infotainment systems, Google is rolling out the ability to install and use video streaming apps. Users will be able to use apps like Tubi TV, YouTube, Epix, and more from their in-car infotainment systems. Google is also bringing more parked experiences like browsing to cars.

For developers, Google reiterated that all developers can publish supported apps directly to production on both Android Auto and Android Automotive. It also discussed new templates and expansions to existing car app categories, including adding driver apps to the Navigation category. The charging and parking categories have been expanded to include more point-of-interest apps, like Auto Pass and Fuelio, to help users find the places they want to go.

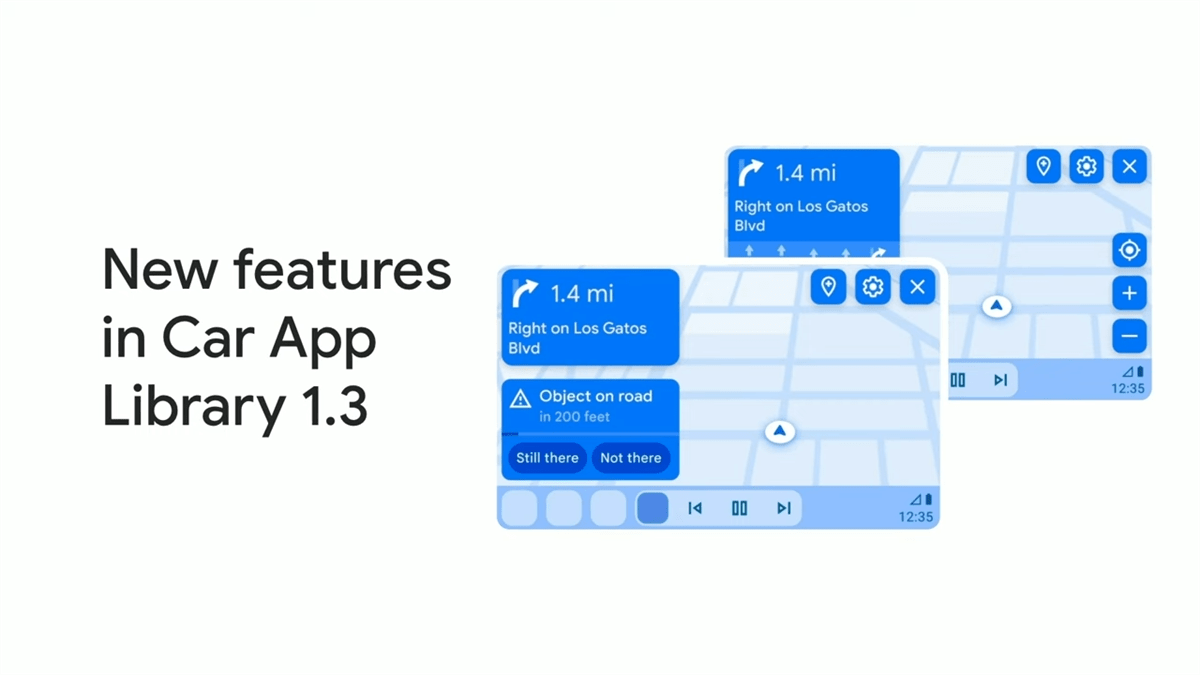

With the Android for Cars App Library v1.3, the navigation feature set has been enriched. Google is adding map interactivity in all map templates, content refresh to dynamically update point-of-interest lists, navigation to multiple destinations, and alerters to notify users of events like a traffic camera ahead or a new rider request.

Although Google I/O is Google’s largest developer conference of the year, it isn’t the only important one that Android developers should keep an eye out for. For those developers who are too busy developing, the Esper blog will continue to provide news and updates on the Android OS platform and ecosystem.